StyleGAN2 Distillation for Feed-forward Image Manipulation


Yuri Viazovetskyi* (1), Vladimir Ivashkin (1, 2), Evgeny Kashin (1)

(1) Yandex; (2) Moscow Institute of Physics and Technology; * equal contribution

ABSTRACT

StyleGAN2 is a state-of-the-art network for generating realistic images. Moreover, it was explicitly trained to have disentangled directions in latent space, which allows efficient image manipulation by varying latent factors. Editing existing images requires embedding a given image into the latent space of StyleGAN2. Latent code optimization via backpropagation is commonly used for high-quality embedding of real-world images, although it is prohibitively slow for many applications. We propose a way to distill a particular image manipulation of StyleGAN2 into an image-to-image network trained in a paired way. The resulting pipeline is an alternative to existing GANs trained on unpaired data. We provide results of human face transformations: gender swap, aging/rejuvenation, style transfer, and image morphing. We show that the quality of generation using our method is comparable to StyleGAN2 backpropagation and current state-of-the-art methods in these particular tasks.


Keywords:

Computer Vision, StyleGAN2, distillation, synthetic data

Figure 1: Image manipulation examples generated by our method from (a) a source image: (b) gender swap at 1024x1024 and (c) style mixing at 512x512. Samples are generated feed-forward.

1 INTRODUCTION


Generative adversarial networks (GANs) [17] have created wide opportunities in image manipulation. The general public is familiar with them through the many applications that offer to change one's face in some way: make it older or younger, add glasses, a beard, etc.

Two types of networks can perform such translations feed-forward: those trained on paired datasets and those trained on unpaired ones. In practice, only unpaired datasets are used for these tasks, and the methods applied to them are based on cycle consistency [60]. The follow-up studies [23, 10, 11] reach a maximum resolution of 256x256.


At the same time, existing paired methods (e.g. pix2pixHD [54] or SPADE [41]) support resolutions up to 2048x1024. But it is very difficult or even impossible to collect a paired dataset for tasks such as age manipulation. For each person, such a dataset would have to contain photos taken at different ages, with the same head position and facial expression. Datasets close to this exist, e.g. CACD [8] and AgeDB [39], although their photos differ in expression and face orientation. To the best of our knowledge, they have never been used to train neural networks in a paired mode.


These obstacles can be overcome by making a synthetic paired dataset, provided we solve two known issues of dataset generation: the appearance gap [21] and the content gap [27]. Here, unconditional generation methods such as StyleGAN [29] can be of use. Judging by its low FID, StyleGAN generates images whose quality is close to that of real-world images and whose distribution is close to the real one. Thus, the output of this generative model can be a good substitute for real-world images. The properties of its latent space make it possible to create sets of images that differ in particular parameters. The path length regularization added in the second version of StyleGAN [30] (path length was introduced as a quality measure in [29]) makes the latent space even more suitable for manipulation.


Basic operations in the latent space correspond to particular image manipulations: adding a vector, linear interpolation, and crossover in latent space lead to expression transfer, morphing, and style transfer, respectively. A distinctive feature of both versions of the StyleGAN architecture is that the latent code is applied several times, at different layers of the network. Changing the vector for some of the layers changes the generated image at the corresponding scales. The authors group the spatial resolutions used during generation into coarse, middle, and fine ones. It is thus possible to combine two people by using one person's code at one scale and the other person's code at another.
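The three basic operations can be sketched on W+ codes stored as plain Python lists, one vector per generator layer. This is only an illustration under assumptions: real codes are 512-dimensional and would be passed to a StyleGAN2 generator that is not shown, the function names are hypothetical, and we assume, as in StyleGAN, that lower layer indices control coarser scales.

```python
from typing import List

Vector = List[float]
WPlus = List[Vector]  # hypothetical layout: one w vector per generator layer


def add_direction(codes: WPlus, delta: Vector, scale: float = 1.0) -> WPlus:
    """Vector addition: shift every layer's code along an attribute direction."""
    return [[wi + scale * di for wi, di in zip(w, delta)] for w in codes]


def interpolate(a: WPlus, b: WPlus, alpha: float) -> WPlus:
    """Linear interpolation (morphing): alpha=0 returns a, alpha=1 returns b."""
    return [[(1 - alpha) * x + alpha * y for x, y in zip(wa, wb)]
            for wa, wb in zip(a, b)]


def crossover(a: WPlus, b: WPlus, layers_from_b: range) -> WPlus:
    """Style mixing: take b's codes for the chosen layers and a's codes elsewhere."""
    return [list(wb) if i in layers_from_b else list(wa)
            for i, (wa, wb) in enumerate(zip(a, b))]
```

For example, `crossover(a, b, range(2, 4))` on a 4-layer code keeps person a at the two lowest-index (coarse) layers and takes person b's codes for the finer ones.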


The operations mentioned above are easy to perform for images with known embeddings. For many entertainment purposes, however, it is vital to manipulate an existing real-world image on the fly, e.g. to edit a photo that has just been taken. Unfortunately, all successful searches in latent space described in the literature use backpropagation [2, 1, 15, 30, 46]. Feed-forward encoding has only been reported to work as an initial state for latent code optimization [5]. Slow inference severely limits the use of StyleGAN2 image manipulation in production: it is expensive in a data center and almost impossible to run on a device. However, there are examples of backpropagation running in production, e.g. [47].


In this paper we consider the opportunity to distill [20, 4] a particular image manipulation of the StyleGAN2 generator trained on the FFHQ dataset. Distillation allows us to extract information about the appearance of faces and the ways they can change (e.g. aging, gender swap) from StyleGAN into an image-to-image network. We propose a way to generate a paired dataset and then train a "student" network on the gathered data. This method is very flexible and is not limited to a particular image-to-image model.


Although the resulting image-to-image network is trained only on generated samples, we show that it performs on real-world images on par with StyleGAN backpropagation and current state-of-the-art algorithms trained on unpaired data.

Our contributions are summarized as follows:

- We create synthetic datasets of paired images to solve several tasks of image manipulation on human faces: gender swap, aging/rejuvenation, style transfer and face morphing;

- We show that it is possible to train image-to-image network on synthetic data and then apply it to real world images;

- We study the qualitative and quantitative performance of image-to-image networks trained on the synthetic datasets;

- We show that our approach outperforms existing approaches in the gender swap task.

We publish all collected paired datasets for reproducibility and future research: https://github.com/EvgenyKashin/stylegan2-distillation.


2 RELATED WORK

Unconditional image generation

Following the success of ProgressiveGAN [28] and BigGAN [6], StyleGAN [29] became the state-of-the-art image generation model. This was achieved by rethinking the generator architecture and borrowing approaches from style transfer networks: a mapping network and AdaIN [22], constant input, noise addition, and mixing regularization. The next version, StyleGAN2 [30], removes the artifacts of the first version by revising AdaIN and improves disentanglement by using perceptual path length as a regularizer.


The mapping network is a key component of StyleGAN: it transforms the latent space Z into a less entangled intermediate latent space W. Instead of the latent z ∈ Z sampled from a normal distribution, the code w ∈ W produced by the mapping network f: Z → W is fed to AdaIN. It is also possible to sample vectors from the extended space W+, which consists of multiple independent samples of W, one for each layer of the generator. Varying w at different layers changes details of the generated picture at different scales.
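For reference, these spaces can be written out as follows. The AdaIN form is the one from the original StyleGAN [29], which StyleGAN2 revises [30]; L (the number of style inputs) and the per-layer learned affine transform A_ℓ are notation introduced here.

```latex
\begin{aligned}
  w &= f(z), \qquad z \sim \mathcal{N}(0, I), \qquad f : \mathcal{Z} \to \mathcal{W},\\
  w^{+} &= (w_1, \dots, w_L) \in \mathcal{W}^{L} \quad \text{(one code per generator layer)},\\
  \operatorname{AdaIN}(x_i, y) &= y_{s,i}\,\frac{x_i - \mu(x_i)}{\sigma(x_i)} + y_{b,i},
  \qquad (y_s, y_b) = A_\ell(w_\ell).
\end{aligned}
```

Here x_i is the i-th feature map of layer ℓ, and μ, σ are its spatial mean and standard deviation. Feeding the same w to every layer recovers the ordinary W space.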


Latent codes manipulation

It was recently shown [16, 26] that linear operations in the latent space of a generator allow successful image manipulation in a variety of domains and with various GAN architectures. GANalyze [16] searches for interpretable directions in the latent space of BigGAN [6], using MemNet [31] as an "assessor" network. Jahanian et al. [26] show that walking in latent space leads to interpretable changes in different model architectures: BigGAN, StyleGAN, and DCGAN [42].


To manipulate real images in the latent space of StyleGAN, one needs to find their embeddings in it. The method of searching for an embedding in the intermediate latent space via backpropagation-based optimization is described in [2, 1, 15, 46]. The authors use non-trivial loss functions to find an image that is both close to the target and perceptually good, and show that embeddings fit better in the extended space W+. Gabbay et al. [15] show that the StyleGAN generator can be used as a general-purpose image prior. Shen et al. [46] show that the appearance of a generated person, including age, gender, eyeglasses, and pose, can be manipulated for both PGGAN [28] and StyleGAN. The authors of StyleGAN2 [30] propose searching for embeddings in W instead of W+ to check whether a picture was generated by StyleGAN2.


Paired Image-to-image translation

Pix2pix [25] is one of the first conditional generative models applied to image-to-image translation. It learns a mapping from input to output images. Chen and Koltun [9] propose the first model that can synthesize 2048x1024 images. It is followed by pix2pixHD [54] and SPADE [41]. In the SPADE generator, each normalization layer uses the segmentation mask to modulate the layer activations, so its usage is limited to translation from segmentation maps. There are numerous follow-up works based on the pix2pixHD architecture, including those working with video [7, 52, 53].


Unpaired Image-to-image translation

The idea of applying cycle consistency to train on unpaired data was first introduced in CycleGAN [60]. Methods of unpaired image-to-image translation can be either single-mode GANs [60, 58, 35, 10] or multimodal GANs [61, 23, 32, 33, 36, 11]. FUNIT [36] supports multi-domain image translation using a few reference images from a target domain. StarGAN v2 [11] provides both latent-guided and reference-guided synthesis. All of the above-mentioned methods operate at a resolution of at most 256x256 when applied to human faces.


Gender swap is one of the well-known tasks of unsupervised image-to-image translation [10, 11, 37].

Face aging/rejuvenation is a special task that has received a lot of attention [59, 49, 18]. The formulation of the problem can vary. The simplest version of this task is making faces look older or younger [10]. A more difficult task is to produce faces matching particular age intervals [34, 55, 57, 37]. S2GAN [18] changes age continuously by interpolating the weights of the transforms that correspond to the two closest age groups.


Training on synthetic data

Synthetic datasets are widely used to extend datasets for some analysis tasks (e.g. classification). In many cases, a simple graphics engine can be used to generate synthetic data. To perform well on real-world images, such data need to overcome both the appearance gap [21, 14, 50, 51, 48] and the content gap [27, 45].

Ravuri et al. [43] study the quality of a classifier trained on synthetic data generated by BigGAN and show [44] that BigGAN does not capture the ImageNet [13] data distribution and is only partly successful for data augmentation. Shrivastava et al. [48] reduce the quality drop of this approach by revising the training setup.


Synthetic data is what underlies knowledge distillation, a technique for training a "student" network on data generated by a "teacher" network [20, 4]. This additional source of data can be used to improve metrics [56] or to reduce the size of the target model [38]. Aguinaldo et al. [3] show that knowledge distillation is successfully applicable to generative models.


3 METHOD OVERVIEW

3.1 DATA COLLECTION

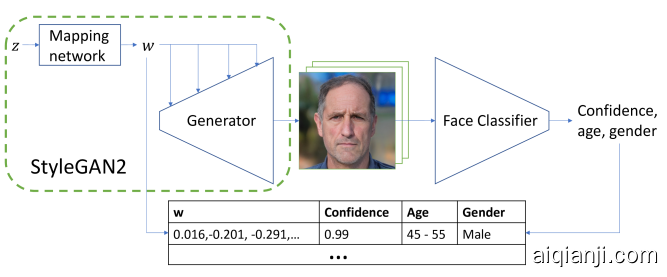

Figure 2: Method of finding correspondence between latent codes and facial attributes.


All of the images in our datasets are generated using the official implementation of StyleGAN2 (https://github.com/NVlabs/stylegan2). In addition, we only use the config-f checkpoint pretrained by the authors of StyleGAN2 on the FFHQ dataset. All manipulations are performed with the disentangled image codes w.


We use the most straightforward way of generating datasets for style mixing and face morphing. Style mixing is described in [29] as a regularization technique and requires two intermediate latent codes w1 and w2 used at different scales. Face morphing corresponds to linear interpolation of intermediate latent codes w. We generate 50 000 samples for each task. Each sample consists of two source images and a target image. Each source image is obtained by randomly sampling z from a normal distribution, mapping it to an intermediate latent code w, and generating the image g(w) with StyleGAN2. We produce the target image by performing the corresponding operation on the latent codes and feeding the result to StyleGAN2.


Face attributes, such as gender or age, are not explicitly encoded in the StyleGAN2 latent space or intermediate space. To overcome this limitation, we use a separate pretrained face classification network. Its outputs include face detection confidence, age bin, and gender. The network is proprietary, so we release the final versions of our gender and age datasets to maintain full reproducibility of this work (https://github.com/EvgenyKashin/stylegan2-distillation).


We create the gender and age datasets in four major steps. First, we generate an intermediate dataset that maps latent vectors to target attributes, as illustrated in Fig. 2. Second, we find the direction in latent space associated with the attribute. Third, we generate a raw dataset using this direction vector, as briefly described in Fig. 3. Finally, we filter the images to get the final dataset. The method is described below in more detail.


- Generate random latent vectors $z_1, \dots, z_n$, map them to intermediate latent codes $w_1, \dots, w_n$, and generate the corresponding image samples $g(w_i)$ with StyleGAN2.

- Get attribute predictions from the pretrained neural network $f$: $c(w_i) = f(g(w_i))$.

- Filter out images where faces were detected with low confidence. (This helps to reduce generation artifacts in the dataset while maintaining high variability, as opposed to lowering the truncation-psi parameter.) Then select only images with high classification certainty.

- Find the center of every class, $C_k = \frac{1}{n_{c=k}} \sum_{c(w_i)=k} w_i$, and the transition vectors from one class to another, $\Delta_{c_i, c_j} = C_j - C_i$.

- Generate random samples $z_i$ and pass them through the mapping network. For the gender swap task, create a set of five images $g(w-\Delta), g(w-\Delta/2), g(w), g(w+\Delta/2), g(w+\Delta)$. For aging/rejuvenation, first predict the faces' attributes $c(w_i)$, then use the corresponding vectors $\Delta_{c(w_i)}$ to generate faces that should be two bins older/younger.

- Get predictions for every image in the raw dataset. Filter out by confidence.

- From every set of images, select a pair based on classification results. Each image must belong to the corresponding class with high certainty.
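The class-center and transition-vector steps above can be sketched as follows, assuming the filtered codes w_i and their predicted class labels c(w_i) are already available as Python lists and integers. The function names are hypothetical, and the StyleGAN2 generator g that would render the five codes is not shown.

```python
from collections import defaultdict
from typing import Dict, List, Sequence

Vector = List[float]


def class_centers(codes: Sequence[Vector], labels: Sequence[int]) -> Dict[int, Vector]:
    """C_k: mean of the codes w_i whose predicted class c(w_i) equals k."""
    groups: Dict[int, List[Vector]] = defaultdict(list)
    for w, k in zip(codes, labels):
        groups[k].append(w)
    # zip(*ws) walks over coordinates, so each column is averaged separately
    return {k: [sum(col) / len(ws) for col in zip(*ws)] for k, ws in groups.items()}


def transition(centers: Dict[int, Vector], src: int, dst: int) -> Vector:
    """Delta_{src,dst} = C_dst - C_src."""
    return [cj - ci for ci, cj in zip(centers[src], centers[dst])]


def gender_sweep(w: Vector, delta: Vector) -> List[Vector]:
    """Codes for g(w - D), g(w - D/2), g(w), g(w + D/2), g(w + D)."""
    return [[x + t * d for x, d in zip(w, delta)]
            for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
```

For aging/rejuvenation the same `transition` helper would give the vector from the predicted age bin to the bin two steps away, under the assumption that bins are indexed by consecutive integers.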

Once we have aligned data, a paired image-to-image translation network can be trained.

Figure 3: Dataset generation. We first sample random vectors z from a normal distribution. Then for each z we generate a set of images along the vector Δ corresponding to a facial attribute. Then for each set of images we select the best pair based on classification results.

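The pair-selection step can be sketched as below. The text does not specify the exact criterion, so both the certainty threshold (0.9) and the rule of keeping the pair with the highest product of class probabilities are assumptions made only for illustration.

```python
from typing import Optional, Sequence, Tuple


def select_pair(
    p_source: Sequence[float],
    p_target: Sequence[float],
    threshold: float = 0.9,  # hypothetical certainty threshold
) -> Optional[Tuple[int, int]]:
    """Pick (source_idx, target_idx) from one generated set of images.

    p_source[i] / p_target[i] is the classifier probability that image i
    belongs to the source / target class. Both images must pass `threshold`;
    among valid pairs, keep the one with the highest joint certainty.
    Returns None when the set contains no valid pair (the set is dropped).
    """
    best, best_score = None, -1.0
    for i, ps in enumerate(p_source):
        if ps < threshold:
            continue
        for j, pt in enumerate(p_target):
            if j == i or pt < threshold:
                continue
            score = ps * pt
            if score > best_score:
                best, best_score = (i, j), score
    return best
```

For a five-image gender sweep, a set whose extremes are classified confidently yields a pair, while a set in which no image is confidently classified yields None and would be discarded.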

3.2 TRAINING PROCESS

In this work, we focus on illustrating the general approach rather than solving every task as well as possible. As a result, we choose to train pix2pixHD [54] (https://github.com/NVIDIA/pix2pixHD) as a unified framework for image-to-image translation instead of selecting a custom model for every type of task.

pix2pixHD is known to produce blob artifacts (https://github.com/NVIDIA/pix2pixHD/issues/46) and also tends to repeat patterns [41]. The problem of repeated patterns is solved in [29, 41], and light blobs are a problem solved in StyleGAN2.


We suppose that similar treatment could also be applied to pix2pixHD. Fortunately, even vanilla pix2pixHD trained on our datasets produces sufficiently good results with few or no artifacts. Thus, we leave improving or replacing pix2pixHD for future work. We run most of our experiments and comparisons at 512x512 resolution, but also try 1024x1024 for gender swap.

Style mixing and face averaging tasks require two input images to be fed to the network at the same time. This is done by setting the number of input channels to 6 and concatenating the inputs along the channel axis.
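A minimal sketch of building the 6-channel input, assuming channel-first images stored as nested Python lists; the helper name is hypothetical. In a tensor framework the same step is a single concatenation along the channel dimension, e.g. `torch.cat([a, b], dim=1)` for NCHW batches.

```python
from typing import List

Image = List[List[List[float]]]  # channels x height x width


def concat_channels(first: Image, second: Image) -> Image:
    """Stack two RGB images into one 6-channel input (channel-first layout)."""
    if len(first) != 3 or len(second) != 3:
        raise ValueError("expected two 3-channel (RGB) images")
    if (len(first[0]), len(first[0][0])) != (len(second[0]), len(second[0][0])):
        raise ValueError("images must share the same spatial size")
    # channels 0-2 come from the first source, channels 3-5 from the second
    return [[list(row) for row in ch] for ch in first + second]
```

The order of the two sources matters for style mixing, since the network learns which input supplies which scales.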


4 EXPERIMENTS

Although StyleGAN2 can be trained on data of different nature, we concentrate only on face data. We apply our method to several tasks: gender swap, aging/rejuvenation, style mixing, and face morphing. In all our experiments, we collect data from StyleGAN2 trained on the FFHQ dataset [29].


4.1 EVALUATION PROTOCOL

Only the gender transformation task (in both directions) is used for evaluation. We use the Fréchet Inception Distance (FID) [19] for quantitative comparison of methods, as well as human evaluation.

For each feed-forward baseline, we calculate FID between 50 000 real images from the FFHQ dataset and 20 000 generated images, using 20 000 images from FFHQ as source images. Each source image is transformed to the other gender, with the source gender determined by our classification model. Before calculating FID, all images are resized to 256x256 for a fair comparison.
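For reference, the standard FID definition [19] compares Gaussian fits to Inception network activations of two image sets. In the protocol above, the real statistics would come from the 50 000 FFHQ images and the generated statistics from the 20 000 translated images; lower values are better.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

Here μ_r, Σ_r and μ_g, Σ_g are the mean and covariance of the activations for the real and generated images, respectively.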


We also use human evaluation for a more accurate comparison with optimization-based methods. Our study consists of two surveys. In the first, crowdsourced workers were asked to select the image that shows the better translation from one gender to the other, given a source image. They were also instructed to consider the preservation of the person's identity, clothes, and accessories. We refer to this measure as "quality". The second survey asked workers to select the most realistic image, and no source image was provided. All images were resized to 512x512 in this comparison.


The first survey shows which method performs the transformation best, and the second shows which produces the most realistic images. For both surveys we use side-by-side experiments in which one side is our method and the other is one of the optimization-based baselines. Answer choices are shuffled. For each comparison of our method with a baseline, we generate 1000 questions, and each question is answered by 10 different people. To aggregate the answers, we use the Dawid-Skene method [12] and filter out examples with a confidence level below 95% (approximately 4% of all questions).


4.2 DISTILLATION OF IMAGE-TO-IMAGE TRANSLATION

Gender swap

We generate a paired dataset of male and female faces according to the method described above and then train a separate pix2pixHD model for each direction of gender translation.


(a) Female to male; (b) Male to female

Figure 4: Gender transformation: comparison with image-to-image translation approaches. MUNIT and StarGAN v2* are multimodal so we show one random realization there.


We compare our method with both unpaired image-to-image methods and various StyleGAN embedders based on latent code optimization. As unpaired baselines, we choose StarGAN [10] (https://github.com/yunjey/stargan), MUNIT [24] (https://github.com/NVlabs/MUNIT), and StarGAN v2* [11] (https://github.com/taki0112/StarGAN_v2-Tensorflow; an unofficial implementation, so its results may differ from the official one). We train all these methods on FFHQ images classified into males and females.


Fig. 4 shows a qualitative comparison between our approach and unpaired image-to-image methods. It demonstrates that the distilled transformation has significantly better visual quality and more stable results. The quantitative comparison in Table 1(a) confirms our observations.


StyleGAN2 provides an official projection method. This method operates in W, which only allows it to find faces generated by this model, not real-world images. Therefore, for comparison we also build a similar method for W+. It optimizes a separate w for each layer of the generator, which helps to reconstruct a given image more accurately. After finding w, we add the transformation vector described above and generate the transformed image.

We also include in the comparison previous approaches for finding latent codes [40, 5], even though they are based on the first version of StyleGAN. StyleGAN Encoder [5] extends [40] with more advanced loss functions and a feed-forward approximation of the optimization starting point, which improve reconstruction results.
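The W+ projection baseline can be illustrated with a toy stand-in. Real projection backpropagates a perceptual reconstruction loss through the full StyleGAN2 generator; here, purely as an assumption for illustration, the "generator" is linear (each layer's code is multiplied by its own matrix and the results are summed), so the gradient can be written by hand. The structure follows the description above: one code per layer, gradient steps on the reconstruction error, then adding a transformation vector to every layer's code. All names are hypothetical.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = output pixels, columns = code dimensions


def render(mats: List[Matrix], codes: List[Vector]) -> Vector:
    """Toy linear 'generator': image = sum over layers l of A_l @ w_l."""
    out = [0.0] * len(mats[0])
    for a, w in zip(mats, codes):
        for r, row in enumerate(a):
            out[r] += sum(x * y for x, y in zip(row, w))
    return out


def loss(mats: List[Matrix], codes: List[Vector], target: Vector) -> float:
    """Squared L2 reconstruction error (a stand-in for perceptual losses)."""
    return sum((p - t) ** 2 for p, t in zip(render(mats, codes), target))


def project_wplus(mats: List[Matrix], target: Vector, dim: int,
                  steps: int = 500, lr: float = 0.01, seed: int = 0) -> List[Vector]:
    """Optimize a separate code per layer by plain gradient descent."""
    rng = random.Random(seed)
    codes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in mats]
    for _ in range(steps):
        residual = [p - t for p, t in zip(render(mats, codes), target)]
        for a, w in zip(mats, codes):
            # dL/dw_l = 2 * A_l^T (render(codes) - target); one residual per step
            for c in range(dim):
                w[c] -= lr * 2.0 * sum(a[r][c] * residual[r] for r in range(len(a)))
    return codes


def add_to_all_layers(codes: List[Vector], delta: Vector) -> List[Vector]:
    """Apply an attribute direction (e.g. a gender vector) to every layer's code."""
    return [[x + d for x, d in zip(w, delta)] for w in codes]
```

An edit would then be `render(mats, add_to_all_layers(project_wplus(mats, image, dim), delta))`. The per-image optimization loop is exactly the cost that a distilled feed-forward network avoids.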


| Method | FID (lower is better) |
|---|---|
| StarGAN [10] | 29.7 |
| MUNIT [23] | 40.2 |
| StarGAN v2* | 25.6 |
| Ours | 14.7 |
| Real images | 3.3 |

(a) Comparison with unpaired image-to-image methods


| Method | Quality | Realism |
|---|---|---|
| StyleGAN Encoder [40] | 18% | 14% |
| StyleGAN Encoder [5] | 30% | 68% |
| StyleGAN2 projection (W) | 22% | 22% |
| StyleGAN2 projection (W+) | 11% | 14% |
| Real images | - | 85% |

(b) User study of StyleGAN-based approaches: win rate of each method against ours.

Table 1: Quantitative comparison. We use FID as the main measure for comparison with other image-to-image translation baselines. For all StyleGAN-based approaches we run a user study, because we consider human evaluation a more reliable measure of perceptual quality.

Figure 5: Gender transformation: comparison with StyleGAN2 latent code optimization methods. Input samples are real images from FFHQ. Notice that unusual objects are lost with optimization but kept with image-to-image translation.

Since unpaired methods show significantly worse quality, we put more effort into comparing different methods of searching for embeddings through optimization. We avoid FID here because all of these methods are based on the same StyleGAN model. Also, FID cannot measure the "quality of transformation" because it does not check whether the person's identity is preserved. Therefore, we make the user study our main measure for all StyleGAN-based methods. Fig. 5 shows a qualitative comparison of all the methods. Our method performs better in terms of transformation quality, and only StyleGAN Encoder [5] outperforms it in realism. However, this method generates the background unconditionally.


We find that pix2pixHD preserves more details in the transformed images than all the encoders. We suppose that this is achieved due to the ability of pix2pixHD to pass part of the u