s1: Simple test-time scaling

Niklas Muennighoff * 1 3 4, Zitong Yang * 1, Weijia Shi, Xiang Lisa Li, Li Fei-Fei 1, Hannaneh Hajishirzi 2 3, Luke Zettlemoyer, Percy Liang, Emmanuel Candès, Tatsunori Hashimoto

Abstract

Test-time scaling is a promising new approach to language modeling that uses extra test-time compute to improve performance. Recently, OpenAI’s o1 model showed this capability but did not publicly share its methodology, leading to many replication efforts. We seek the simplest approach to achieve test-time scaling and strong reasoning performance. First, we curate a small dataset s1K of 1,000 questions paired with reasoning traces relying on three criteria we validate through ablations: difficulty, diversity, and quality. Second, we develop budget forcing to control test-time compute by forcefully terminating the model’s thinking process or lengthening it by appending “Wait” multiple times to the model’s generation when it tries to end. This can lead the model to double-check its answer, often fixing incorrect reasoning steps. After supervised finetuning the Qwen2.5-32B-Instruct language model on s1K and equipping it with budget forcing, our model s1-32B exceeds o1-preview on competition math questions by up to 27% (MATH and AIME24). Further, scaling s1-32B with budget forcing allows extrapolating beyond its performance without test-time intervention: from 50% to 57% on AIME24. Our model, data, and code are open-source at https://github.com/simplescaling/s1

Figure 1. Test-time scaling with s1-32B. We benchmark s1-32B on reasoning-intensive tasks and vary test-time compute.

1. Introduction

Performance improvements of language models (LMs) over the past years have largely relied on scaling up train-time compute using large-scale self-supervised pretraining (Kaplan et al., 2020; Hoffmann et al., 2022). The creation of these powerful models has set the stage for a new scaling paradigm built on top of them: test-time scaling. The aim of this approach is to increase the compute at test time to get better results. There has been much work exploring this idea (Snell et al., 2024; Welleck et al., 2024), and the viability of this paradigm was recently validated by OpenAI o1 (OpenAI, 2024). o1 has demonstrated strong reasoning performance with consistent gains from scaling test-time compute. OpenAI describes their approach as using large-scale reinforcement learning (RL), implying the use of sizable amounts of data (OpenAI, 2024). This has led to various attempts to replicate their models relying on techniques like Monte Carlo Tree Search (Gao et al., 2024b; Zhang et al., 2024a), multi-agent approaches (Qin et al., 2024), and others (Wang et al., 2024a; Huang et al., 2024b; 2025). Among these approaches, DeepSeek R1 (DeepSeek-AI et al., 2025) has successfully replicated o1-level performance, also employing reinforcement learning via millions of samples and multiple training stages. However, despite the large number of o1 replication attempts, none have openly replicated a clear test-time scaling behavior. Thus, we ask: what is the simplest approach to achieve both test-time scaling and strong reasoning performance?

We show that training on only 1,000 samples with next-token prediction and controlling thinking duration via a simple test-time technique we refer to as budget forcing leads to a strong reasoning model that scales in performance with more test-time compute. Specifically, we construct s1K, which consists of 1,000 carefully curated questions paired with reasoning traces and answers distilled from Gemini Thinking Experimental (Google, 2024). We perform supervised fine-tuning (SFT) of an off-the-shelf pretrained model on our small dataset, requiring just 26 minutes of training on 16 H100 GPUs. After training, we control the amount of test-time compute our model spends using budget forcing: (I) If the model generates more thinking tokens than a desired limit, we forcefully end the thinking process by appending an end-of-thinking token delimiter. Ending the thinking this way makes the model transition to generating its answer. (II) If we want the model to spend more test-time compute on a problem, we suppress the generation of the end-of-thinking token delimiter and instead append “Wait” to the model’s current reasoning trace to encourage more exploration. Equipped with this simple recipe – SFT on 1,000 samples and test-time budget forcing – our model s1-32B exhibits test-time scaling (Figure 1). Further, s1-32B is the most sample-efficient reasoning model and outperforms closed-source models like OpenAI’s o1-preview (Figure 2).

We conduct extensive ablation experiments targeting (a) our selection of 1,000 (1K) reasoning samples and (b) our test-time scaling. For (a), we find that jointly incorporating difficulty, diversity, and quality measures into our selection algorithm is important. Random selection, selecting samples with the longest reasoning traces, or only selecting maximally diverse samples all lead to significantly worse performance (around −30% on AIME24 on average). Training on our full data pool of 59K examples, a superset of s1K, does not offer substantial gains over our 1K selection. This highlights the importance of careful data selection and echoes prior findings for instruction tuning (Zhou et al., 2023). For (b), we define desiderata for test-time scaling methods to compare different approaches. Budget forcing leads to the best scaling as it has perfect controllability with a clear positive slope leading to strong performance.

In summary, our contributions are: We develop simple methods for creating a sample-efficient reasoning dataset (§2) and test-time scaling (§3); Based on these we build s1-32B which is competitive with o1-preview (§4); We ablate subtleties of data (§5.1) and test-time scaling (§5.2). We end with a discussion to motivate future work on simple reasoning (§6). Our code, model, and data are open-source at https://github.com/simplescaling/s1.

2. Reasoning data curation to create s1K

In this section, we describe our process for creating a large dataset first in §2.1 and then filtering it down to s1K in §2.2.

2.1. Initial collection of 59K samples

We collect an initial 59,029 questions from 16 diverse sources following three guiding principles. Quality: Datasets should be of high quality; we always inspect samples and ignore datasets with, e.g., poor formatting; Difficulty: Datasets should be challenging and require significant reasoning effort; Diversity: Datasets should stem from different fields to cover different reasoning tasks. We collect datasets of two categories:

Curation of existing datasets. Our largest source is NuminaMATH (LI et al., 2024) with 30,660 mathematical problems from online websites. We also include historical AIME problems (1983-2021). To enhance diversity, we add OlympicArena (Huang et al., 2024a) with 4,250 questions spanning Astronomy, Biology, Chemistry, Computer Science, Geography, Mathematics, and Physics from various Olympiads. OmniMath (Gao et al., 2024a) adds 4,238 competition-level mathematics problems. We also include 2,385 problems from AGIEval (Zhong et al., 2023), which features questions from standardized tests like SAT and LSAT, covering English, Law, and Logic. We refer to Table 6 in §B for our other sources.

New datasets in quantitative reasoning. To complement these existing datasets, we create two original datasets. s1-prob consists of 182 questions from the probability section of Stanford University’s Statistics Department’s PhD Qualifying Exams (https://statistics.stanford.edu), accompanied by handwritten solutions that cover difficult proofs. The probability qualifying exam is held yearly and requires professional-level mathematical problem-solving. s1-teasers comprises 23 challenging brain-teasers commonly used in interview questions for quantitative trading positions. Each sample consists of a problem and solution taken from PuzzledQuant (https://www.puzzledquant.com/). We only take examples with the highest difficulty level ("Hard").

For each question, we generate a reasoning trace and solution using the Google Gemini Flash Thinking API (Google, 2024), extracting its reasoning trace and response. This yields 59K triplets of a question, generated reasoning trace, and generated solution. Examples from our dataset are in §C.2. We decontaminate all samples against our evaluation questions (MATH500, GPQA Diamond, AIME24; §B.5) using 8-grams and deduplicate the data.
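The 8-gram decontamination and deduplication step can be illustrated with a minimal sketch. It assumes word-level 8-grams on lowercased, whitespace-split text and exact-match deduplication; the actual tokenization and matching rules are given in §B.5 and may differ. The function names `ngrams` and `decontaminate` are hypothetical.

```python
def ngrams(text, n=8):
    """Return the set of word-level n-grams in `text` (lowercased, whitespace-split)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def decontaminate(train_questions, eval_questions, n=8):
    """Drop training questions that share an n-gram with any evaluation question,
    then drop exact duplicates (after case and whitespace normalisation)."""
    eval_grams = set()
    for question in eval_questions:
        eval_grams |= ngrams(question, n)

    kept, seen = [], set()
    for question in train_questions:
        if ngrams(question, n) & eval_grams:
            continue  # overlaps an evaluation question: treat as contaminated
        key = " ".join(question.lower().split())
        if key in seen:
            continue  # duplicate of a question we already kept
        seen.add(key)
        kept.append(question)
    return kept
```

Note that under this assumption a question shorter than eight words has no 8-grams and is therefore never flagged as contaminated.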

2.2. Final selection of 1K samples

We could directly train on our pool of 59K questions; however, our goal is to find the simplest approach with minimal resources. Thus, we go through three stages of filtering to arrive at a minimal set of 1,000 samples relying on our three guiding data principles: Quality, Difficulty, and Diversity.

Quality. We first remove any questions where we ran into any API errors, reducing our dataset to 54,116 samples. Next, we filter out low-quality examples by checking if they contain any string patterns with formatting issues, such as ASCII art diagrams, non-existent image references, or inconsistent question numbering, reducing our dataset to 51,581 examples. From this pool, we identify 384 samples for our final 1,000 samples from datasets that we perceive as high-quality and not in need of further filtering (see §B.4 for details).

Figure 2. s1K and s1-32B. (left) s1K is a dataset of 1,000 high-quality, diverse, and difficult questions with reasoning traces. (right) s1-32B, a 32B parameter model finetuned on s1K is on the sample-efficiency frontier. See Table 1 for details on other models.

Difficulty. For difficulty, we use two indicators: model performance and reasoning trace length. We evaluate two models on each question: Qwen2.5-7B-Instruct and Qwen2.5-32B-Instruct (Qwen et al., 2024), with correctness assessed by Claude 3.5 Sonnet comparing each attempt against the reference solution (see §B.3 for the grading protocol). We measure the token length of each reasoning trace to indicate problem difficulty using the Qwen2.5 tokenizer. This relies on the assumption that more difficult problems require more thinking tokens. Based on the grading, we remove questions that either Qwen2.5-7B-Instruct or Qwen2.5-32B-Instruct can solve correctly and thus may be too easy. By using two models we reduce the likelihood of an easy sample slipping through our filtering due to a rare mistake on an easy question of one of the models. This brings our total samples down to 24,496, setting the stage for the next round of subsampling based on diversity. While filtering with these two models may be optimized for our setup as we will also use Qwen2.5-32B-Instruct as our model to finetune, the idea of model-based filtering generalizes to other setups.
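The model-based difficulty filter amounts to keeping a question only if neither model solves it. A minimal sketch, assuming the grader verdicts have already been computed and stored per sample; the `Sample` record and its field names are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Sample:
    question: str
    trace_tokens: int    # reasoning trace length in tokens (second difficulty indicator)
    solved_by_7b: bool   # grader verdict for the smaller model's attempt
    solved_by_32b: bool  # grader verdict for the larger model's attempt


def filter_difficult(samples):
    """Keep only the questions that neither model answered correctly."""
    return [s for s in samples if not (s.solved_by_7b or s.solved_by_32b)]
```

Requiring both models to fail is what guards against an easy question surviving because one model happened to slip on it.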

Diversity. To quantify diversity, we classify each question into specific domains using Claude 3.5 Sonnet based on the Mathematics Subject Classification (MSC) system (e.g., geometry, dynamical systems, real analysis, etc.) from the American Mathematical Society. The taxonomy focuses on topics in mathematics but also includes other sciences such as biology, physics, and economics. To select our final examples from the pool of 24,496 questions, we first choose one domain uniformly at random. Then, we sample one problem from this domain according to a distribution that favors longer reasoning traces (see §B.4 for details) as motivated in Difficulty. We repeat this process until we have 1,000 total samples.
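The domain-then-problem sampling loop can be sketched as follows. The exact length-favoring distribution is specified in §B.4; this sketch simply assumes weights proportional to trace length (which must be positive) and sampling without replacement. The function name `select_diverse` and the tuple layout are hypothetical.

```python
import random
from collections import defaultdict


def select_diverse(pool, k, rng=None):
    """pool: list of (question, domain, trace_length) tuples. Returns up to k tuples."""
    rng = rng or random.Random(0)
    by_domain = defaultdict(list)
    for item in pool:
        by_domain[item[1]].append(item)

    selected = []
    while len(selected) < k and by_domain:
        # Step 1: choose one of the remaining domains uniformly at random.
        domain = rng.choice(sorted(by_domain))
        candidates = by_domain[domain]
        # Step 2: sample one problem, favoring longer traces.
        # Assumption: weight proportional to trace length, not the paper's exact scheme.
        weights = [length for _, _, length in candidates]
        pick = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        selected.append(candidates.pop(pick))
        if not candidates:
            del by_domain[domain]  # this domain is exhausted
    return selected
```

Because the domain is drawn uniformly before the problem, small domains are over-represented relative to their share of the pool, which is the intended effect of the diversity stage.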

This three-stage process yields a dataset spanning 50 different domains (see Table 5). In §5.1, we will show that using our three criteria in combination is important, as only relying on quality, diversity, or difficulty in isolation leads to worse datasets. Examples from our dataset are in §C.2.

3. Test-time scaling

3.1. Method

We classify test-time scaling methods into 1) Sequential, where later computations depend on earlier ones (e.g., a long reasoning trace), and 2) Parallel, where computations run independently (e.g., majority voting) (Snell et al., 2024; Brown et al., 2024). We focus on sequential scaling, as intuitively we believe it should scale better: later computations can build on intermediate results, allowing for deeper reasoning and iterative refinement. We propose new sequential scaling methods and ways to benchmark them.

Budget forcing. We propose a simple decoding-time intervention by forcing a maximum and/or minimum number of thinking tokens at test time. Specifically, we enforce a maximum token count by simply appending the end-of-thinking token delimiter and “Final Answer:” to exit the thinking stage early and make the model provide its current best answer. To enforce a minimum, we suppress the generation of the end-of-thinking token delimiter and optionally append the string “Wait” to the model’s current reasoning trace to encourage the model to reflect on its current generation. Figure 3 contains an example of how this simple approach can lead the model to arrive at a better answer.

Question: How many 'r's are in "raspberry"?

Reasoning trace: Let's break down the process of counting the letter 'r' in the word "raspberry"...

- First letter: 'r' - This is an 'r', count = 1.

- Second letter: 'a' - Not an 'r', count remains 1.

- Sixth letter: 'e' - Not an 'r', count remains 1.

- Seventh letter: 'r' - This is an 'r', count = 2.

- Eighth letter: 'y' - Not an 'r', count remains 2...

The number of 'r's in "raspberry" is 2. Wait, let's re-read the question carefully. It asks "How many 'r's are in raspberry?".

r - a - s - p - b - e - r - r - y...

- First 'r'...

- Second 'r'...

- Third 'r'... count = 3...

My initial answer of 2 was incorrect due to a quick reading of the word. Final Answer: The final answer is 3
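The two halves of budget forcing can be illustrated with a toy decoding loop. This is a sketch under stated assumptions, not the released implementation: tokens are plain strings, the delimiter `<|end_think|>` is a hypothetical placeholder for the model's real end-of-thinking token, and `scripted_model` is a stand-in that replays a fixed script instead of calling a language model.

```python
END_THINK = "<|end_think|>"  # hypothetical delimiter; the real one depends on the chat template


def budget_forced_thinking(next_token, prompt, max_tokens, num_waits=0):
    """Run a toy thinking loop under budget forcing.

    next_token: callable mapping the text so far to the next token (a string).
    max_tokens: upper bound on thinking tokens; reaching it forces thinking to end.
    num_waits:  how many times to suppress END_THINK and append "Wait" instead.
    Returns (text, thinking_tokens_used).
    """
    text, used, waits_left = prompt, 0, num_waits
    stopped_naturally = False
    while used < max_tokens:
        token = next_token(text)
        if token == END_THINK:
            if waits_left == 0:
                stopped_naturally = True  # the model is allowed to stop thinking
                break
            token = "Wait"                # suppress the delimiter, extend the trace
            waits_left -= 1
        text += " " + token
        used += 1
    if stopped_naturally:
        return f"{text} {END_THINK}", used
    # Budget exhausted: close the thinking stage and ask for the current best answer.
    return f"{text} {END_THINK} Final Answer:", used


def scripted_model(script):
    """Toy stand-in for a language model: replays `script`, then emits END_THINK forever."""
    tokens = iter(script)
    return lambda _text: next(tokens, END_THINK)
```

With the script `["count", "is", "2", END_THINK, "recount", "gives", "3"]` and `num_waits=1`, the first end-of-thinking attempt is replaced by "Wait" and the trace continues; with `max_tokens=2` the loop is cut off after two tokens and "Final Answer:" is appended.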

Baselines. We benchmark budget forcing against: (I) Conditional length-control methods, which rely on telling the model in the prompt how long it should generate for. We group them by granularity into (a) Token-conditional control: We specify an upper bound of thinking tokens in the prompt; (b) Step-conditional control: We specify an upper bound of thinking steps, where each step is around 100 tokens; (c) Class-conditional control: We write two generic prompts that tell the model to either think for a short or long amount of time (see §D.1 for details). (II) Rejection sampling, which samples until a generation fits a predetermined compute budget. This oracle captures the posterior over responses conditioned on their length.
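The rejection sampling baseline reduces to a retry loop. A minimal sketch, assuming generations are strings whose length is counted in whitespace-separated tokens and that a cap on attempts is acceptable; `sample_fn` and `max_tries` are hypothetical names:

```python
def rejection_sample(sample_fn, max_tokens, max_tries=1000):
    """Draw generations until one fits the token budget.

    Returns (generation, tries), or (None, max_tries) if no draw fits the budget.
    """
    for tries in range(1, max_tries + 1):
        generation = sample_fn()
        if len(generation.split()) <= max_tokens:
            return generation, tries
    return None, max_tries
```

The number of tries grows as the budget shrinks, so the true compute spent by this baseline is larger than the length of the accepted generation suggests.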

3.2. Metrics

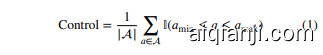

We establish a set of desiderata as evaluation metrics to measure test-time scaling across methods. Importantly, we do not only care about the accuracy a method can achieve but also its controllability and test-time scaling slope. For each method we consider, we run a set of evaluations $a \in \mathcal{A}$ varying test-time compute on a fixed benchmark, e.g. AIME24. This produces a piece-wise linear function $f$ with compute as the x-axis measured in thinking tokens and accuracy as the y-axis (see Figure 1, where the rightmost dot for AIME24 corresponds to $f(7320) = 57\%$). We measure three metrics:

Control is the fraction of evaluation runs whose compute falls within the pre-specified bounds:

$$\text{Control} = \frac{1}{|\mathcal{A}|} \sum_{a \in \mathcal{A}} \mathbb{I}(a_{\min} \le a \le a_{\max})$$

where $a_{\min}, a_{\max}$ refer to a pre-specified minimum and maximum amount of test-time compute; in our case thinking tokens. We usually only constrain $a_{\max}$. As tokens generated correspond to the amount of test-time compute spent, this metric measures the extent to which a method allows controllability over the use of that test-time compute. We report it as a percentage with 100% being perfect control.

Performance is simply the maximum performance the method achieves on the benchmark. A method with monotonically increasing scaling achieves 100% performance on any benchmark in the limit. However, the methods we investigate eventually flatten out or further scaling fails due to control or context window limitations.
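The three desiderata (control, scaling slope, performance) can be computed from a handful of evaluation runs. The sketch below rests on assumptions: control is taken as the fraction of runs whose thinking-token count falls within the bounds, and scaling is taken as the average slope of $f$ over all pairs of evaluation points, which is one plausible reading of "test-time scaling slope". The function names are hypothetical.

```python
from itertools import combinations


def control(token_counts, a_max, a_min=0):
    """Fraction of runs whose thinking-token count lies in [a_min, a_max]."""
    return sum(a_min <= a <= a_max for a in token_counts) / len(token_counts)


def scaling(points):
    """Average slope over all pairs of (compute, accuracy) points.

    Assumed definition; needs at least two points with distinct compute.
    """
    pts = sorted(points)
    slopes = [(fb - fa) / (b - a) for (a, fa), (b, fb) in combinations(pts, 2) if b != a]
    return sum(slopes) / len(slopes)


def performance(points):
    """Maximum accuracy reached at any compute level."""
    return max(acc for _, acc in points)
```

For illustrative points `[(1000, 0.30), (2000, 0.40), (4000, 0.50)]`, the pairwise slopes are all positive and `performance` returns 0.50; a method whose curve dips would see negative slopes pull the scaling average down.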

4. Results

4.1. Setup

Training. We perform supervised finetuning on Qwen2.5-32B-Instruct using s1K to obtain our model s1-32B using basic hyperparameters outlined in §C. Finetuning took 26 minutes on 16 NVIDIA H100 GPUs with PyTorch FSDP.
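As a quick check on the training cost, the wall-clock time and GPU count above convert to GPU hours as follows, which agrees with the 7 H100 GPU hours quoted for s1-32B in the data ablations and puts the 394 GPU hours of the 59K run at roughly 56 times that cost:

$$26\ \text{min} \times 16\ \text{GPUs} = 416\ \text{GPU-minutes} = \tfrac{416}{60}\ \text{GPU-hours} \approx 6.9\ \text{GPU-hours} \approx 7\ \text{H100 GPU hours}$$

$$\frac{394}{7} \approx 56$$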

Evaluation. We select three representative reasoning benchmarks widely used in the field: AIME24 (Mathematical Association of America, 2024) consists of 30 problems that were used in the 2024 American Invitational Mathematics Examination (AIME) held from Wednesday, January 31 – Thursday, February 1, 2024. AIME tests mathematical problem-solving with arithmetic, algebra, counting, geometry, number theory, probability, and other secondary school math topics. High-scoring high school students in the test are invited to participate in the United States of America Mathematics Olympiad (USAMO). All AIME answers are integers ranging from 000 to 999, inclusive. Some AIME problems rely on figures that we provide to our model using the vector graphics language Asymptote as it cannot take image inputs. MATH500 (Hendrycks et al., 2021) is a benchmark of competition math problems of varying difficulty. We evaluate on the same 500 samples selected by OpenAI in prior work (Lightman et al., 2023). GPQA Diamond (Rein et al., 2023) consists of 198 PhD-level science questions from Biology, Chemistry and Physics. Experts with PhDs in the corresponding domains only achieved 69.7% on GPQA Diamond (OpenAI, 2024). When we write “GPQA” in the context of evaluation in this work, we always refer to the Diamond subset. We build on the “lm-evaluation-harness” framework (Gao et al., 2021; Biderman et al., 2024).

Figure 4. Sequential and parallel test-time scaling. (a) Sequential scaling via budget forcing: Budget forcing shows clear scaling trends and extrapolates to some extent. For the three rightmost dots, we prevent the model from stopping its thinking 2/4/6 times, each time appending “Wait” to its current reasoning trace. (b) Parallel scaling via majority voting: For Qwen2.5-32B-Instruct we perform 64 evaluations for each sample with a temperature of 1 and visualize the performance when majority voting across 2, 4, 8, 16, 32, and 64 of these.

Other models. We benchmark s1-32B against: OpenAI o1 series (OpenAI, 2024), which are closed-source models that popularized the idea of test-time scaling; DeepSeek r1 series (Team, 2024a), which are open-weight reasoning models with up to o1-level performance, concurrently released to ours; Qwen’s QwQ-32B-preview (Team, 2024b), a 32B open-weight reasoning model without disclosed methodology; Sky-T1-32B-Preview (Team, 2025) and Bespoke-32B (Labs, 2025), which are open models with open reasoning data distilled from QwQ-32B-preview and r1; Google Gemini 2.0 Flash Thinking Experimental (Google, 2024), the API that we distill from. As it has no official evaluation scores, we use the Gemini API to benchmark it ourselves. However, the “recitation error” of the Gemini API makes evaluation challenging. We circumvent this by manually inserting all 30 AIME24 questions in its web interface where the error does not appear. However, we leave out MATH500 (500 questions) and GPQA Diamond (198 questions), thus they are N.A. in Table 1. Our model, s1-32B, is fully open including weights, reasoning data, and code.

Table 1. s1-32B is an open and sample-efficient reasoning model. We evaluate s1-32B, Qwen, and Gemini (some entries are unknown (N.A.), see §4). Other results are from the respective reports (Qwen et al., 2024; Team, 2024b; OpenAI, 2024; DeepSeek-AI et al., 2025; Labs, 2025; Team, 2025). # ex. = number of examples used for reasoning finetuning; BF = budget forcing.

| Model | # ex. | AIME 2024 | MATH 500 | GPQA Diamond |
|---|---|---|---|---|
| API only | | | | |
| o1-preview | N.A. | 44.6 | 85.5 | 73.3 |
| o1-mini | N.A. | 70.0 | 90.0 | 60.0 |
| o1 | N.A. | 74.4 | 94.8 | 77.3 |
| Gemini 2.0 Flash Think. | N.A. | 60.0 | N.A. | N.A. |
| Open Weights | | | | |
| Qwen2.5-32B-Instruct | N.A. | 26.7 | 84.0 | 49.0 |
| QwQ-32B | N.A. | 50.0 | 90.6 | 65.2 |
| r1 | >800K | 79.8 | 97.3 | 71.5 |
| r1-distill | 800K | 72.6 | 94.3 | 62.1 |
| Open Weights and Open Data | | | | |
| Sky-T1 | 17K | 43.3 | 82.4 | 56.8 |
| Bespoke-32B | 17K | 63.3 | 93.0 | 58.1 |
| s1 w/o BF | 1K | 50.0 | 92.6 | 56.6 |
| s1-32B | 1K | 56.7 | 93.0 | 59.6 |

4.2. Performance

Test-time scaling. Figure 1 shows that the performance of s1-32B with budget forcing scales with more test-time compute. In Figure 4 (left), we expand the plot from Figure 1 (middle), showing that while we can improve AIME24 performance using our budget forcing technique (§3) and more test-time compute, performance eventually flattens out once we suppress the end-of-thinking delimiter six times. Suppressing the end-of-thinking token delimiter too often can lead the model into repetitive loops instead of continued reasoning. In Figure 4 (right), we show that after training Qwen2.5-32B-Instruct on our 1,000 samples to produce s1-32B and equipping it with the simple budget forcing technique, it operates in a different scaling paradigm. Scaling test-time compute on the base model via majority voting cannot catch up with the performance of s1-32B, which validates our intuition from §3 that sequential scaling is more effective than parallel. We provide example generations of s1-32B in Figure 5.
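The parallel baseline, majority voting over k sampled answers, can be sketched in a few lines. This assumes answers have already been extracted and normalised to comparable strings; the function names are hypothetical, and ties are broken in favor of the answer seen first.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent answer; ties go to the answer encountered first."""
    return Counter(answers).most_common(1)[0][0]


def accuracy_at_k(samples_per_question, references, k):
    """Accuracy when voting over the first k sampled answers of each question."""
    correct = sum(
        majority_vote(samples[:k]) == ref
        for samples, ref in zip(samples_per_question, references)
    )
    return correct / len(references)
```

Sweeping `k` over 2, 4, 8, 16, 32, and 64 with 64 samples per question gives the kind of curve plotted for the base model, where each additional vote is independent of the others, in contrast to sequential scaling where later tokens build on earlier ones.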

Sample-efficiency. In Figure 2 (right) and Table 1 we compare s1-32B with other models. We find that s1-32B is the most sample-efficient open data reasoning model. It performs significantly better than our base model (Qwen2.5-32B-Instruct) despite just training it on an additional 1,000 samples. The concurrently released r1-32B shows stronger performance than s1-32B while also only using SFT (DeepSeek-AI et al., 2025). However, it is trained on 800× more reasoning samples. It is an open question whether one can achieve their performance with just 1,000 samples. Finally, our model nearly matches Gemini 2.0 Thinking on AIME24. As s1-32B is distilled from Gemini 2.0, this shows our distillation procedure was likely effective.

Table 2. s1K data ablations. We budget force (BF) a maximum of around 30,000 thinking tokens for all scores in this table. This performs slightly better than the scores without BF (Table 1) as it allows the model to finish with a best guess when stuck in an infinite loop. We report 95% paired bootstrap confidence intervals for differences relative to the s1K model using 10,000 bootstrap samples. E.g., the interval [-13%, 20%] means that, with 95% confidence, the true difference between 59K-full and s1K is between -13% and +20%. If the entire interval is negative, e.g. [-27%, -3%], we can confidently say that the performance is worse than s1K.

| Model | AIME 2024 | MATH 500 | GPQA Diamond |
|---|---|---|---|
| 1K-random | 36.7 [-26.7%, -3.3%] | 90.6 [-4.8%, 0.0%] | 52.0 [-12.6%, 2.5%] |
| 1K-diverse | 26.7 [-40.0%, -10.0%] | 91.2 [-4.0%, 0.2%] | 54.6 [-10.1%, 5.1%] |
| 1K-longest | 33.3 [-36.7%, 0.0%] | 90.4 [-5.0%, -0.2%] | 59.6 [-5.1%, 10.1%] |
| 59K-full | 53.3 [-13.3%, 20.0%] | 92.8 [-2.6%, 2.2%] | 58.1 [-6.6%, 8.6%] |
| s1K | 50.0 | 93.0 | 57.6 |
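The paired bootstrap intervals reported in Table 2 can be approximated with the sketch below. It assumes per-question 0/1 correctness for both models on the same questions and uses the percentile method; the exact resampling details behind the table are not stated here, so treat the function `paired_bootstrap_ci` and its parameters as hypothetical.

```python
import random


def paired_bootstrap_ci(correct_a, correct_b, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile CI for accuracy(a) - accuracy(b).

    correct_a, correct_b: per-question 0/1 scores on the same questions.
    Questions are resampled with replacement, keeping each pair together.
    """
    assert len(correct_a) == len(correct_b)
    rng = random.Random(seed)
    n = len(correct_a)
    diffs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(sum(correct_a[i] - correct_b[i] for i in idx) / n)
    diffs.sort()
    lo = diffs[int(round(alpha / 2 * (n_boot - 1)))]
    hi = diffs[int(round((1 - alpha / 2) * (n_boot - 1)))]
    return lo, hi
```

Resampling the same question indices for both models is what makes the bootstrap paired: questions that both models get right or wrong contribute zero to every resampled difference, which narrows the interval compared with resampling each model independently. On a 30-question benchmark such as AIME24 the intervals are still wide, as the table shows.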

samples, a superset of all the 1K-sample versions. This leads to a strong model but uses much more resources. To finetune on 59K samples, we use $394;\mathrm{Hl}00$ GPU hours while s1-32B only required 7 H100 GPU hours. Moreover, relying only on s1K is extremely competitive as shown in $\S2$ . Overall, combining all three criteria – Quality, Difficulty, Diversity – via our methodology in $\S2$ is key for sampleefficient reasoning training.

样本,所有1K样本版本的超集。这导致了一个强大的模型,但使用了更多的资源。为了在59K样本上进行微调,我们使用了$394;\mathrm{Hl}00$的GPU小时,而s1-32B只需要7个H100 GPU小时。此外,仅依赖s1K也极具竞争力,如$\S2$所示。总体而言,通过我们在$\S2$中的方法论,结合质量、难度和多样性这三个标准,是高效推理训练的关键。

5. Ablations

5. 消融实验

5.1. Data Quantity, Diversity, and Difficulty

5.1 数据量、多样性与难度

In $\S2$ we outlined our three guiding principles in curating s1K: Quality, Difficulty, and Diversity. Here we test the importance of combining them and the overall efficacy of our selection.

Only Quality (1K-random): After obtaining our high-quality reasoning chains from Gemini, we select 1,000 samples at random, not relying on our difficulty and diversity filtering at all. Table 2 shows this approach performs much worse than s1K across all benchmarks.

Only Diversity (1K-diverse): For this dataset, we sample uniformly across domains to maximize diversity, disregarding any notion of difficulty. This approach also leads to poor performance, similar to 1K-random.

Only Difficulty (1K-longest): Here we rely on one of our difficulty indicators introduced in $\S2$ by selecting the 1,000 samples with the longest reasoning traces. This approach significantly boosts GPQA performance but overall still falls short of using s1K.

Maximize Quantity: Finally, we compare with just training on all of our 59K

在 $\S2$ 中,我们概述了筛选s1K的三大指导原则:质量、难度和多样性。在此,我们将测试结合这些原则的重要性以及我们筛选的整体效果。仅有质量 (1K-random):在从Gemini获取高质量推理链后,我们随机选择1,000个样本;完全不依赖我们的难度和多样性筛选。表2显示,这种方法在所有基准测试中的表现都比s1K差得多。仅有多样性 (1K-diverse):对于这个数据集,我们在各个领域中均匀采样,以最大化多样性,忽略任何难度概念。这种方法的表现也较差,与1K-random类似。仅有难度 (1K-longest):在这里,我们依赖于 $\S2$ 中引入的一个难度指标,选择推理链最长的1,000个样本。这种方法显著提升了GPQA的表现,但总体上仍然不及使用s1K。最大化数量:最后,我们与仅在我们的59K数据集上训练的结果进行了比较。

5.2. Test-time scaling methods

5.2. 测试时缩放方法

Table 3. Ablations on methods to scale test-time compute on AIME24. $|\mathcal{A}|$ refers to the number of evaluation runs used to estimate the properties; thus a higher value indicates more robustness. Bold indicates our chosen method and the best values. BF $=$ budget forcing, TCC/SCC/CCC $=$ token/step/class-conditional control, RS $=$ rejection sampling.

表 3: 在 AIME24 上扩展测试时计算的方法消融实验。$|\mathcal{A}|$ 指的是用于估计这些属性的评估运行次数;因此,较高的值表示更强的鲁棒性。粗体表示我们选择的方法和最佳值。BF $=$ 预算强制, TCC/SCC/CCC $=$ token/step/class-conditional 控制, RS $=$ 拒绝采样。

| Method | Control | Scaling | Performance | $\lvert\mathcal{A}\rvert$ |
|---|---|---|---|---|
| BF | 100% | 15 | 56.7 | 5 |
| TCC | 40% | -24 | 40.0 | 5 |
| TCC + BF | 100% | 13 | 40.0 | 5 |
| SCC | 9% | 3 | 36.7 | 5 |
| SCC + BF | 100% | 6 | 36.7 | 5 |
| CCC | 50% | 25 | 36.7 | 2 |
| RS | 100% | -35 | 40.0 | 5 |

[AIME24]

Alice and Bob play the following game. A stack of $n$ tokens lies before them. The players take turns with Alice going first. On each turn, the player removes either 1 token or 4 tokens from the stack. Whoever removes the last token wins. Find the number of positive integers $n$ less than or equal to 2024 for which there exists a strategy for Bob that guarantees that Bob will win the game regardless of Alice’s play.

Alice 和 Bob 玩以下游戏。他们面前有一堆 $n$ 个 Token。玩家轮流进行,Alice 先手。在每轮中,玩家从堆中移除 1 个或 4 个 Token。移除最后一个 Token 的玩家获胜。找出所有小于等于 2024 的正整数 $n$ 的个数,使得 Bob 存在一种策略,可以保证无论 Alice 如何操作,Bob 都能赢得游戏。

The total number of positive integers $n\leq2024$ that are losing positions is $404+405=\boxed{809}$.

不超过 2024 的正整数 $n$ 中,处于必败位置的总数为 $404+405=\boxed{809}$。

[MATH500]

Find the projection of $a$ onto $b=\binom{2}{6}$ if $a\cdot b=8$.

求 $a$ 在 $b=\binom{2}{6}$ 上的投影,已知 $a \cdot b=8$。

The projection of vector $\mathbf{a}$ onto vector $\mathbf{b}$ is given by the formula:

向量 $\mathbf{a}$ 在向量 $\mathbf{b}$ 上的投影由公式给出:

[GPQA]

A quantum mechanical particle of mass $m$ moves in two dimensions in the following potential, as a function of the polar coordinates $(r,\theta)$ :

质量为 $m$ 的量子力学粒子在二维空间中运动,其势能是极坐标 $(r,\theta)$ 的如下函数:

Find the energy spectrum. Hint: Write the potential in Cartesian coordinates.

求能量谱。提示:将势能用笛卡尔坐标表示。

Budget forcing In Table 3 we compare the test-time scaling methods we have introduced in $\S3$ . Overall, we find that budget forcing provides perfect control, good scaling, and leads to our best AIME24 score. Thus, this is the method we use for s1-32B in Figure 1 and in $\S4$ . In Table 4, we compare different strings for extrapolating performance. We find that “Wait” generally gives the best performance.

预算强制

在表 3 中,我们比较了在 $\S3$ 中介绍的测试时间扩展方法。总体上,我们发现预算强制提供了完美的控制、良好的扩展,并获得了最佳的 AIME24 分数。因此,这是我们在图 1 和 $\S4$ 中用于 s1-32B 的方法。在表 4 中,我们比较了用于外推性能的不同字符串。我们发现“Wait”通常能提供最佳性能。
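Budget forcing as described here can be sketched in a few lines. The sketch below is an illustration under stated assumptions, not the authors' implementation: `generate` is a hypothetical callable standing in for a real decoding loop, tokens are plain strings, and the appended string counts as one token.

```python
from typing import Callable, List, Tuple

# Hypothetical interface (an assumption for this sketch): given the context so
# far and a token allowance, return the new thinking tokens and whether the
# model tried to emit its end-of-thinking delimiter.
GenerateFn = Callable[[List[str], int], Tuple[List[str], bool]]


def budget_force(
    generate: GenerateFn,
    prompt: List[str],
    max_thinking_tokens: int,
    num_ignore: int = 0,
    continue_string: str = "Wait",
) -> List[str]:
    """Return a thinking trace that respects a maximum and a minimum budget.

    Maximum: thinking is cut off once `max_thinking_tokens` is reached.
    Minimum: the end-of-thinking attempt is suppressed up to `num_ignore`
    times, and `continue_string` is appended to encourage more reasoning.
    """
    thinking: List[str] = []
    ignores_left = num_ignore
    while True:
        remaining = max_thinking_tokens - len(thinking)
        if remaining <= 0:
            break  # budget exhausted: the caller ends thinking here
        new_tokens, wants_to_stop = generate(prompt + thinking, remaining)
        thinking.extend(new_tokens[:remaining])
        if not wants_to_stop or ignores_left == 0:
            break
        if len(thinking) >= max_thinking_tokens:
            break  # no room left to extend the trace
        thinking.append(continue_string)  # suppress the stop, nudge onward
        ignores_left -= 1
    return thinking


def toy_generate(context: List[str], max_new_tokens: int) -> Tuple[List[str], bool]:
    """Toy stand-in for a model: emits three tokens, then tries to stop."""
    tokens = ["step"] * min(3, max_new_tokens)
    return tokens, len(tokens) == 3


print(budget_force(toy_generate, ["question"], max_thinking_tokens=100, num_ignore=2))
```

With `num_ignore=2` this mirrors the "2x" settings compared in Table 4: the model's attempt to stop is ignored twice before the trace is allowed to end.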

Class-conditional control We provide benchmark scores for this method in $\S D.1$ and summarize three findings here: (1) Token-conditional control fails without budget forcing, as our model cannot reliably count tokens - even when trained to do so. (2) Under step-conditional control, the model generates a similar total number of tokens when given different step targets, as the model goes from few steps with many tokens per step, to many steps with few tokens in each step. Thus, the model learns to hack its way around the compute constraint, making the controllability of this method mediocre. (3) Class-conditional control can work - telling a model to simply think longer can increase its test-time compute and performance, which leads to good scaling in Table 3.

类别条件控制

我们在 $\S D.1$ 中提供了这种方法的基准分数,并在这里总结了三个发现:(1) 在没有预算强制的情况下,Token 条件控制失败,因为我们的模型无法可靠地计数 Token —— 即使经过训练也是如此。(2) 在步骤条件控制下,当给定不同的步骤目标时,模型生成的总 Token 数量相似,因为模型从每步生成多个 Token 的少步骤,转变为每步生成少量 Token 的多步骤。因此,模型学会了绕过计算约束,使得这种方法的控制能力一般。(3) 类别条件控制可以发挥作用 —— 告诉模型简单地思考更长时间可以增加其测试时间计算和性能,这导致了表 3 中的良好扩展。

Table 4. Budget forcing extrapolation ablations. We compare ignoring the end-of-thinking delimiter twice and appending none or various strings.

表 4: 预算强制外推消融实验。我们比较了两次忽略思考结束分隔符以及不附加或附加不同字符串的情况。

| Model | AIME 2024 | MATH 500 | GPQA Diamond |
|---|---|---|---|
| No extrapolation | 50.0 | 93.0 | 57.6 |
| 2x without string | 50.0 | 90.2 | 55.1 |
| 2x “Alternatively” | 50.0 | 92.2 | 59.6 |
| 2x “Hmm” | 50.0 | 93.0 | 59.6 |
| 2x “Wait” | 53.3 | 93.0 | 59.6 |

Rejection sampling Surprisingly, we find that simply sampling until the generation fits a specific length leads to an inverse scaling trend as depicted in Figure 6. In $\S\mathrm{D}.2$ we inspect a question, which was answered correctly by the model when rejection sampling for $\leq4000$ , but not for the $\leq8000$ token setting. In the $\leq4000$ setting the model directly jumps to the correct approach, while for the $\leq8000$ setting it backtracks a lot. We hypothesize that there is a correlation such that shorter generations tend to be the ones where the model was on the right track from the start, whereas longer ones tend to be ones where the model made mistakes and thus backtracks or questions itself. This leads to longer samples often being wrong when rejection sampling and thus the inverse scaling trend.

拒绝采样 (Rejection Sampling) 令人惊讶的是,我们发现,简单地通过采样直到生成的内容符合特定长度,会导致如图 6 所示的逆向缩放趋势。在 $\S\mathrm{D}.2$ 中,我们检查了一个问题,该问题在 $\leq4000$ 的拒绝采样设置下被模型正确回答,但在 $\leq8000$ 的 token 设置下却没有。在 $\leq4000$ 的设置中,模型直接跳到了正确的方法,而在 $\leq8000$ 的设置中,模型多次回溯。我们假设存在一种相关性,即较短的生成往往是模型从开始就走对了路,而较长的生成往往是模型犯了错误并因此回溯或自我质疑。这导致在拒绝采样时,较长的样本通常是错误的,从而导致了逆向缩放趋势。
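The rejection sampling baseline can be written as a short loop. This is a minimal sketch assuming a hypothetical zero-argument `sample` callable that returns one generation as a list of thinking tokens; the `max_tries` cap is an added safeguard, and the `<=` comparison follows the $\leq4000$ notation used in the text.

```python
from typing import Callable, List, Tuple


def rejection_sample(
    sample: Callable[[], List[str]],
    max_thinking_tokens: int,
    max_tries: int = 1000,
) -> Tuple[List[str], int]:
    """Draw generations until one fits the length budget.

    Returns the accepted generation and the number of tries it took.
    `sample` is a hypothetical stand-in for sampling from the model.
    """
    for tries in range(1, max_tries + 1):
        generation = sample()
        if len(generation) <= max_thinking_tokens:
            return generation, tries
    raise RuntimeError(f"no generation within budget after {max_tries} tries")


# Toy sampler that yields generations of 10, 7, and 3 tokens in turn.
lengths = iter([10, 7, 3])


def toy_sample() -> List[str]:
    return ["tok"] * next(lengths)


accepted, tries = rejection_sample(toy_sample, max_thinking_tokens=5)
print(len(accepted), tries)
```

Because acceptance depends only on length, the loop selects for short generations at small budgets, which is consistent with the hypothesis above that short traces are disproportionately those where the model was on the right track from the start.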

Figure 6. Rejection sampling on AIME24 with s1-32B. We sample with a temperature of 1 until all generations have less than (from left to right) 3500, 4000, 5000, 8000, and 16000 thinking tokens requiring an average of 655, 97, 8, 3, 2, and 1 tries per sample.

图 6: 在 AIME24 上使用 s1-32B 进行拒绝采样。我们以温度 1 进行采样,直到所有生成样本的思考 token 数少于(从左到右)3500、4000、5000、8000 和 16000,每个样本平均需要 655、97、8、3、2 和 1 次尝试。

6. Discussion and related work

6. 讨论与相关工作

6.1. Sample-efficient reasoning

6.1. 样本高效推理

Models There are a number of concurrent efforts to build models that replicate the performance of o1 (OpenAI, 2024). For example, DeepSeek-r1 and k1.5 (DeepSeek-AI et al., 2025; Team et al., 2025) are built with reinforcement learning methods, while others rely on SFT using tens of thousands of distilled examples (Team, 2025; Xu et al., 2025; Labs, 2025). We show that SFT on only 1,000 examples suffices to build a competitive reasoning model matching o1-preview and produces a model that lies on the Pareto frontier (Figure 2). Further, we introduce budget forcing, which combined with our reasoning model leads to the first reproduction of OpenAI’s test-time scaling curves (OpenAI, 2024). Why does supervised finetuning on just 1,000 samples lead to such performance gains? We hypothesize that the model is already exposed to large amounts of reasoning data during pretraining, which spans trillions of tokens. Thus, the ability to perform reasoning is already present in our model. Our sample-efficient finetuning stage just activates it, and we scale it further at test time with budget forcing. This is similar to the "Superficial Alignment Hypothesis" presented in LIMA (Zhou et al., 2023), where the authors find that 1,000 examples can be sufficient to align a model to adhere to user preferences.

模型

Benchmarks and methods To evaluate and push the limits of these models, increasingly challenging benchmarks have been introduced, such as Olympiad-level science competitions (He et al., 2024; Jain et al., 2024; Zhong et al., 2023) and others (Srivastava et al., 2023; Glazer et al., 2024; Su et al., 2024; Kim et al., 2024; Phan et al., 2025). To enhance models’ performance on reasoning-related tasks, researchers have pursued several strategies: Prior works have explored continuing training language models on specialized corpora related to mathematics and science (Azerbayev et al., 2023; Yang et al., 2024), sometimes even synthetically generated data (Yu et al., 2024). Others have developed training methodologies specifically aimed at reasoning performance (Zelikman et al., 2022; 2024; Luo et al., 2025; Yuan et al., 2025; Wu et al., 2024a). Another significant line of work focuses on prompting-based methods to elicit and improve reasoning abilities, including methods like Chain-of-Thought prompting (Wei et al., 2023; Yao et al., 2023a;b; Bi et al., 2023; Fu et al., 2023; Zhang et al., 2024b; Xiang et al., 2025; Hu et al., 2024). These combined efforts aim to advance the reasoning ability of language models, enabling them to handle more complex and abstract tasks effectively.

基准测试与方法:为了评估并突破这些模型的极限,人们引入了越来越具挑战性的基准测试,例如奥林匹克级别的科学竞赛 (He et al., 2024; Jain et al., 2024; Zhong et al., 2023) 以及其他测试 (Srivastava et al., 2023; Glazer et al., 2024; Su et al., 2024; Kim et al., 2024; Phan et al., 2025)。为了提升模型在推理相关任务中的表现,研究人员采取了多种策略:先前的工作探索了在专门与数学和科学相关的语料库上继续训练语言模型 (Azerbayev et al., 2023; Yang et al., 2024),有时甚至使用合成生成的数据 (Yu et al., 2024)。其他人则开发了专门针对推理性能的训练方法 (Zelikman et al., 2022; 2024; Luo et al., 2025; Yuan et al., 2025; Wu et al., 2024a)。另一项重要的工作关注基于提示的方法来激发和提升推理能力,包括 Chain-of-Thought 提示方法 (Wei et al., 2023; Yao et al., 2023a;b; Bi et al., 2023; Fu et al., 2023; Zhang et al., 2024b; Xiang et al., 2025; Hu et al., 2024)。这些努力共同旨在提升语言模型的推理能力,使它们能够有效地处理更复杂和抽象的任务。

6.2. Test-time scaling

6.2. 测试时缩放

Methods As we introduce in $\S3$ , we differentiate two methods to scale test-time compute: parallel and sequential. The former relies on multiple solution attempts generated in parallel and selecting the best outcome via specific criteria. These criteria include choosing the most frequent response for majority voting or the best response based on an external reward for Best-of-N (Brown et al., 2024; Irvine et al., 2023; Snell et al., 2024). Unlike repeated sampling, previous sequential scaling methods let the model generate solution attempts sequentially based on previous attempts, allowing it to refine each attempt based on previous outcomes (Snell et al., 2024; Hou et al., 2025; Lee et al., 2025). Tree-based search methods (Gandhi et al., 2024; Wu et al., 2024b) offer a hybrid approach between sequential and parallel scaling, such as Monte-Carlo Tree Search (MCTS) (Liu et al., 2024; Zhang et al., 2023; Zhou et al., 2024; Choi et al., 2023) and guided beam search (Xie et al., 2023). REBASE (Wu et al., 2024b) employs a process reward model to balance exploitation and pruning during tree search. Empirically, REBASE has been shown to outperform sampling-based methods and MCTS (Wu et al., 2024b). Reward models (Lightman et al., 2023; Wang et al., 2024b;c) play a key role in these methods. They come in two variants: outcome reward models and process reward models. Outcome reward models (Xin et al., 2024; Ankner et al., 2024) assign a score to complete solutions and are particularly useful in Best-of-N selection, while process reward models (Lightman et al., 2023; Wang et al., 2024b; Wu et al., 2024b) assess individual reasoning steps and are effective in guiding tree-based search methods.

方法

如我们在 $\S3$ 中所介绍的,我们区分了两种扩展测试时间计算的方法:并行和顺序。前者依赖于并行生成的多个解决方案尝试,并通过特定标准选择最佳结果。这些标准包括选择出现频率最高的响应作为多数表决,或基于外部奖励选择最佳响应(Best-of-N)(Brown et al., 2024; Irvine et al., 2023; Snell et al., 2024)。与重复采样不同,之前的顺序扩展方法允许模型基于先前的尝试顺序生成解决方案尝试,使其能够根据先前的成果逐步改进每次尝试(Snell et al., 2024; Hou et al., 2025; Lee et al., 2025)。基于树的搜索方法(Gandhi et al., 2024; Wu et al., 2024b)提供了一种介于顺序和并行扩展之间的混合方法,例如蒙特卡洛树搜索 (MCTS)(Liu et al., 2024; Zhang et al., 2023; Zhou et al., 2024; Choi et al., 2023)和引导束搜索(Xie et al., 2023)。REBASE(Wu et al., 2024b)采用过程奖励模型来平衡树搜索过程中的利用和剪枝。经验表明,REBASE 在性能上优于基于采样的方法和 MCTS(Wu et al., 2024b)。奖励模型(Lightman et al., 2023; Wang et al., 2024b;c)在这些方法中起着关键作用。它们有两种变体:结果奖励模型和过程奖励模型。结果奖励模型(Xin et al., 2024; Ankner et al., 2024)对完整解决方案进行评分,在 Best-of-N 选择中特别有用,而过程奖励模型(Lightman et al., 2023; Wang et al., 2024b; Wu et al., 2024b)则评估各个推理步骤,在指导基于树的搜索方法方面非常有效。

Limits to further test-time scaling We have shown that budget forcing allows extrapolating test-time compute in $\S4$, e.g., improving AIME24 performance from $50\%$ to $57\%$. However, it has two key limitations when scaling further: it eventually flattens out (Figure 4), and the context window of the underlying language model constrains it. Despite these constraints, our work shows test-time scaling across a wide range of accuracies (Figure 1), partly because scaling down test-time compute behaves predictably and does not suffer from these constraints.

进一步扩展测试时的限制

我们已经在 $\S4$ 中展示了预算强制允许外推测试时的计算,例如将 AIME24 的性能从 $50%$ 提高到 $57%$ 。然而,在进一步扩展时,它有两个关键限制:最终会趋于平稳(图 4),并且底层大语言模型的上下文窗口会限制它。尽管存在这些限制,我们的工作显示了在广泛准确性范围内的测试时扩展(图 1),部分原因是减少测试时的计算是可预测的,并且不会受到这些限制的影响。

Continuing test-time scaling will require approaches that can further extrapolate test-time compute. How can we get such extrapolation? There may be improvements to budget forcing such as rotating through different strings, not only “Wait”, or combining it with frequency penalties or higher temperature to avoid repetitive loops. An exciting direction for future work is also researching whether applying budget forcing to a reasoning model trained with reinforcement learning yields better extrapolation; or if RL allows for new ways of test-time scaling beyond budget forcing. Our work defines the right metrics (§3.2) – Control, Scaling, and Performance – to enable future research and progress on extrapolating test-time compute.

持续扩展测试时计算需要能够进一步外推测试时计算的方法。我们如何实现这种外推?预算强制 (budget forcing) 可能有改进方法,例如轮换不同的字符串,而不仅仅是“Wait”,或者将其与频率惩罚或更高温度结合以避免重复循环。未来工作的一个令人兴奋的方向是研究将预算强制应用于通过强化学习训练的推理模型是否会产生更好的外推效果;或者说,强化学习是否允许在预算强制之外实现新的测试时扩展方法。我们的工作定义了正确的指标 (§3.2),即控制、扩展和性能,以支持未来在测试时计算外推方面的研究和进展。

Figure 7. Scaling further with parallel scaling methods. All metrics averaged over the 30 questions in AIME24. Average thinking tokens for REBASE do not account for the additional compute from the reward model. For sequential scaling, we prompt the model to use up to (from left to right) 32, 64, 256, and 512 steps. For REBASE and majority voting we generate 16 parallel trajectories to aggregate across.

图 7: 使用并行扩展方法进一步扩展。所有指标均基于 AIME24 中的 30 个问题计算平均值。REBASE 的平均思考 Token 未包含奖励模型的额外计算。在顺序扩展中,我们提示模型使用最多(从左到右)32、64、256 和 512 步。对于 REBASE 和多数投票,我们生成 16 条并行轨迹进行聚合。

Parallel scaling as a solution Parallel scaling offers one solution to the limits of sequential scaling, so we augment our sequentially scaled model with two methods: (I) Majority voting: After generating $k$ solutions, the final solution is the most frequent one across generations; (II) Tree search via REBASE: We use the REBASE process reward model, which is initialized from LLaMA-34B and further finetuned on a synthetic process reward modeling dataset (Wu et al., 2024b). We then aggregate the solutions generated by REBASE via majority voting. As shown in Figure 7, augmenting our model with REBASE scales better than majority voting, and even sequential scaling in this scenario. However, REBASE requires an additional forward pass at each step for the reward model, adding some computation overhead. For sequential scaling, when prompted to use up to 512 steps, for 12 out of the 30 evaluation questions the model generates a response that exceeds the context window, leading to a large performance drop. Overall, we find that these parallel scaling methods complement sequential scaling and thus offer an avenue for scaling test-time compute even further, beyond fixed context windows.

并行扩展作为一种解决方案

并行扩展提供了一种解决顺序扩展限制的方案,因此我们通过两种方法增强我们的顺序扩展模型:

(I) 多数投票:在生成 $k$ 个解决方案后,最终解决方案是生成中出现频率最高的一个;

(II) 通过 REBASE 的树搜索:我们使用 REBASE 过程奖励模型,该模型从 LLaMA-34B 初始化,并在合成过程奖励建模数据集上进一步微调(Wu et al., 2024b)。然后我们通过多数投票聚合 REBASE 生成的解决方案。如图 7 所示,使用 REBASE 增强的模型比多数投票以及此场景中的顺序扩展表现更好。然而,REBASE 在每一步都需要额外的前向传递来评估奖励模型,这增加了一些计算开销。对于顺序扩展,当提示使用最多 512 步时,在 30 个评估问题中的 12 个问题中,模型生成的响应超出了上下文窗口,导致性能大幅下降。总体而言,我们发现这些并行扩展方法可以补充顺序扩展,因此它们为进一步扩展测试时计算提供了途径,甚至超越了固定上下文窗口的限制。
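Majority voting over $k$ generated solutions reduces to counting final answers. The sketch below assumes answers have already been extracted and normalized to comparable strings; the function name `majority_vote` and the tie-breaking rule (first answer seen wins) are illustrative choices, not details taken from the paper.

```python
from collections import Counter
from typing import List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer among k parallel generations.

    Ties are broken in favor of the answer that appeared first, which follows
    from Counter preserving insertion order for equal counts.
    """
    if not answers:
        raise ValueError("need at least one answer to vote on")
    return Counter(answers).most_common(1)[0][0]


# Four hypothetical final answers from parallel samples.
print(majority_vote(["809", "808", "809", "17"]))  # prints 809
```

The same aggregation step applies to the trajectories produced by REBASE, since the text states that those solutions are also combined via majority voting.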

Impact Statement

影响声明

Language models with strong reasoning capabilities have the potential to greatly enhance human productivity, from assisting in complex decision-making to driving scientific breakthroughs. However, recent advances in reasoning, such as OpenAI’s o1 and DeepSeek’s r1, lack transparency, limiting broader research progress. Our work aims to push the frontier of reasoning in a fully open manner, fostering innovation and collaboration to accelerate advancements that ultimately benefit society.

具备强大推理能力的语言模型有潜力大幅提升人类生产力,从辅助复杂决策到推动科学突破。然而,最近的推理进展,例如 OpenAI 的 o1 和 DeepSeek 的 r1,缺乏透明度,限制了更广泛的研究进程。我们的工作旨在以完全开放的方式推进推理的前沿,促进创新和合作,以加速最终造福社会的进步。

Acknowledgements

致谢

This work was partly conducted using the Stanford Marlowe GPU cluster (Kapfer et al., 2025) made possible by financial support from Stanford University. We thank Alexander M. Rush, Andrew Ilyas, Banghua Zhu, Chenglei Si, Chunting Zhou, John Yang, Ludwig Schmidt, Samy Jelassi, Tengyu Ma, Xuechen Li, Yu Sun and Yue Zhang for very constructive discussions.

本工作部分使用了由斯坦福大学提供资金支持的斯坦福 Marlowe GPU 集群 (Kapfer et al., 2025)。我们感谢 Alexander M. Rush、Andrew Ilyas、Banghua Zhu、Chenglei Si、Chunting Zhou、John Yang、Ludwig Schmidt、Samy Jelassi、Tengyu Ma、Xuechen Li、Yu Sun 和 Yue Zhang 进行的非常有建设性的讨论。

References

参考文献

Ankner, Z., Paul, M., Cui, B., Chang, J. D., and Ammanabrolu, P. Critique-out-loud reward models, 2024. URL https://arxiv.org/abs/2408.11791.

Ankner, Z., Paul, M., Cui, B., Chang, J. D., 和 Ammanabrolu, P. Critique-out-loud reward models, 2024. URL https://arxiv.org/abs/2408.11791.

Arora, D., Singh, H. G., and Mausam. Have llms advanced enough? a challenging problem solving benchmark for large language models, 2023. URL https://arxiv.org/abs/2305.15074.

Arora, D., Singh, H. G., and Mausam. 大语言模型是否已经足够先进?一个大语言模型的挑战性问题解决基准,2023. URL https://arxiv.org/abs/2305.15074.

Azerbayev, Z., Schoelkopf, H., Paster, K., Santos, M. D., McAleer, S., Jiang, A. Q., Deng, J., Biderman, S., and Welleck, S. Llemma: An open language model for mathematics, 2023.

Azerbayev, Z., Schoelkopf, H., Paster, K., Santos, M. D., McAleer, S., Jiang, A. Q., Deng, J., Biderman, S., and Welleck, S. Llemma: 一个开放的数学语言模型, 2023.

Bi, Z., Zhang, N., Jiang, Y., Deng, S., Zheng, G., and Chen, H. When do program-of-thoughts work for reasoning?, 2023. URL https://arxiv.org/abs/2308.15452.

Bi, Z., Zhang, N., Jiang, Y., Deng, S., Zheng, G., and Chen, H. 程序思维何时适用于推理?, 2023. URL https://arxiv.org/abs/2308.15452.

Biderman, S., Schoelkopf, H., Sutawika, L., Gao, L., Tow, J., Abbasi, B., Aji, A. F., Ammanamanchi, P. S., Black, S., Clive, J., DiPofi, A., Etxaniz, J., Fattori, B., Forde, J. Z., Foster, C., Hsu, J., Jaiswal, M., Lee, W. Y., Li, H., Lovering, C., Muennighoff, N., Pavlick, E., Phang, J., Skowron, A., Tan, S., Tang, X., Wang, K. A., Winata, G. I., Yvon, F., and Zou, A. Lessons from the trenches on reproducible evaluation of language models, 2024.

Biderman, S., Schoelkopf, H., Sutawika, L., Gao, L., Tow, J., Abbasi, B., Aji, A. F., Ammanamanchi, P. S., Black, S., Clive, J., DiPofi, A., Etxaniz, J., Fattori, B., Forde, J. Z., Foster, C., Hsu, J., Jaiswal, M., Lee, W. Y., Li, H., Lovering, C., Muennighoff, N., Pavlick, E., Phang, J., Skowron, A., Tan, S., Tang, X., Wang, K. A., Winata, G. I., Yvon, F., and Zou, A. 从实战中学习语言模型的可复现性评估,2024。

Brown, B., Juravsky, J., Ehrlich, R., Clark, R., Le, Q. V., Ré, C., and Mirhoseini, A. Large language monkeys: Scaling inference compute with repeated sampling, 2024. URL https://arxiv.org/abs/2407.21787.

Brown, B., Juravsky, J., Ehrlich, R., Clark, R., Le, Q. V., Ré, C., and Mirhoseini, A. 《大型语言猴子:通过重复采样扩展推理计算》,2024,URL https://arxiv.org/abs/2407.21787.

Cesista, F. L. Multimodal structured generation: Cvpr’s 2nd mmfm challenge technical report, 2024. URL https://arxiv.org/abs/2406.11403.

Cesista, F. L. 多模态结构化生成:CVPR 第 2 届 MMFM 挑战赛技术报告, 2024. URL https://arxiv.org/abs/2406.11403.

Chen, W., Yin, M., Ku, M., Lu, P., Wan, Y., Ma, X., Xu, J., Wang, X., and Xia, T. Theoremqa: A theorem-driven question answering dataset, 2023. URL https://arxiv.org/abs/2305.12524.

Chen, W., Yin, M., Ku, M., Lu, P., Wan, Y., Ma, X., Xu, J., Wang, X., and Xia, T. Theoremqa: A theorem-driven question answering dataset, 2023. URL https://arxiv.org/abs/2305.12524.

Choi, S., Fang, T., Wang, Z., and Song, Y. Kcts: Knowledge-constrained tree search decoding with token-level hallucination detection, 2023. URL https://arxiv.org/abs/2310.09044.

Choi, S., Fang, T., Wang, Z., and Song, Y. Kcts: 基于知识约束的树搜索解码与 Token 级幻觉检测, 2023. URL https://arxiv.org/abs/2310.09044.

DeepSeek-AI, Guo, D., Yang, D., Zhang, H., Song, J., Zhang, R., Xu, R., Zhu, Q., Ma, S., Wang, P., Bi, X., Zhang, X., Yu, X., Wu, Y., Wu, Z. F., Gou, Z., Shao, Z., Li, Z., Gao, Z., Liu, A., Xue, B., Wang, B., Wu, B., Feng, B., Lu, C., Zhao, C., Deng, C., Zhang, C., Ruan, C., Dai, D., Chen, D., Ji, D., Li, E., Lin, F., Dai, F., Luo, F., Hao, G., Chen, G., Li, G., Zhang, H., Bao, H., Xu, H., Wang, H., Ding, H., Xin, H., Gao, H., Qu, H., Li, H., Guo, J., Li, J., Wang, J., Chen, J., Yuan, J., Qiu, J., Li, J., Cai, J. L., Ni, J., Liang, J., Chen, J., Dong, K., Hu, K., Gao, K., Guan, K., Huang, K., Yu, K., Wang, L., Zhang, L., Zhao, L., Wang, L., Zhang, L., Xu, L., Xia, L., Zhang, M., Zhang, M., Tang, M., Li, M., Wang, M., Li, M., Tian, N., Huang, P., Zhang, P., Wang, Q., Chen, Q., Du, Q., Ge, R., Zhang, R., Pan, R., Wang, R., Chen, R. J., Jin, R. L., Chen, R., Lu, S., Zhou, S., Chen, S., Ye, S., Wang, S., Yu, S., Zhou, S., Pan, S., Li, S. S., Zhou, S., Wu, S., Ye, S., Yun, T., Pei, T., Sun, T., Wang, T., Zeng, W., Zhao, W., Liu, W., Liang, W., Gao, W., Yu, W., Zhang, W., Xiao, W. L., An, W., Liu, X., Wang, X., Chen, X., Nie, X., Cheng, X., Liu, X., Xie, X., Liu, X., Yang, X., Li, X., Su, X., Lin, X., Li, X. Q., Jin, X., Shen, X., Chen, X., Sun, X., Wang, X., Song, X., Zhou, X., Wang, X., Shan, X., Li, Y. K., Wang, Y. Q., Wei, Y. X., Zhang, Y., Xu, Y., Li, Y., Zhao, Y., Sun, Y., Wang, Y., Yu, Y., Zhang, Y., Shi, Y., Xiong, Y., He, Y., Piao, Y., Wang, Y., Tan, Y., Ma, Y., Liu, Y., Guo, Y., Ou, Y., Wang, Y., Gong, Y., Zou, Y., He, Y., Xiong, Y., Luo, Y., You, Y., Liu, Y., Zhou, Y., Zhu, Y. X., Xu, Y., Huang, Y., Li, Y., Zheng, Y., Zhu, Y., Ma, Y., Tang, Y., Zha, Y., Yan, Y., Ren, Z. Z., Ren, Z., Sha, Z., Fu, Z., Xu, Z., Xie, Z., Zhang, Z., Hao, Z., Ma, Z., Yan, Z., Wu, Z., Gu, Z., Zhu, Z., Liu, Z., Li, Z., Xie, Z., Song, Z., Pan, Z., Huang, Z., Xu, Z., Zhang, Z., and Zhang, Z. Deepseek-r1: Incentivizing reasoning capability in llms via reinforcement learning, 2025. URL https://arxiv.org/abs/2501.12948.

DeepSeek-AI, Guo, D., Yang, D., Zhang, H., Song, J., Zhang, R., Xu, R., Zhu, Q., Ma, S., Wang, P., Bi, X., Zhang, X., Yu, X., Wu, Y., Wu, Z. F., Gou, Z., Shao, Z., Li, Z., Gao, Z., Liu, A., Xue, B., Wang, B., Wu, B., Feng, B., Lu, C., Zhao, C., Deng, C., Zhang, C., Ruan, C., Dai, D., Chen, D., Ji, D., Li, E., Lin, F., Dai, F., Luo, F., Hao, G., Chen, G., Li, G., Zhang, H., Bao, H., Xu, H., Wang, H., Ding, H., Xin, H., Gao, H., Qu, H., Li, H., Guo, J., Li, J., Wang, J., Chen, J., Yuan, J., Qiu, J., Li, J., Cai, J. L., Ni, J., Liang, J., Chen, J., Dong, K., Hu, K., Gao, K., Guan, K., Huang, K., Yu, K., Wang, L., Zhang, L., Zhao, L., Wang, L., Zhang, L., Xu, L., Xia, L., Zhang, M., Zhang, M., Tang, M., Li, M., Wang, M., Li, M., Tian, N., Huang, P., Zhang, P., Wang, Q., Chen, Q., Du, Q., Ge, R., Zhang, R., Pan, R., Wang, R., Chen, R. J., Jin, R. L., Chen, R., Lu, S., Zhou, S., Chen, S., Ye, S., Wang, S., Yu, S., Zhou, S., Pan, S., Li, S. S., Zhou, S., Wu, S., Ye, S., Yun, T., Pei, T., Sun, T., Wang, T., Zeng, W., Zhao, W., Liu, W., Liang, W., Gao, W., Yu, W., Zhang, W., Xiao, W. L., An, W., Liu, X., Wang, X., Chen, X., Nie, X., Cheng, X., Liu, X., Xie, X., Liu, X., Yang, X., Li, X., Su, X., Lin, X., Li, X. Q., Jin, X., Shen, X., Chen, X., Sun, X., Wang, X., Song, X., Zhou, X., Wang, X., Shan, X., Li, Y. K., Wang, Y. Q., Wei, Y. X., Zhang, Y., Xu, Y., Li, Y., Zhao, Y., Sun, Y., Wang, Y., Yu, Y., Zhang, Y., Shi, Y., Xiong, Y., He, Y., Piao, Y., Wang, Y., Tan, Y., Ma, Y., Liu, Y., Guo, Y., Ou, Y., Wang, Y., Gong, Y., Zou, Y., He, Y., Xiong, Y., Luo, Y., You, Y., Liu, Y., Zhou, Y., Zhu, Y. X., Xu, Y., Huang, Y., Li, Y., Zheng, Y., Zhu, Y., Ma, Y., Tang, Y., Zha, Y., Yan, Y., Ren, Z. Z., Ren, Z., Sha, Z., Fu, Z., Xu, Z., Xie, Z., Zhang, Z., Hao, Z., Ma, Z., Yan, Z., Wu, Z., Gu, Z., Zhu, Z., Liu, Z., Li, Z., Xie, Z., Song, Z., Pan, Z., Huang, Z., Xu, Z., Zhang, Z., and Zhang, Z. Deepseek-r1: 通过强化学习增强大语言模型的推理能力, 2025. URL https://arxiv.org/abs/2501.12948.

Dubey, A., Jauhri, A., Pandey, A., Kadian, A., Al-Dahle, A., Letman, A., Mathur, A., Schelten, A., Yang, A., Fan, A., Goyal, A., Hartshorn, A., Yang, A., Mitra, A., Sravankumar, A., Korenev, A., Hinsvark, A., Rao, A., Zhang, A., Rodriguez, A., Gregerson, A., et al. The llama 3 herd of models, 2024. URL https://arxiv.org/abs/2407.21783.

Dubey, A., Jauhri, A., Pandey, A., Kadian, A., Al-Dahle, A., Letman, A., Mathur, A., Schelten, A., Yang, A., Fan, A., Goyal, A., Hartshorn, A., Yang, A., Mitra, A., Sravankumar, A., Korenev, A., Hinsvark, A., Rao, A., Zhang, A., Rodriguez, A., Gregerson, A., 等人. The llama 3 herd of models, 2024. URL https://arxiv.org/abs/2407.21783.

Fu, Y., Peng, H., Sabharwal, A., Clark, P., and Khot, T. Complexity-based prompting for multi-step reasoning, 2023. URL https://arxiv.org/abs/2210.00720.

Fu, Y., Peng, H., Sabharwal, A., Clark, P., and Khot, T. 基于复杂度的多步推理提示, 2023. URL https://arxiv.org/abs/2210.00720.

Gandhi, K., Lee, D., Grand, G., Liu, M., Cheng, W., Sharma, A., and Goodman, N. D. Stream of search (sos): Learning to search in language, 2024. URL https://arxiv.org/abs/2404.03683.

Gandhi, K., Lee, D., Grand, G., Liu, M., Cheng, W., Sharma, A., and Goodman, N. D. 搜索流(SOS): 在语言中学习搜索, 2024. URL https://arxiv.org/abs/2404.03683.

Gao, B., Song, F., Yang, Z., Cai, Z., Miao, Y., Dong, Q., Li, L., Ma, C., Chen, L., Xu, R., Tang, Z., Wang, B., Zan, D., Quan, S., Zhang, G., Sha, L., Zhang, Y., Ren, X., Liu, T., and Chang, B. Omni-math: A universal olympiad level mathematic benchmark for large language models, 2024a. URL https://arxiv.org/abs/2410.07985.

Gao, B., Song, F., Yang, Z., Cai, Z., Miao, Y., Dong, Q., Li, L., Ma, C., Chen, L., Xu, R., Tang, Z., Wang, B., Zan, D., Quan, S., Zhang, G., Sha, L., Zhang, Y., Ren, X., Liu, T., and Chang, B. Omni-math: 大语言模型的通用奥赛级别数学基准, 2024a. URL https://arxiv.org/abs/2410.07985.

Gao, L., Tow, J., Biderman, S., Black, S., DiPofi, A., Foster, C., Golding, L., Hsu, J., McDonell, K., Muennighoff, N., Phang, J., Reynolds, L., Tang, E., Thite, A., Wang, B., Wang, K., and Zou, A. A framework for few-shot language model evaluation, September 2021. URL doi.org/10.5281/zenodo.5371628.

Gao, L., Tow, J., Biderman, S., Black, S., DiPofi, A., Foster, C., Golding, L., Hsu, J., McDonell, K., Muennighoff, N., Phang, J., Reynolds, L., Tang, E., Thite, A., Wang, B., Wang, K., and Zou, A. 少样本语言模型评估框架, 2021 年 9 月. URL doi.org/10.5281/zenodo.5371628.

Gao, Z., Niu, B., He, X., Xu, H., Liu, H., Liu, A., Hu, X., and Wen, L. Interpretable contrastive monte carlo tree search reasoning, 2024b. URL https://arxiv.org/abs/2410.01707.

Gao, Z., Niu, B., He, X., Xu, H., Liu, H., Liu, A., Hu, X., and Wen, L. Interpretable contrastive monte carlo tree search reasoning, 2024b. URL https://arxiv.org/abs/2410.01707.

Glazer, E., Erdil, E., Besiroglu, T., Chicharro, D., Chen, E., Gunning, A., Olsson, C. F., Denain, J.-S., Ho, A., de Oliveira Santos, E., Järviniemi, O., Barnett, M., Sandler, R., Vrzala, M., Sevilla, J., Ren, Q., Pratt, E., Levine, L., Barkley, G., Stewart, N., Grechuk, B., Grechuk, T., Enugandla, S. V., and Wildon, M. Frontier math: A benchmark for evaluating advanced mathematical reasoning in ai, 2024. URL https://arxiv.org/abs/2411.04872.

Glazer, E., Erdil, E., Besiroglu, T., Chicharro, D., Chen, E., Gunning, A., Olsson, C. F., Denain, J.-S., Ho, A., de Oliveira Santos, E., Järviniemi, O., Barnett, M., Sandler, R., Vrzala, M., Sevilla, J., Ren, Q., Pratt, E., Levine, L., Barkley, G., Stewart, N., Grechuk, B., Grechuk, T., Enugandla, S. V., and Wildon, M. Frontier math: A benchmark for evaluating advanced mathematical reasoning in ai, 2024. URL https://arxiv.org/abs/2411.04872.

Google. Gemini 2.0 flash thinking mode (gemini-2.0-flash-thinking-exp-1219), December 2024. URL https://cloud.google.com/vertex-ai/generative-ai/docs/thinking-mode.

Google. Gemini 2.0 闪思模式 (gemini-2.0-flash-thinking-exp-1219), 2024 年 12 月. URL https://cloud.google.com/vertex-ai/generative-ai/docs/thinking-mode.

Groeneveld, D., Beltagy, I., Walsh, P., Bhagia, A., Kinney, R., Tafjord, O., Jha, A. H., Ivison, H., Magnusson, I., Wang, Y., Arora, S., Atkinson, D., Authur, R., Chandu, K. R., Cohan, A., Dumas, J., Elazar, Y., Gu, Y., Hessel, J., Khot, T., Merrill, W., Morrison, J., Muennighoff, N., Naik, A., Nam, C., Peters, M. E., Pyatkin, V., Ravichander, A., Schwenk, D., Shah, S., Smith, W., Strubell, E., Subramani, N., Wortsman, M., Dasigi, P., Lambert, N., Richardson, K., Zettlemoyer, L., Dodge, J., Lo, K., Soldaini, L., Smith, N. A., and Hajishirzi, H. Olmo: Accelerating the science of language models, 2024.

Groeneveld, D., Beltagy, I., Walsh, P., Bhagia, A., Kinney, R., Tafjord, O., Jha, A. H., Ivison, H., Magnusson, I., Wang, Y., Arora, S., Atkinson, D., Authur, R., Chandu, K. R., Cohan, A., Dumas, J., Elazar, Y., Gu, Y., Hessel, J., Khot, T., Merrill, W., Morrison, J., Muennighoff, N., Naik, A., Nam, C., Peters, M. E., Pyatkin, V., Ravichander, A., Schwenk, D., Shah, S., Smith, W., Strubell, E., Subramani, N., Wortsman, M., Dasigi, P., Lambert, N., Richardson, K., Zettlemoyer, L., Dodge, J., Lo, K., Soldaini, L., Smith, N. A., and Hajishirzi, H. Olmo:加速语言模型的科学研究,2024。

He, C., Luo, R., Bai, Y., Hu, S., Thai, Z. L., Shen, J., Hu, J., Han, X., Huang, Y., Zhang, Y., Liu, J., Qi, L., Liu, Z., and Sun, M. Olympiadbench: A challenging benchmark for promoting agi with olympiad-level bilingual multimodal scientific problems, 2024. URL https://arxiv.org/abs/2402.14008.

He, C., Luo, R., Bai, Y., Hu, S., Thai, Z. L., Shen, J., Hu, J., Han, X., Huang, Y., Zhang, Y., Liu, J., Qi, L., Liu, Z., and Sun, M. Olympiad 基准:一个具有挑战性的基准,旨在通过奥林匹克级别的双语多模态科学问题推动通用人工智能的发展,2024. URL https://arxiv.org/abs/2402.14008.

Hendrycks, D., Burns, C., Kadavath, S., Arora, A., Basart, S., Tang, E., Song, D., and Steinhardt, J. Measuring mathematical problem solving with the math dataset, 2021. URL https://arxiv.org/abs/2103.03874.

Hendrycks, D., Burns, C., Kadavath, S., Arora, A., Basart, S., Tang, E., Song, D., and Steinhardt, J. 使用数学数据集衡量数学问题解决能力, 2021. URL https://arxiv.org/abs/2103.03874.

Hoffmann, J., Borgeaud, S., Mensch, A., Buchatskaya, E., Cai, T., Rutherford, E., de Las Casas, D., Hendricks, L. A., Welbl, J., Clark, A., Hennigan, T., Noland, E., Millican, K., van den Driessche, G., Damoc, B., Guy, A., Osindero, S., Simonyan, K., Elsen, E., Rae, J. W., Vinyals, O., and Sifre, L. Training compute-optimal large language models, 2022. URL https://arxiv.org/abs/2203.15556.

Hou, Z., Lv, X., Lu, R., Zhang, J., Li, Y., Yao, Z., Li, J., Tang, J., and Dong, Y. Advancing language model reasoning through reinforcement learning and inference scaling, 2025. URL https://arxiv.org/abs/2501.11651.

Hu, Y., Shi, W., Fu, X., Roth, D., Ostendorf, M., Zettlemoyer, L., Smith, N. A., and Krishna, R. Visual sketchpad: Sketching as a visual chain of thought for multimodal language models, 2024. URL https://arxiv.org/abs/2406.09403.

Huang, Z., Wang, Z., Xia, S., Li, X., Zou, H., Xu, R., Fan, R.-Z., Ye, L., Chern, E., Ye, Y., Zhang, Y., Yang, Y., Wu, T., Wang, B., Sun, S., Xiao, Y., Li, Y., Zhou, F., Chern, S., Qin, Y., Ma, Y., Su, J., Liu, Y., Zheng, Y., Zhang, S., Lin, D., Qiao, Y., and Liu, P. Olympicarena: Benchmarking multi-discipline cognitive reasoning for superintelligent ai, 2024a. URL https://arxiv.org/abs/2406.12753.

Huang, Z., Zou, H., Li, X., Liu, Y., Zheng, Y., Chern, E., Xia, S., Qin, Y., Yuan, W., and Liu, P. O1 replication journey – part 2: Surpassing o1-preview through simple distillation, big progress or bitter lesson?, 2024b. URL https://arxiv.org/abs/2411.16489.

Huang, Z., Geng, G., Hua, S., Huang, Z., Zou, H., Zhang, S., Liu, P., and Zhang, X. O1 replication journey – part 3: Inference-time scaling for medical reasoning, 2025. URL https://arxiv.org/abs/2501.06458.

Irvine, R., Boubert, D., Raina, V., Liusie, A., Zhu, Z., Mudupalli, V., Korshuk, A., Liu, Z., Cremer, F., Assassi, V., Beauchamp, C.-C., Lu, X., Rialan, T., and Beauchamp, W. Rewarding chatbots for real-world engagement with millions of users, 2023. URL https://arxiv.org/abs/2303.06135.

Liu, J., Cui, L., Liu, H., Huang, D., Wang, Y., and Zhang, Y. Logiqa: A challenge dataset for machine reading comprehension with logical reasoning, 2020. URL https://arxiv.org/abs/2007.08124.

Liu, J., Cohen, A., Pasunuru, R., Choi, Y., Hajishirzi, H., and Celikyilmaz, A. Don’t throw away your value model! generating more preferable text with value-guided monte-carlo tree search decoding, 2024. URL https://arxiv.org/abs/2309.15028.

Loshchilov, I. and Hutter, F. Decoupled weight decay regularization, 2019.

Luo, H., Sun, Q., Xu, C., Zhao, P., Lou, J., Tao, C., Geng, X., Lin, Q., Chen, S., Tang, Y., and Zhang, D. Wizardmath: Empowering mathematical reasoning for large language models via reinforced evol-instruct, 2025. URL https://arxiv.org/abs/2308.09583.

Muennighoff, N., Soldaini, L., Groeneveld, D., Lo, K., Morrison, J., Min, S., Shi, W., Walsh, P., Tafjord, O., Lambert, N., Gu, Y., Arora, S., Bhagia, A., Schwenk, D., Wadden, D., Wettig, A., Hui, B., Dettmers, T., Kiela, D., Farhadi, A., Smith, N. A., Koh, P. W., Singh, A., and Hajishirzi, H. Olmoe: Open mixture-of-experts language models, 2024. URL https://arxiv.org/abs/2409.02060.

of America, M. A. Aime, February 2024. URL https://artofproblemsolving.com/wiki/index.php/AIME_Problems_and_Solutions/.

OpenAI. Learning to reason with llms, September 2024. URL https://openai.com/