Abstract


We present DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated for each token. To achieve efficient inference and cost-effective training, DeepSeek-V3 adopts the Multi-head Latent Attention (MLA) and DeepSeekMoE architectures, which were thoroughly validated in DeepSeek-V2. Furthermore, DeepSeek-V3 pioneers an auxiliary-loss-free strategy for load balancing and sets a multi-token prediction training objective for stronger performance. We pre-train DeepSeek-V3 on 14.8 trillion diverse and high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages to fully harness its capabilities. Comprehensive evaluations reveal that DeepSeek-V3 outperforms other open-source models and achieves performance comparable to leading closed-source models. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. In addition, its training process is remarkably stable: throughout the entire training process, we did not experience any irrecoverable loss spikes or perform any rollbacks. The model checkpoints are available at https://github.com/deepseek-ai/DeepSeek-V3.

Figure 1 | Benchmark performance of DeepSeek-V3 and its counterparts.


1. Introduction


In recent years, Large Language Models (LLMs) have been undergoing rapid iteration and evolution (Anthropic, 2024; Google, 2024; OpenAI, 2024a), progressively narrowing the gap toward Artificial General Intelligence (AGI). Beyond closed-source models, open-source models, including the DeepSeek series (DeepSeek-AI, 2024a,b,c; Guo et al., 2024), the LLaMA series (AI@Meta, 2024a,b; Touvron et al., 2023a,b), the Qwen series (Qwen, 2023, 2024a,b), and the Mistral series (Jiang et al., 2023; Mistral, 2024), are also making significant strides, endeavoring to close the gap with their closed-source counterparts. To further push the boundaries of open-source model capabilities, we scale up our models and introduce DeepSeek-V3, a large Mixture-of-Experts (MoE) model with 671B parameters, of which 37B are activated for each token.

With a forward-looking perspective, we consistently strive for strong model performance and economical costs. Therefore, in terms of architecture, DeepSeek-V3 still adopts Multi-head Latent Attention (MLA) (DeepSeek-AI, 2024c) for efficient inference and DeepSeekMoE (Dai et al., 2024) for cost-effective training. These two architectures have been validated in DeepSeek-V2 (DeepSeek-AI, 2024c), demonstrating their capability to maintain robust model performance while achieving efficient training and inference. Beyond the basic architecture, we implement two additional strategies to further enhance the model capabilities. Firstly, DeepSeek-V3 pioneers an auxiliary-loss-free strategy (Wang et al., 2024a) for load balancing, with the aim of minimizing the adverse impact on model performance that arises from the effort to encourage load balancing. Secondly, DeepSeek-V3 employs a multi-token prediction training objective, which we have observed to enhance the overall performance on evaluation benchmarks.

To achieve efficient training, we support FP8 mixed precision training and implement comprehensive optimizations for the training framework. Low-precision training has emerged as a promising solution for efficient training (Dettmers et al., 2022; Kalamkar et al., 2019; Narang et al., 2017; Peng et al., 2023b), and its evolution is closely tied to advancements in hardware capabilities (Luo et al., 2024; Micikevicius et al., 2022; Rouhani et al., 2023a). In this work, we introduce an FP8 mixed precision training framework and, for the first time, validate its effectiveness on an extremely large-scale model. Through the support for FP8 computation and storage, we achieve both accelerated training and reduced GPU memory usage. As for the training framework, we design the DualPipe algorithm for efficient pipeline parallelism, which has fewer pipeline bubbles and hides most of the communication during training through computation-communication overlap. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. In addition, we develop efficient cross-node all-to-all communication kernels to fully utilize InfiniBand (IB) and NVLink bandwidths. Furthermore, we meticulously optimize the memory footprint, making it possible to train DeepSeek-V3 without using costly tensor parallelism. Combining these efforts, we achieve high training efficiency.

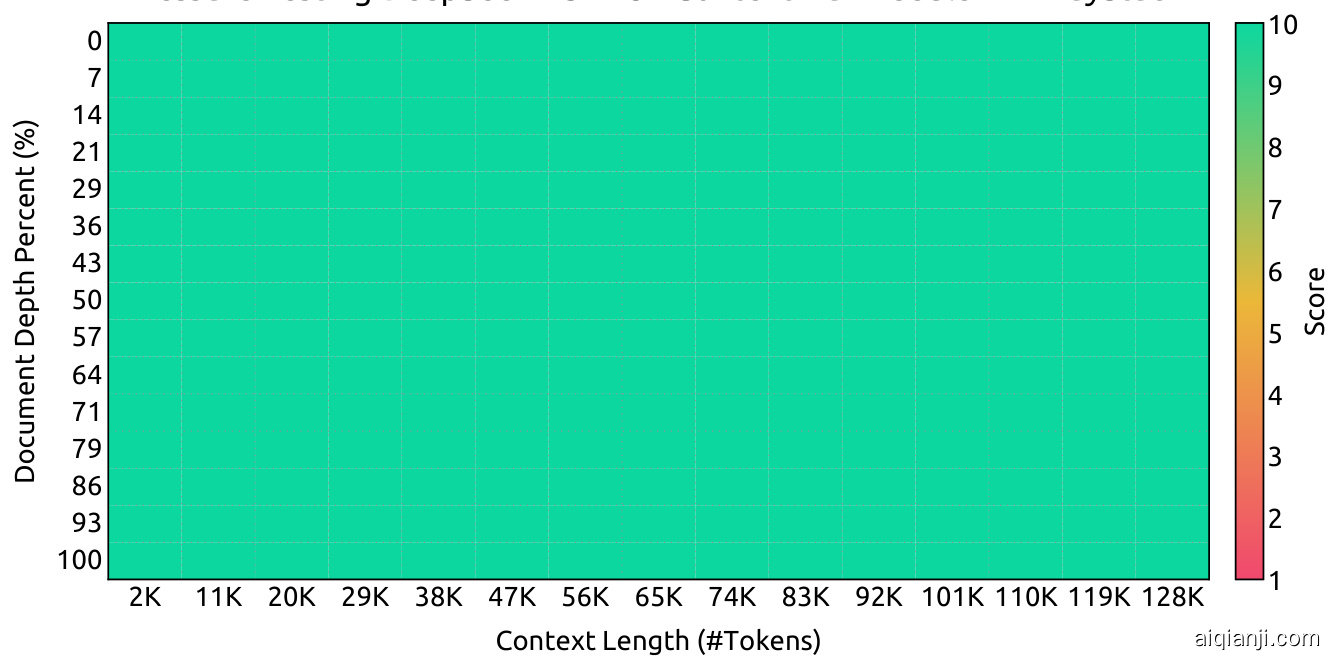

During pre-training, we train DeepSeek-V3 on 14.8T high-quality and diverse tokens. The pre-training process is remarkably stable. Throughout the entire training process, we did not encounter any irrecoverable loss spikes or have to roll back. Next, we conduct a two-stage context length extension for DeepSeek-V3. In the first stage, the maximum context length is extended to 32K, and in the second stage, it is further extended to 128K. Following this, we conduct post-training, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the base model of DeepSeek-V3, to align it with human preferences and further unlock its potential. During the post-training stage, we distill the reasoning capability from the DeepSeek-R1 series of models, and meanwhile carefully maintain the balance between model accuracy and generation length.

Table 1 | Training costs of DeepSeek-V3, assuming the rental price of H800 is \$2 per GPU hour.

| Training Costs | Pre-Training | Context Extension | Post-Training | Total |
|---|---|---|---|---|
| In H800 GPU hours | 2664K | 119K | 5K | 2788K |
| In USD | \$5.328M | \$0.238M | \$0.01M | \$5.576M |

We evaluate DeepSeek-V3 on a comprehensive array of benchmarks. Despite its economical training costs, comprehensive evaluations reveal that DeepSeek-V3-Base has emerged as the strongest open-source base model currently available, especially in code and math. Its chat version also outperforms other open-source models and achieves performance comparable to leading closed-source models, including GPT-4o and Claude-3.5-Sonnet, on a series of standard and open-ended benchmarks.


Lastly, we emphasize again the economical training costs of DeepSeek-V3, summarized in Table 1, achieved through our optimized co-design of algorithms, frameworks, and hardware. During the pre-training stage, training DeepSeek-V3 on each trillion tokens requires only 180K H800 GPU hours, i.e., 3.7 days on our cluster with 2048 H800 GPUs. Consequently, our pre-training stage is completed in less than two months and costs 2664K GPU hours. Combined with 119K GPU hours for the context length extension and 5K GPU hours for post-training, DeepSeek-V3 costs only 2.788M GPU hours for its full training. Assuming the rental price of the H800 GPU is \$2 per GPU hour, our total training costs amount to only \$5.576M. Note that the aforementioned costs include only the official training of DeepSeek-V3, excluding the costs associated with prior research and ablation experiments on architectures, algorithms, or data.
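The cost figures above follow from simple arithmetic, which the short sketch below reproduces. It uses only the numbers quoted in the text and Table 1; the constant and function names are illustrative and not part of any DeepSeek tooling.

```python
# Figures quoted in the text; all names here are illustrative only.
CLUSTER_GPUS = 2048
GPU_HOURS_PER_TRILLION_TOKENS = 180_000
PRETRAIN_TOKENS_TRILLIONS = 14.8
PRICE_PER_GPU_HOUR_USD = 2.0  # assumed rental price stated in Table 1

STAGE_GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}


def wall_clock_days(gpu_hours, gpus=CLUSTER_GPUS):
    """Convert GPU hours into wall-clock days on a cluster of `gpus` GPUs."""
    return gpu_hours / gpus / 24


total_gpu_hours = sum(STAGE_GPU_HOURS.values())
total_cost_usd = total_gpu_hours * PRICE_PER_GPU_HOUR_USD

print(f"Days per trillion tokens: {wall_clock_days(GPU_HOURS_PER_TRILLION_TOKENS):.1f}")
print(f"Pre-training days:        {wall_clock_days(STAGE_GPU_HOURS['pre-training']):.1f}")
print(f"Total GPU hours:          {total_gpu_hours:,}")
print(f"Total cost (USD):         {total_cost_usd:,.0f}")
```

The pre-training figure is also consistent with the per-trillion-token rate: 14.8 trillion tokens at 180K GPU hours each gives 2664K GPU hours.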

Our main contributions include:

Architecture: Innovative Load Balancing Strategy and Training Objective


• On top of the efficient architecture of DeepSeek-V2, we pioneer an auxiliary-loss-free strategy for load balancing, which minimizes the performance degradation that arises from encouraging load balancing.

• We investigate a Multi-Token Prediction (MTP) objective and show that it benefits model performance. It can also be used for speculative decoding to accelerate inference.

Pre-Training: Towards Ultimate Training Efficiency


• We design an FP8 mixed precision training framework and, for the first time, validate the feasibility and effectiveness of FP8 training on an extremely large-scale model.

• Through the co-design of algorithms, frameworks, and hardware, we overcome the communication bottleneck in cross-node MoE training, achieving near-full computation-communication overlap. This significantly enhances our training efficiency and reduces the training costs, enabling us to further scale up the model size without additional overhead.

• At an economical cost of only 2.664M H800 GPU hours, we complete the pre-training of DeepSeek-V3 on 14.8T tokens, producing the currently strongest open-source base model. The subsequent training stages after pre-training require only 0.1M GPU hours.

Post-Training: Knowledge Distillation from DeepSeek-R1


• We introduce an innovative methodology to distill reasoning capabilities from a long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek-R1 series models, into standard LLMs, particularly DeepSeek-V3. Our pipeline elegantly incorporates the verification and reflection patterns of R1 into DeepSeek-V3 and notably improves its reasoning performance. Meanwhile, we also maintain control over the output style and length of DeepSeek-V3.

Summary of Core Evaluation Results


• Knowledge: (1) On educational benchmarks such as MMLU, MMLU-Pro, and GPQA, DeepSeek-V3 outperforms all other open-source models, achieving 88.5 on MMLU, 75.9 on MMLU-Pro, and 59.1 on GPQA. Its performance is comparable to leading closed-source models like GPT-4o and Claude-Sonnet-3.5, narrowing the gap between open-source and closed-source models in this domain. (2) For factuality benchmarks, DeepSeek-V3 demonstrates superior performance among open-source models on both SimpleQA and Chinese SimpleQA. While it trails behind GPT-4o and Claude-Sonnet-3.5 in English factual knowledge (SimpleQA), it surpasses these models on Chinese SimpleQA, highlighting its strength in Chinese factual knowledge.

• Code, Math, and Reasoning: (1) DeepSeek-V3 achieves state-of-the-art performance on math-related benchmarks among all non-long-CoT open-source and closed-source models. Notably, it even outperforms o1-preview on specific benchmarks, such as MATH-500, demonstrating its robust mathematical reasoning capabilities. (2) On coding-related tasks, DeepSeek-V3 emerges as the top-performing model for coding competition benchmarks, such as LiveCodeBench, solidifying its position as the leading model in this domain. For engineering-related tasks, while DeepSeek-V3 performs slightly below Claude-Sonnet-3.5, it still outpaces all other models by a significant margin, demonstrating its competitiveness across diverse technical benchmarks.

In the remainder of this paper, we first present a detailed exposition of our DeepSeek-V3 model architecture (Section 2). Subsequently, we introduce our infrastructures, encompassing our compute clusters, the training framework, the support for FP8 training, the inference deployment strategy, and our suggestions on future hardware design (Section 3). Next, we describe our pre-training process, including the construction of training data, hyper-parameter settings, long-context extension techniques, the associated evaluations, as well as some discussions (Section 4). Thereafter, we discuss our efforts on post-training, which include Supervised Fine-Tuning (SFT), Reinforcement Learning (RL), the corresponding evaluations, and discussions (Section 5). Lastly, we conclude this work, discuss existing limitations of DeepSeek-V3, and propose potential directions for future research (Section 6).

2. Architecture


We first introduce the basic architecture of DeepSeek-V3, which features Multi-head Latent Attention (MLA) (DeepSeek-AI, 2024c) for efficient inference and DeepSeekMoE (Dai et al., 2024) for economical training. Then, we present a Multi-Token Prediction (MTP) training objective, which we have observed to enhance the overall performance on evaluation benchmarks. For other minor details not explicitly mentioned, DeepSeek-V3 adheres to the settings of DeepSeek-V2 (DeepSeek-AI, 2024c).

2.1. Basic Architecture


The basic architecture of DeepSeek-V3 is still within the Transformer (Vaswani et al., 2017) framework. For efficient inference and economical training, DeepSeek-V3 also adopts MLA and DeepSeekMoE, which have been thoroughly validated by DeepSeek-V2. Compared with DeepSeek-V2, the one exception is that we additionally introduce an auxiliary-loss-free load balancing strategy (Wang et al., 2024a) for DeepSeekMoE to mitigate the performance degradation induced by the effort to ensure load balance. Figure 2 illustrates the basic architecture of DeepSeek-V3, and we briefly review the details of MLA and DeepSeekMoE in this section.

Figure 2 | Illustration of the basic architecture of DeepSeek-V3. Following DeepSeek-V2, we adopt MLA and DeepSeekMoE for efficient inference and economical training.

2.1.1. Multi-Head Latent Attention


For attention, DeepSeek-V3 adopts the MLA architecture. Let $d$ denote the embedding dimension, $n_{h}$ denote the number of attention heads, $d_{h}$ denote the dimension per head, and $\mathbf{h}_{t}\in\mathbb{R}^{d}$ denote the attention input for the $t$-th token at a given attention layer. The core of MLA is the low-rank joint compression for attention keys and values to reduce Key-Value (KV) cache during inference:
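The display equations introduced by this sentence did not survive in this copy. A reconstruction that is consistent with the symbol definitions and matrix shapes given in the following paragraph is sketched below; the boxes mark the two vectors that the text says must be cached during generation.

$$
\begin{aligned}
\boxed{\mathbf{c}_{t}^{KV}} &= W^{DKV}\mathbf{h}_{t}, \\
[\mathbf{k}_{t,1}^{C};\mathbf{k}_{t,2}^{C};\dots;\mathbf{k}_{t,n_{h}}^{C}] = \mathbf{k}_{t}^{C} &= W^{UK}\mathbf{c}_{t}^{KV}, \\
\boxed{\mathbf{k}_{t}^{R}} &= \operatorname{RoPE}(W^{KR}\mathbf{h}_{t}), \\
\mathbf{k}_{t,i} &= [\mathbf{k}_{t,i}^{C};\mathbf{k}_{t}^{R}], \\
[\mathbf{v}_{t,1}^{C};\mathbf{v}_{t,2}^{C};\dots;\mathbf{v}_{t,n_{h}}^{C}] = \mathbf{v}_{t}^{C} &= W^{UV}\mathbf{c}_{t}^{KV}.
\end{aligned}
$$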

where $\mathbf{c}_{t}^{KV}\in\mathbb{R}^{d_{c}}$ is the compressed latent vector for keys and values; $d_{c}\,(\ll d_{h}n_{h})$ indicates the KV compression dimension; $W^{DKV}\in\mathbb{R}^{d_{c}\times d}$ denotes the down-projection matrix; $W^{UK}, W^{UV}\in\mathbb{R}^{d_{h}n_{h}\times d_{c}}$ are the up-projection matrices for keys and values, respectively; $W^{KR}\in\mathbb{R}^{d_{h}^{R}\times d}$ is the matrix used to produce the decoupled key that carries Rotary Positional Embedding (RoPE) (Su et al., 2024); $\operatorname{RoPE}(\cdot)$ denotes the operation that applies RoPE matrices; and $[\cdot;\cdot]$ denotes concatenation. Note that for MLA, only the blue-boxed vectors (i.e., $\mathbf{c}_{t}^{KV}$ and $\mathbf{k}_{t}^{R}$) need to be cached during generation, which results in significantly reduced KV cache while maintaining performance comparable to standard Multi-Head Attention (MHA) (Vaswani et al., 2017).

For the attention queries, we also perform a low-rank compression, which can reduce the activation memory during training:

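The query-side equations are likewise missing here. Under the shapes stated in the next paragraph ($W^{DQ}$ acts on $\mathbf{h}_{t}$, while $W^{UQ}$ and $W^{QR}$ act on the compressed query), a consistent reconstruction would be:

$$
\begin{aligned}
\mathbf{c}_{t}^{Q} &= W^{DQ}\mathbf{h}_{t}, \\
[\mathbf{q}_{t,1}^{C};\mathbf{q}_{t,2}^{C};\dots;\mathbf{q}_{t,n_{h}}^{C}] = \mathbf{q}_{t}^{C} &= W^{UQ}\mathbf{c}_{t}^{Q}, \\
[\mathbf{q}_{t,1}^{R};\mathbf{q}_{t,2}^{R};\dots;\mathbf{q}_{t,n_{h}}^{R}] = \mathbf{q}_{t}^{R} &= \operatorname{RoPE}(W^{QR}\mathbf{c}_{t}^{Q}), \\
\mathbf{q}_{t,i} &= [\mathbf{q}_{t,i}^{C};\mathbf{q}_{t,i}^{R}].
\end{aligned}
$$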

where $\mathbf{c}_{t}^{Q}\in\mathbb{R}^{d_{c}^{\prime}}$ is the compressed latent vector for queries; $d_{c}^{\prime}\,(\ll d_{h}n_{h})$ denotes the query compression dimension; $W^{DQ}\in\mathbb{R}^{d_{c}^{\prime}\times d}$ and $W^{UQ}\in\mathbb{R}^{d_{h}n_{h}\times d_{c}^{\prime}}$ are the down-projection and up-projection matrices for queries, respectively; and $W^{QR}\in\mathbb{R}^{d_{h}^{R}n_{h}\times d_{c}^{\prime}}$ is the matrix to produce the decoupled queries that carry RoPE.

Ultimately, the attention queries ($\mathbf{q}_{t,i}$), keys ($\mathbf{k}_{j,i}$), and values ($\mathbf{v}_{j,i}^{C}$) are combined to yield the final attention output $\mathbf{u}_{t}$:
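The attention equations are not present in this copy. A reconstruction in the same notation is given below; the scaling by $\sqrt{d_{h}+d_{h}^{R}}$ reflects the concatenated per-head query and key dimension and should be read as following from the definitions above rather than from text that survives here.

$$
\begin{aligned}
\mathbf{o}_{t,i} &= \sum_{j=1}^{t}\operatorname{Softmax}_{j}\!\left(\frac{\mathbf{q}_{t,i}^{\top}\mathbf{k}_{j,i}}{\sqrt{d_{h}+d_{h}^{R}}}\right)\mathbf{v}_{j,i}^{C}, \\
\mathbf{u}_{t} &= W^{O}\,[\mathbf{o}_{t,1};\mathbf{o}_{t,2};\dots;\mathbf{o}_{t,n_{h}}].
\end{aligned}
$$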

where $W^{O}\in\mathbb{R}^{d\times d_{h}n_{h}}$ denotes the output projection matrix.


2.1.2. DeepSeekMoE with Auxiliary-Loss-Free Load Balancing

Basic Architecture of DeepSeekMoE. For Feed-Forward Networks (FFNs), DeepSeek-V3 employs the DeepSeekMoE architecture (Dai et al., 2024). Compared with traditional MoE architectures like GShard (Lepikhin et al., 2021), DeepSeekMoE uses finer-grained experts and isolates some experts as shared ones. Let $\mathbf{u}_{t}$ denote the FFN input of the $t$-th token; we compute the FFN output $\mathbf{h}_{t}^{\prime}$ as follows:
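The MoE equations are missing from this copy. Based on the definitions in the next paragraph (shared and routed experts, top-$K_{r}$ selection, sigmoid affinities, and normalization over the selected scores), they can be reconstructed as:

$$
\begin{aligned}
\mathbf{h}_{t}^{\prime} &= \mathbf{u}_{t} + \sum_{i=1}^{N_{s}}\mathrm{FFN}_{i}^{(s)}(\mathbf{u}_{t}) + \sum_{i=1}^{N_{r}} g_{i,t}\,\mathrm{FFN}_{i}^{(r)}(\mathbf{u}_{t}), \\
g_{i,t} &= \frac{g_{i,t}^{\prime}}{\sum_{j=1}^{N_{r}} g_{j,t}^{\prime}}, \\
g_{i,t}^{\prime} &= \begin{cases} s_{i,t}, & s_{i,t}\in\mathrm{Topk}(\{s_{j,t}\mid 1\le j\le N_{r}\},K_{r}), \\ 0, & \text{otherwise}, \end{cases} \\
s_{i,t} &= \operatorname{Sigmoid}(\mathbf{u}_{t}^{\top}\mathbf{e}_{i}).
\end{aligned}
$$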

where $N_{s}$ and $N_{r}$ denote the numbers of shared experts and routed experts, respectively; $\mathrm{FFN}_{i}^{(s)}(\cdot)$ and $\mathrm{FFN}_{i}^{(r)}(\cdot)$ denote the $i$-th shared expert and the $i$-th routed expert, respectively; $K_{r}$ denotes the number of activated routed experts; $g_{i,t}$ is the gating value for the $i$-th expert; $s_{i,t}$ is the token-to-expert affinity; $\mathbf{e}_{i}$ is the centroid vector of the $i$-th routed expert; and $\mathrm{Topk}(\cdot,K)$ denotes the set comprising the $K$ highest scores among the affinity scores calculated for the $t$-th token and all routed experts. Slightly different from DeepSeek-V2, DeepSeek-V3 uses the sigmoid function to compute the affinity scores, and applies a normalization among all selected affinity scores to produce the gating values.

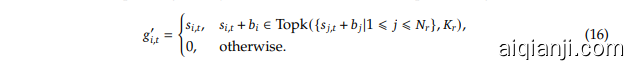

Auxiliary-Loss-Free Load Balancing. For MoE models, an unbalanced expert load will lead to routing collapse (Shazeer et al., 2017) and diminish computational efficiency in scenarios with expert parallelism. Conventional solutions usually rely on the auxiliary loss (Fedus et al., 2021; Lepikhin et al., 2021) to avoid unbalanced load. However, too large an auxiliary loss will impair the model performance (Wang et al., 2024a). To achieve a better trade-off between load balance and model performance, we pioneer an auxiliary-loss-free load balancing strategy (Wang et al., 2024a) to ensure load balance. To be specific, we introduce a bias term $b_{i}$ for each expert and add it to the corresponding affinity scores $s_{i,t}$ to determine the top-K routing:

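The routing equation itself is missing from this copy. Given that the bias $b_{i}$ is added to the affinity only for top-K selection, while the gating value keeps the original $s_{i,t}$, a consistent reconstruction is:

$$
g_{i,t}^{\prime} = \begin{cases} s_{i,t}, & s_{i,t}+b_{i}\in\mathrm{Topk}(\{s_{j,t}+b_{j}\mid 1\le j\le N_{r}\},K_{r}), \\ 0, & \text{otherwise}. \end{cases}
$$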

Note that the bias term is only used for routing. The gating value, which will be multiplied with the FFN output, is still derived from the original affinity score $s_{i,t}$. During training, we keep monitoring the expert load on the whole batch of each training step. At the end of each step, we decrease the bias term by $\gamma$ if its corresponding expert is overloaded, and increase it by $\gamma$ if its corresponding expert is underloaded, where $\gamma$ is a hyper-parameter called the bias update speed. Through this dynamic adjustment, DeepSeek-V3 keeps the expert load balanced during training, and achieves better performance than models that encourage load balance through pure auxiliary losses.
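The routing rule and the bias update described above can be illustrated with a small sketch. This is not the authors' implementation: the function names are hypothetical, and the text does not define "overloaded" precisely, so the sketch assumes it means a load above the mean load across experts for the step.

```python
def route_token(scores, bias, k):
    """Pick the top-k experts by biased score; gate with the ORIGINAL scores.

    scores: affinity s_{i,t} of one token to every routed expert.
    bias:   per-expert bias b_i, used only for selection.
    Returns {expert_index: gating_value}, gates normalized over the selection.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    selected = order[:k]
    total = sum(scores[i] for i in selected)
    return {i: scores[i] / total for i in selected}


def update_bias(bias, load, gamma):
    """End-of-step update: lower the bias of overloaded experts, raise it otherwise.

    Assumption: an expert is overloaded if its load exceeds the mean load,
    and underloaded if it is below the mean.
    """
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean_load:
            new_bias.append(b - gamma)
        elif l < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


# Expert 2 wins a slot only because of its bias, yet its gate uses the raw score.
gates = route_token(scores=[0.9, 0.5, 0.4], bias=[0.0, 0.0, 0.2], k=2)
print(gates)

# One step of bias adjustment for a batch where expert 0 received most tokens.
print(update_bias(bias=[0.0, 0.0, 0.0], load=[5, 1, 0], gamma=0.001))
```

Keeping the bias out of the gating value means the balancing mechanism changes which experts are chosen but not how their outputs are weighted.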

Complementary Sequence-Wise Auxiliary Loss. Although DeepSeek-V3 mainly relies on the auxiliary-loss-free strategy for load balance, to prevent extreme imbalance within any single sequence, we also employ a complementary sequence-wise balance loss:

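The loss definition is missing from this copy. A reconstruction consistent with the symbols explained next ($\alpha$, the indicator function $\mathbb{1}(\cdot)$, and the sequence length $T$), and with the routing notation used earlier, is:

$$
\begin{aligned}
\mathcal{L}_{\mathrm{Bal}} &= \alpha\sum_{i=1}^{N_{r}} f_{i}P_{i}, \\
f_{i} &= \frac{N_{r}}{K_{r}T}\sum_{t=1}^{T}\mathbb{1}\!\left(s_{i,t}\in\mathrm{Topk}(\{s_{j,t}\mid 1\le j\le N_{r}\},K_{r})\right), \\
s_{i,t}^{\prime} &= \frac{s_{i,t}}{\sum_{j=1}^{N_{r}} s_{j,t}}, \\
P_{i} &= \frac{1}{T}\sum_{t=1}^{T} s_{i,t}^{\prime}.
\end{aligned}
$$

Here $f_{i}$ is the scaled fraction of tokens in the sequence routed to expert $i$, and $P_{i}$ is the mean normalized affinity of expert $i$ over the sequence.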

where the balance factor $\alpha$ is a hyper-parameter, which will be assigned an extremely small value for DeepSeek-V3; $\mathbb{1}(\cdot)$ denotes the indicator function; and $T$ denotes the number of tokens in a sequence. The sequence-wise balance loss encourages the expert load on each sequence to be balanced.


Figure 3 | Illustration of our Multi-Token Prediction (MTP) implementation. We keep the complete causal chain for the prediction of each token at each depth.


Node-Limited Routing. Like the device-limited routing used by DeepSeek-V2, DeepSeek-V3 also uses a restricted routing mechanism to limit communication costs during training. In short, we ensure that each token will be sent to at most $M$ nodes, which are selected according to the sum of the highest $\frac{K_{r}}{M}$ affinity scores of the experts distributed on each node. Under this constraint, our MoE training framework can nearly achieve full computation-communication overlap.
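A minimal sketch of this selection rule follows. It assumes experts are laid out contiguously, node by node, with the same number of experts on every node, and that $K_{r}$ is divisible by $M$; the function and parameter names are hypothetical, and the load-balancing bias is omitted for brevity.

```python
def node_limited_topk(scores, experts_per_node, k_r, m):
    """Select k_r experts for one token while touching at most m nodes.

    scores: affinity of the token to each routed expert, ordered node by node.
    Step 1: score each node by the sum of its top (k_r // m) affinities.
    Step 2: keep the m best nodes.
    Step 3: take the k_r best experts among those nodes only.
    """
    n_nodes = len(scores) // experts_per_node
    per_node = k_r // m

    node_scores = []
    for n in range(n_nodes):
        block = scores[n * experts_per_node:(n + 1) * experts_per_node]
        node_scores.append(sum(sorted(block, reverse=True)[:per_node]))

    best_nodes = sorted(range(n_nodes), key=lambda n: node_scores[n], reverse=True)[:m]

    allowed = [
        e
        for n in best_nodes
        for e in range(n * experts_per_node, (n + 1) * experts_per_node)
    ]
    chosen = sorted(allowed, key=lambda e: scores[e], reverse=True)[:k_r]
    return sorted(chosen)


# Three nodes with two experts each. Unrestricted top-2 would pick experts 0 and 4,
# which live on different nodes; with m=1 the token stays on the single best node.
scores = [0.9, 0.1, 0.5, 0.6, 0.8, 0.05]
print(node_limited_topk(scores, experts_per_node=2, k_r=2, m=1))
print(node_limited_topk(scores, experts_per_node=2, k_r=2, m=2))
```

The toy example shows the trade-off: restricting the node count can exclude a high-affinity expert, in exchange for bounding the cross-node traffic per token.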

No Token-Dropping. Due to the effective load balancing strategy, DeepSeek-V3 keeps a good load balance during its full training. Therefore, DeepSeek-V3 does not drop any tokens during training. In addition, we also implement specific deployment strategies to ensure inference load balance, so DeepSeek-V3 also does not drop tokens during inference.


2.2. Multi-Token Prediction


Inspired by Gloeckle et al. (2024), we investigate and set a Multi-Token Prediction (MTP) objective for DeepSeek-V3, which extends the prediction scope to multiple future tokens at each position. On the one hand, an MTP objective densifies the training signals and may improve data efficiency. On the other hand, MTP may enable the model to pre-plan its representations for better prediction of future tokens. Figure 3 illustrates our implementation of MTP. Unlike Gloeckle et al. (2024), who predict $D$ additional tokens in parallel using independent output heads, we predict additional tokens sequentially and keep the complete causal chain at each prediction depth. We introduce the details of our MTP implementation in this section.

MTP Modules. To be specific, our MTP implementation uses $D$ sequential modules to predict $D$ additional tokens. The $k$-th MTP module consists of a shared embedding layer $\operatorname{Emb}(\cdot)$, a shared output head $\operatorname{OutHead}(\cdot)$, a Transformer block $\mathrm{TRM}_{k}(\cdot)$, and a projection matrix $M_{k}\in\mathbb{R}^{d\times 2d}$. For the $i$-th input token $t_{i}$, at the $k$-th prediction depth, we first combine the representation of the $i$-th token at the $(k-1)$-th depth $\mathbf{h}_{i}^{k-1}\in\mathbb{R}^{d}$ and the embedding of the $(i+k)$-th token $\operatorname{Emb}(t_{i+k})\in\mathbb{R}^{d}$ with a linear projection:
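The combining equation is missing from this copy. From the shape $M_{k}\in\mathbb{R}^{d\times 2d}$ and the concatenation described next, the projection acts on the two stacked $d$-dimensional vectors. The reconstruction below also applies RMSNorm to each input, as in the published formulation as best recalled; that normalization is not stated in the surrounding text and should be treated as an assumption.

$$
\mathbf{h}_{i}^{\prime k} = M_{k}\,[\operatorname{RMSNorm}(\mathbf{h}_{i}^{k-1});\operatorname{RMSNorm}(\operatorname{Emb}(t_{i+k}))]
$$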

where $[\cdot;\cdot]$ denotes concatenation. In particular, when $k=1$, $\mathbf{h}_{i}^{k-1}$ refers to the representation given by the main model. Note that for each MTP module, its embedding layer is shared with the main model. The combined $\mathbf{h}_{i}^{\prime k}$ serves as the input of the Transformer block at the $k$-th depth to produce the output representation at the current depth $\mathbf{h}_{i}^{k}$:
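The equation is missing here. Since the $k$-th depth needs the embedding of token $i+k$, only positions $1$ through $T-k$ have a valid input, which gives the following reconstruction using the inclusive slicing notation defined next:

$$
\mathbf{h}_{1:T-k}^{k} = \mathrm{TRM}_{k}(\mathbf{h}_{1:T-k}^{\prime k})
$$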

where $T$ represents the input sequence length and $i{:}j$ denotes the slicing operation (inclusive of both the left and right boundaries). Finally, taking $\mathbf{h}_{i}^{k}$ as the input, the shared output head will compute the probability distribution for the $k$-th additional prediction token $P_{i+1+k}^{k}\in\mathbb{R}^{V}$, where $V$ is the vocabulary size:
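The equation is missing from this copy; from the sentence above it reads, in the same notation:

$$
P_{i+k+1}^{k} = \operatorname{OutHead}(\mathbf{h}_{i}^{k})
$$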

The output head OutHead $(\cdot)$ linearly maps the representation to logits and subsequently applies the Softmax(·) function to compute the prediction probabilities of the $k$ -th additional token. Also, for each MTP module, its output head is shared with the main model. Our principle of maintaining the causal chain of predictions is similar to that of EAGLE (Li et al., 2024b), but its primary objective is speculative decoding (Leviathan et al., 2023; Xia et al., 2023), whereas we utilize MTP to improve training.


MTP Training Objective. For each prediction depth, we compute a cross-entropy loss $\mathcal{L}_{\mathrm{MTP}}^{k}$

MTP 训练目标。对于每个预测深度,我们计算交叉熵损失 $\mathcal{L}_{\mathrm{MTP}}^{k}$。
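A per-depth loss consistent with the symbols explained in the next paragraph is sketched below. The index range $2+k$ to $T+1$ follows the published DeepSeek-V3 formulation and should be read as an assumption here, since the prose only names the quantities involved:

$$
\mathcal{L}_{\mathrm{MTP}}^{k} = \operatorname{CrossEntropy}\left(P_{2+k:T+1}^{k},\ t_{2+k:T+1}\right) = -\frac{1}{T}\sum_{i=2+k}^{T+1}\log P_{i}^{k}[t_{i}]
$$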

where $T$ denotes the input sequence length, $t_{i}$ denotes the ground-truth token at the $i$-th position, and $P_{i}^{k}[t_{i}]$ denotes the corresponding prediction probability of $t_{i}$, given by the $k$-th MTP module. Finally, we compute the average of the MTP losses across all depths and multiply it by a weighting factor $\lambda$ to obtain the overall MTP loss $\mathcal{L}_{\mathrm{MTP}}$, which serves as an additional training objective for DeepSeek-V3:

其中 $T$ 表示输入序列长度,$t_{i}$ 表示第 $i$ 个位置的真实 Token,$P_{i}^{k}[t_{i}]$ 表示第 $k$ 个 MTP 模块给出的 $t_{i}$ 的对应预测概率。最后,我们计算所有深度的 MTP 损失的平均值,并乘以权重因子 $\lambda$ 以获得整体 MTP 损失 ${\mathcal{L}}_{\mathrm{MTP}}$,作为 DeepSeek-V3 的额外训练目标:
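Averaging over the $D$ depths and scaling by $\lambda$, as described above, gives:

$$
\mathcal{L}_{\mathrm{MTP}} = \frac{\lambda}{D}\sum_{k=1}^{D}\mathcal{L}_{\mathrm{MTP}}^{k}
$$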

MTP in Inference. Our MTP strategy mainly aims to improve the performance of the main model, so during inference we can simply discard the MTP modules and let the main model run independently. Alternatively, these MTP modules can be repurposed for speculative decoding to further reduce generation latency.

推理中的 MTP。我们的 MTP 策略主要旨在提高主模型的性能,因此在推理过程中,我们可以直接丢弃 MTP 模块,主模型可以独立且正常地运行。此外,我们还可以将这些 MTP 模块重新用于推测解码,以进一步减少生成延迟。

3. Infrastructures

3. 基础设施

3.1. Compute Clusters

3.1. 计算集群

DeepSeek-V3 is trained on a cluster equipped with 2048 NVIDIA H800 GPUs. Each node in the H800 cluster contains 8 GPUs connected by NVLink and NVSwitch. Across nodes, InfiniBand (IB) interconnects are used for communication.

DeepSeek-V3 在配备 2048 个 NVIDIA H800 GPU 的集群上进行训练。H800 集群中的每个节点包含 8 个 GPU,通过节点内的 NVLink 和 NVSwitch 连接。不同节点之间使用 InfiniBand (IB) 互连以促进通信。

3.2. Training Framework

3.2. 训练框架

The training of DeepSeek-V3 is supported by the HAI-LLM framework, an efficient and lightweight training framework crafted by our engineers from the ground up. On the whole, DeepSeek-V3 applies 16-way Pipeline Parallelism (PP) (Qi et al., 2023a), 64-way Expert Parallelism (EP) (Lepikhin et al., 2021) spanning 8 nodes, and ZeRO-1 Data Parallelism (DP) (Rajbhandari et al., 2020).

DeepSeek-V3 的训练由 HAI-LLM 框架支持,这是一个由我们的工程师从头构建的高效且轻量级的训练框架。总体而言,DeepSeek-V3 应用了 16 路流水线并行 (Pipeline Parallelism, PP) (Qi et al., 2023a)、跨 8 个节点的 64 路专家并行 (Expert Parallelism, EP) (Lepikhin et al., 2021),以及 ZeRO-1 数据并行 (Data Parallelism, DP) (Rajbhandari et al., 2020)。

To facilitate efficient training of DeepSeek-V3, we implement meticulous engineering optimizations. First, we design the DualPipe algorithm for efficient pipeline parallelism. Compared with existing PP methods, DualPipe has fewer pipeline bubbles. More importantly, it overlaps the computation and communication phases across forward and backward processes, thereby addressing the challenge of heavy communication overhead introduced by cross-node expert parallelism. Second, we develop efficient cross-node all-to-all communication kernels to fully utilize IB and NVLink bandwidths and conserve Streaming Multiprocessors (SMs) dedicated to communication. Finally, we meticulously optimize the memory footprint during training, thereby enabling us to train DeepSeek-V3 without using costly Tensor Parallelism (TP).

为了高效训练 DeepSeek-V3,我们实施了精细的工程优化。首先,我们设计了 DualPipe 算法以实现高效的流水线并行。与现有的流水线并行方法相比,DualPipe 的流水线气泡更少。更重要的是,它在前向和后向过程中重叠了计算和通信阶段,从而解决了跨节点专家并行引入的通信开销大的挑战。其次,我们开发了高效的跨节点全对全通信内核,以充分利用 IB 和 NVLink 带宽,并节省专用于通信的流式多处理器 (SMs)。最后,我们精心优化了训练期间的内存占用,从而使得我们能够在无需使用昂贵的张量并行 (TP) 的情况下训练 DeepSeek-V3。

3.2.1. DualPipe and Computation-Communication Overlap

3.2.1. DualPipe 与计算-通信重叠

For DeepSeek-V3, the communication overhead introduced by cross-node expert parallelism results in an inefficient computation-to-communication ratio of approximately 1:1. To tackle this challenge, we design an innovative pipeline parallelism algorithm called DualPipe, which not only accelerates model training by effectively overlapping forward and backward computation-communication phases, but also reduces the pipeline bubbles.

对于 DeepSeek-V3,跨节点专家并行引入的通信开销导致计算与通信的比例约为 1:1,效率较低。为了解决这一挑战,我们设计了一种创新的流水线并行算法,称为 DualPipe,该算法不仅通过有效重叠前向和后向计算通信阶段来加速模型训练,还减少了流水线气泡。

The key idea of DualPipe is to overlap the computation and communication within a pair of individual forward and backward chunks. To be specific, we divide each chunk into four components: attention, all-to-all dispatch, MLP, and all-to-all combine. For a backward chunk, both attention and MLP are further split into two parts, backward for input and backward for weights, as in ZeroBubble (Qi et al., 2023b). In addition, we have a PP communication component. As illustrated in Figure 4, for a pair of forward and backward chunks, we rearrange these components and manually adjust the ratio of GPU SMs dedicated to communication versus computation. With this overlapping strategy, both all-to-all and PP communication can be fully hidden during execution. Given the efficient overlapping strategy, the full DualPipe scheduling is illustrated in Figure 5. It employs bidirectional pipeline scheduling, which feeds micro-batches from both ends of the pipeline simultaneously, so that a significant portion of communications can be fully overlapped. This overlap also ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead.

DualPipe 的核心思想是在一对独立的前向和后向块中重叠计算和通信。具体来说,我们将每个块分为四个部分:注意力 (attention)、全对全分发 (all-to-all dispatch)、MLP 和全对全合并 (all-to-all combine)。特别地,对于后向块,注意力和 MLP 都进一步分为两部分,即输入的后向和权重的后向,类似于 ZeroBubble (Qi et al., 2023b)。此外,我们还有一个 PP 通信部分。如图 4 所示,对于一对前向和后向块,我们重新排列这些部分,并手动调整 GPU SMs 用于通信与计算的比例。在这种重叠策略中,我们可以确保全对全和 PP 通信在执行过程中完全隐藏。鉴于这种高效的重叠策略,完整的 DualPipe 调度如图 5 所示。它采用了双向流水线调度,同时从流水线的两端输入微批次,并且大部分通信可以完全重叠。这种重叠还确保了随着模型的进一步扩展,只要我们保持恒定的计算与通信比例,我们仍然可以在节点之间使用细粒度的专家,同时实现接近零的全对全通信开销。

Figure 5 | Example DualPipe scheduling for 8 PP ranks and 20 micro-batches in two directions. The micro-batches in the reverse direction are symmetric to those in the forward direction, so we omit their batch ID for illustration simplicity. Two cells enclosed by a shared black border have mutually overlapped computation and communication.

图 5 | 8 个 PP 等级和 20 个微批次在两个方向上的 DualPipe 调度示例。反向的微批次与正向的微批次对称,因此为了简化说明,我们省略了它们的批次 ID。由共享黑色边框包围的两个单元格具有相互重叠的计算和通信。

| Method | Bubble | Parameter | Activation |
|---|---|---|---|
| 1F1B | $(PP-1)(F+B)$ | $1\times$ | $PP$ |
| ZB1P | $(PP-1)(F+B-2W)$ | $1\times$ | $PP$ |
| DualPipe (Ours) | $\left(\frac{PP}{2}-1\right)(F\&B+B-3W)$ | $2\times$ | $PP+1$ |

Table 2 | Comparison of pipeline bubbles and memory usage across different pipeline parallel methods. $F$ denotes the execution time of a forward chunk, $B$ denotes the execution time of a full backward chunk, $W$ denotes the execution time of a "backward for weights" chunk, and $F\&B$ denotes the execution time of two mutually overlapped forward and backward chunks.

表 2 | 不同流水线并行方法中的流水线气泡和内存使用情况对比。$F$ 表示前向块 (forward chunk) 的执行时间,$B$ 表示完整反向块 (full backward chunk) 的执行时间,$W$ 表示“权重反向块” (backward for weights chunk) 的执行时间,$F\&B$ 表示两个相互重叠的前向和反向块的执行时间。

In addition, even in more general scenarios without a heavy communication burden, DualPipe still exhibits efficiency advantages. In Table 2, we summarize the pipeline bubbles and memory usage across different PP methods. As shown in the table, compared with ZB1P (Qi et al., 2023b) and 1F1B (Harlap et al., 2018), DualPipe significantly reduces the pipeline bubbles while increasing the peak activation memory by only $\frac{1}{PP}$ of its original value (from $PP$ to $PP+1$ in Table 2). Although DualPipe requires keeping two copies of the model parameters, this does not significantly increase the memory consumption since we use a large EP size during training. Compared with Chimera (Li and Hoefler, 2021), DualPipe only requires that the pipeline stages and micro-batches be divisible by 2, without requiring micro-batches to be divisible by pipeline stages. In addition, for DualPipe, neither the bubbles nor activation memory will increase as the number of micro-batches grows.

此外,即使在通信负担不重的更一般场景中,DualPipe 仍然展现出效率优势。在表 2 中,我们总结了不同流水线并行 (PP) 方法中的流水线气泡和内存使用情况。如表所示,与 ZB1P (Qi et al., 2023b) 和 1F1B (Harlap et al., 2018) 相比,DualPipe 显著减少了流水线气泡,同时仅将峰值激活内存增加了 $\frac{1}{PP}$ 倍。尽管 DualPipe 需要保留两份模型参数副本,但由于我们在训练时使用了较大的 EP 大小,这并不会显著增加内存消耗。与 Chimera (Li and Hoefler, 2021) 相比,DualPipe 仅要求流水线阶段和微批次可被 2 整除,而不要求微批次可被流水线阶段整除。此外,对于 DualPipe 来说,无论是气泡还是激活内存都不会随着微批次数量的增加而增加。
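To make the comparison in Table 2 concrete, the sketch below evaluates the three bubble formulas for one set of chunk timings. The timing values are hypothetical and chosen only for illustration, and the DualPipe bubble is taken to be $(PP/2-1)(F\&B+B-3W)$, as in the published table; this is a back-of-the-envelope aid, not a scheduler.

```python
def pipeline_bubbles(pp, f, b, w, f_and_b):
    """Bubble time for each method in Table 2, in the same unit as the inputs.

    pp      : number of pipeline stages
    f, b, w : forward, full-backward, and backward-for-weights chunk times
    f_and_b : time of one mutually overlapped forward+backward chunk pair
    """
    if pp % 2 != 0:
        raise ValueError("DualPipe assumes an even number of pipeline stages")
    return {
        "1F1B": (pp - 1) * (f + b),
        "ZB1P": (pp - 1) * (f + b - 2 * w),
        "DualPipe": (pp / 2 - 1) * (f_and_b + b - 3 * w),
    }


def activation_memory(pp):
    """Peak activation memory in units of one stage's activations (Table 2)."""
    return {"1F1B": pp, "ZB1P": pp, "DualPipe": pp + 1}


# Hypothetical timings (arbitrary units); only the 16 stages match the text.
bubbles = pipeline_bubbles(pp=16, f=1.0, b=2.0, w=1.0, f_and_b=2.5)
memory = activation_memory(pp=16)
extra_activation = memory["DualPipe"] / memory["1F1B"] - 1  # equals 1/PP

for method, bubble in bubbles.items():
    print(f"{method:9s} bubble={bubble:5.1f} activation={memory[method]}")
```

With these inputs the relative activation increase is exactly $1/16$, which matches the $\frac{1}{PP}$ statement in the text.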

3.2.2. Efficient Implementation of Cross-Node All-to-All Communication

3.2.2. 跨节点全对全通信的高效实现

In order to ensure sufficient computational performance for DualPipe, we customize efficient cross-node all-to-all communication kernels (including dispatching and combining) to conserve the number of SMs dedicated to communication. The implementation of the kernels is co-designed with the MoE gating algorithm and the network topology of our cluster. To be specific, in our cluster, cross-node GPUs are fully interconnected with IB, and intra-node communications are handled via NVLink. NVLink offers a bandwidth of 160 GB/s, roughly 3.2 times that of IB (50 GB/s). To effectively leverage the different bandwidths of IB and NVLink, we limit each token to be dispatched to at most 4 nodes, thereby reducing IB traffic. For each token, when its routing decision is made, it will first be transmitted via IB to the GPUs with the same in-node index on its target nodes. Once it reaches the target nodes, we will endeavor to ensure that it is instantaneously forwarded via NVLink to the specific GPUs that host its target experts, without being blocked by subsequently arriving tokens. In this way, communications via IB and NVLink are fully overlapped, and each token can efficiently select an average of 3.2 experts per node without incurring additional overhead from NVLink. This implies that, although DeepSeek-V3 selects only 8 routed experts in practice, it can scale up this number to a maximum of 13 experts (4 nodes $\times$ 3.2 experts/node) while preserving the same communication cost. Overall, under such a communication strategy, only 20 SMs are sufficient to fully utilize the bandwidths of IB and NVLink.

为了确保 DualPipe 具备足够的计算性能,我们定制了高效的跨节点全对全通信内核(包括分发和合并),以减少专用于通信的 SM 数量。这些内核的实现与 MoE 门控算法和我们集群的网络拓扑共同设计。具体来说,在我们的集群中,跨节点 GPU 通过 IB 完全互连,节点内通信则通过 NVLink 处理。NVLink 提供 160 GB/s 的带宽,大约是 IB (50 GB/s) 的 3.2 倍。为了有效利用 IB 和 NVLink 的不同带宽,我们将每个 token 分发的节点数限制为最多 4 个,从而减少 IB 流量。对于每个 token,当路由决策完成后,它将首先通过 IB 传输到目标节点上具有相同节点内索引的 GPU。一旦到达目标节点,我们将尽力确保它通过 NVLink 即时转发到托管其目标专家的特定 GPU,而不会被后续到达的 token 阻塞。通过这种方式,IB 和 NVLink 的通信完全重叠,每个 token 可以高效地选择每个节点平均 3.2 个专家,而不会产生额外的 NVLink 开销。这意味着,尽管 DeepSeek-V3 在实践中仅选择 8 个路由专家,但它可以将此数量扩展到最多 13 个专家(4 个节点 $\times$ 3.2 个专家/节点),同时保持相同的通信成本。总体而言,在这种通信策略下,仅需 20 个 SM 即可充分利用 IB and NVLink 的带宽。
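The expert-count bound above is simple arithmetic on the two bandwidths. The sketch below reproduces it; the variable names are illustrative, and the reading that one IB transfer per node can fan out to `nvlink / ib` experts inside that node in the same time is an interpretation of the paragraph, not a measured result.

```python
nvlink_gb_per_s = 160.0     # intra-node bandwidth stated in the text
ib_gb_per_s = 50.0          # cross-node bandwidth stated in the text
max_nodes_per_token = 4     # dispatch limit stated in the text

# While one copy of a token crosses IB, NVLink can move about this many copies.
experts_per_node = nvlink_gb_per_s / ib_gb_per_s

# Upper bound on routed experts at the same IB cost, rounded as in the text.
max_experts_exact = max_nodes_per_token * experts_per_node
max_experts = round(max_experts_exact)

print(f"experts per node: {experts_per_node:.1f}")
print(f"max routed experts: {max_experts_exact:.1f} (about {max_experts})")
```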

In detail, we employ the warp specialization technique (Bauer et al., 2014) and partition 20 SMs into 10 communication channels. During the dispatching process, (1) IB sending, (2) IB-to-NVLink forwarding, and (3) NVLink receiving are handled by respective warps. The number of warps allocated to each communication task is dynamically adjusted according to the actual workload across all SMs. Similarly, during the combining process, (1) NVLink sending, (2) NVLink-to-IB forwarding and accumulation, and (3) IB receiving and accumulation are also handled by dynamically adjusted warps. In addition, both dispatching and combining kernels overlap with the computation stream, so we also consider their impact on other SM computation kernels. Specifically, we employ customized PTX (Parallel Thread Execution) instructions and auto-tune the communication chunk size, which significantly reduces the use of the L2 cache and the interference to other SMs.

具体来说,我们采用了 warp specialization 技术 (Bauer et al., 2014),并将 20 个 SM 划分为 10 个通信通道。在调度过程中,(1) IB 发送、(2) IB 到 NVLink 转发以及 (3) NVLink 接收分别由各自的 warp 处理。分配给每个通信任务的 warp 数量会根据所有 SM 的实际工作负载动态调整。同样,在合并过程中,(1) NVLink 发送、(2) NVLink 到 IB 转发和累加以及 (3) IB 接收和累加也由动态调整的 warp 处理。此外,调度和合并的内核与计算流重叠,因此我们还考虑了它们对其他 SM 计算内核的影响。具体来说,我们采用了定制的 PTX (Parallel Thread Execution) 指令,并自动调整通信块大小,这显著减少了 L2 缓存的使用以及对其他 SM 的干扰。

3.2.3. Extreme Memory Savings with Minimal Overhead

3.2.3. 极低内存占用与最小开销

In order to reduce the memory footprint during training, we employ the following techniques.

为了减少训练期间的内存占用,我们采用了以下技术。

Recomputation of RMSNorm and MLA Up-Projection. We recompute all RMSNorm operations and MLA up-projections during back-propagation, thereby eliminating the need to persistently store their output activations. With a minor overhead, this strategy significantly reduces memory requirements for storing activations.

重新计算 RMSNorm 和 MLA 上投影。我们在反向传播期间重新计算所有 RMSNorm 操作和 MLA 上投影,从而消除了持久存储其输出激活的需求。通过少量的开销,该策略显著减少了存储激活的内存需求。

Exponential Moving Average in CPU. During training, we preserve the Exponential Moving Average (EMA) of the model parameters for early estimation of the model performance after learning rate decay. The EMA parameters are stored in CPU memory and are updated asynchronously after each training step. This method allows us to maintain EMA parameters without incurring additional memory or time overhead.

CPU 中的指数移动平均。在训练过程中,我们保留模型参数的指数移动平均 (EMA),以便在学习率衰减后对模型性能进行早期估计。EMA 参数存储在 CPU 内存中,并在每个训练步骤后异步更新。这种方法使我们能够在不增加额外内存或时间开销的情况下维护 EMA 参数。
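A minimal sketch of the idea follows, assuming NumPy arrays stand in for model parameters and a background thread stands in for the asynchronous host-side update. The class name `CpuEma`, the decay value, and the threading approach are all hypothetical choices for illustration; the text does not describe the actual mechanism.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


class CpuEma:
    """Keeps an exponential moving average of parameters in host memory."""

    def __init__(self, params, decay=0.999):
        self.decay = decay
        # Shadow copy lives on the CPU (plain NumPy arrays here).
        self.shadow = {k: np.array(v, dtype=np.float32) for k, v in params.items()}
        self._pool = ThreadPoolExecutor(max_workers=1)
        self._pending = None

    def _update(self, snapshot):
        # shadow <- decay * shadow + (1 - decay) * param
        for name, value in snapshot.items():
            self.shadow[name] *= self.decay
            self.shadow[name] += (1.0 - self.decay) * value

    def update_async(self, params):
        # The copy stands in for a device-to-host transfer after the step.
        snapshot = {k: np.array(v, dtype=np.float32) for k, v in params.items()}
        self.wait()  # keep updates ordered
        self._pending = self._pool.submit(self._update, snapshot)

    def wait(self):
        if self._pending is not None:
            self._pending.result()
            self._pending = None


params = {"w": np.ones(4, dtype=np.float32)}
ema = CpuEma(params, decay=0.9)
params["w"] = np.zeros(4, dtype=np.float32)  # pretend a training step changed w
ema.update_async(params)                     # training could continue here
ema.wait()
print(ema.shadow["w"])
```

Because the update runs off the training thread and touches only host memory, the training loop in this sketch never waits on it unless `wait()` is called explicitly.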

Shared Embedding and Output Head for Multi-Token Prediction. With the DualPipe strategy, we deploy the shallowest layers (including the embedding layer) and deepest layers (including the output head) of the model on the same PP rank. This arrangement enables the physical sharing of parameters and gradients, of the shared embedding and output head, between the MTP module and the main model. This physical sharing mechanism further enhances our memory efficiency.

共享嵌入和输出头用于多Token预测。通过DualPipe策略,我们将模型的最浅层(包括嵌入层)和最深层(包括输出头)部署在同一个PP rank上。这种安排使得MTP模块和主模型之间能够物理共享共享嵌入和输出头的参数和梯度。这种物理共享机制进一步提高了我们的内存效率。

3.3. FP8 Training

3.3. FP8 训练

Inspired by recent advances in low-precision training (Dettmers et al., 2022; Noune et al., 2022; Peng et al., 2023b), we propose a fine-grained mixed precision framework utilizing the FP8 data format for training DeepSeek-V3. While low-precision training holds great promise, it is often limited by the presence of outliers in activations, weights, and gradients (Fishman et al., 2024; He et al.; Sun et al., 2024). Although significant progress has been made in inference quantization (Frantar et al., 2022; Xiao et al., 2023), there are relatively few studies demonstrating successful application of low-precision techniques in large-scale language model pre-training (Fishman et al., 2024). To address this challenge and effectively extend the dynamic range of the FP8 format, we introduce a fine-grained quantization strategy: tile-wise grouping with $1\times N_{c}$ elements or block-wise grouping with $N_{c}\times N_{c}$ elements. The associated dequantization overhead is largely mitigated under our increased-precision accumulation process, a critical aspect for achieving accurate FP8 General Matrix Multiplication (GEMM). Moreover, to further reduce memory and communication overhead in MoE training, we cache and dispatch activations in FP8, while storing low-precision optimizer states in BF16. We validate the proposed FP8 mixed precision framework on two model scales similar to DeepSeek-V2-Lite and DeepSeek-V2, training for approximately 1 trillion tokens (see more details in Appendix B.1). Notably, compared with the BF16 baseline, the relative loss error of our FP8-training model remains consistently below $0.25\%$, a level well within the acceptable range of training randomness.

受低精度训练(Dettmers et al., 2022; Noune et al., 2022; Peng et al., 2023b)最新进展的启发,我们提出了一种利用 FP8 数据格式的细粒度混合精度框架,用于训练 DeepSeek-V3。虽然低精度训练具有巨大的潜力,但它通常受到激活值、权重和梯度中异常值的限制(Fishman et al., 2024; He et al.; Sun et al., 2024)。尽管在推理量化方面取得了显著进展(Frantar et al., 2022; Xiao et al., 2023),但在大规模语言模型预训练中成功应用低精度技术的研究相对较少(Fishman et al., 2024)。为了解决这一挑战并有效扩展 FP8 格式的动态范围,我们引入了一种细粒度的量化策略:使用 $1\times N_{c}$ 元素的瓦片分组或 $N_{c}\times N_{c}$ 元素的块分组。在我们的增加精度累积过程中,相关的反量化开销得到了大幅缓解,这是实现精确 FP8 通用矩阵乘法(GEMM)的关键。此外,为了进一步减少 MoE 训练中的内存和通信开销,我们以 FP8 缓存和分发激活值,同时以 BF16 存储低精度优化器状态。我们在与 DeepSeek-V2-Lite 和 DeepSeek-V2 相似的两个模型规模上验证了所提出的 FP8 混合精度框架,训练了大约 1 万亿个 token(更多细节见附录 B.1)。值得注意的是,与 BF16 基线相比,我们的 FP8 训练模型的相对损失误差始终保持在 $0.25\%$ 以下,这一水平完全在训练随机性的可接受范围内。

Figure 6 | The overall mixed precision framework with FP8 data format. For clarification, only the Linear operator is illustrated.

图 6 | 使用 FP8 数据格式的整体混合精度框架。为清晰起见,仅展示了线性算子。

3.3.1. Mixed Precision Framework

3.3.1. 混合精度框架

Building upon widely adopted techniques in low-precision training (Kalamkar et al., 2019; Narang et al., 2017), we propose a mixed precision framework for FP8 training. In this framework, most compute-dense operations are conducted in FP8, while a few key operations are strategically maintained in their original data formats to balance training efficiency and numerical stability. The overall framework is illustrated in Figure 6.

基于广泛采用的低精度训练技术 (Kalamkar et al., 2019; Narang et al., 2017),我们提出了一种用于 FP8 训练的混合精度框架。在该框架中,大多数计算密集型操作以 FP8 进行,而少数关键操作则策略性地保持其原始数据格式,以平衡训练效率和数值稳定性。整体框架如图 6 所示。

First, in order to accelerate model training, the majority of core computation kernels, i.e., GEMM operations, are implemented in FP8 precision. These GEMM operations accept FP8 tensors as inputs and produce outputs in BF16 or FP32. As depicted in Figure 6, all three GEMMs associated with the Linear operator, namely Fprop (forward pass), Dgrad (activation backward pass), and Wgrad (weight backward pass), are executed in FP8. This design theoretically doubles the computational speed compared with the original BF16 method. Additionally, the FP8 Wgrad GEMM allows activations to be stored in FP8 for use in the backward pass. This significantly reduces memory consumption.

首先,为了加速模型训练,大多数核心计算内核(即 GEMM 操作)都以 FP8 精度实现。这些 GEMM 操作接受 FP8 张量作为输入,并生成 BF16 或 FP32 的输出。如图 6 所示,与 Linear 算子相关的三个 GEMM 操作,即 Fprop(前向传播)、Dgrad(激活反向传播)和 Wgrad(权重反向传播),均在 FP8 中执行。理论上,这种设计使计算速度比原始的 BF16 方法提高了一倍。此外,FP8 Wgrad GEMM 允许将激活存储在 FP8 中,以便在反向传播中使用。这显著减少了内存消耗。

Despite the efficiency advantage of the FP8 format, certain operators still require a higher precision due to their sensitivity to low-precision computations. Besides, some low-cost operators can also utilize a higher precision with a negligible overhead to the overall training cost. For this reason, after careful investigations, we maintain the original precision (e.g., BF16 or FP32) for the following components: the embedding module, the output head, MoE gating modules, normalization operators, and attention operators. These targeted retentions of high precision ensure stable training dynamics for DeepSeek-V3. To further guarantee numerical stability, we store the master weights, weight gradients, and optimizer states in higher precision. While these high-precision components incur some memory overheads, their impact can be minimized through efficient sharding across multiple DP ranks in our distributed training system.

尽管 FP8 格式具有效率优势,但某些算子由于对低精度计算敏感,仍需要更高的精度。此外,一些低成本算子也可以利用更高的精度,而对整体训练成本的影响可以忽略不计。因此,经过仔细研究,我们为以下组件保留了原始精度(例如 BF16 或 FP32):嵌入模块、输出头、MoE 门控模块、归一化算子和注意力算子。这些有针对性的高精度保留确保了 DeepSeek-V3 的训练动态稳定。为了进一步保证数值稳定性,我们将主权重、权重梯度和优化器状态以更高的精度存储。虽然这些高精度组件会带来一些内存开销,但通过在我们的分布式训练系统中跨多个 DP 等级进行高效分片,可以将它们的影响降至最低。

Figure 7 | (a) We propose a fine-grained quantization method to mitigate quantization errors caused by feature outliers; for simplicity, only Fprop is illustrated. (b) In conjunction with our quantization strategy, we improve the FP8 GEMM precision by promoting partial MMA results to CUDA Cores at an interval of $N_{C}=128$ elements for high-precision accumulation.

图 7 | (a) 我们提出了一种细粒度的量化方法,以减轻由特征异常值引起的量化误差;为了简化说明,仅展示了 Fprop。(b) 结合我们的量化策略,我们通过将 FP8 GEMM 精度提升到 CUDA Cores,以 $N_{C}=128$ 个元素的 MMA 间隔进行高精度累加。

3.3.2. Improved Precision from Quantization and Multiplication

3.3.2. 量化和乘法带来的精度提升

Based on our mixed precision FP8 framework, we introduce several strategies to enhance low-precision training accuracy, focusing on both the quantization method and the multiplication process.

基于我们的混合精度 FP8 框架,我们引入了多种策略来提高低精度训练的准确性,重点关注量化方法和乘法过程。

Fine-Grained Quantization. In low-precision training frameworks, overflows and underflows are common challenges due to the limited dynamic range of the FP8 format, which is constrained by its reduced exponent bits. As a standard practice, the input distribution is aligned to the representable range of the FP8 format by scaling the maximum absolute value of the input tensor to the maximum representable value of FP8 (Narang et al., 2017). This method makes low-precision training highly sensitive to activation outliers, which can heavily degrade quantization accuracy. To solve this, we propose a fine-grained quantization method that applies scaling at a more granular level. As illustrated in Figure 7 (a), (1) for activations, we group and scale elements on a 1x128 tile basis (i.e., per token per 128 channels); and (2) for weights, we group and scale elements on a 128x128 block basis (i.e., per 128 input channels per 128 output channels). This approach ensures that the quantization process can better accommodate outliers by adapting the scale according to smaller groups of elements. In Appendix B.2, we further discuss the training instability when we group and scale activations on a block basis in the same way as weights quantization.

细粒度量化。在低精度训练框架中,由于 FP8 格式的动态范围有限,溢出和下溢是常见的挑战,这受到其减少的指数位的限制。作为一种标准做法,输入分布通过将输入张量的最大绝对值缩放到 FP8 的最大可表示值来与 FP8 格式的可表示范围对齐 (Narang et al., 2017)。这种方法使得低精度训练对激活异常值高度敏感,这会严重降低量化精度。为了解决这个问题,我们提出了一种细粒度量化方法,该方法在更细粒度的级别上应用缩放。如图 7 (a) 所示,(1) 对于激活,我们在 1x128 的图块基础上对元素进行分组和缩放(即每个 token 每 128 个通道);(2) 对于权重,我们在 128x128 的块基础上对元素进行分组和缩放(即每 128 个输入通道每 128 个输出通道)。这种方法通过根据较小的元素组调整缩放比例,确保量化过程能够更好地适应异常值。在附录 B.2 中,我们进一步讨论了当我们以与权重量化相同的方式在块基础上对激活进行分组和缩放时的训练不稳定性。
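The grouping described above can be sketched in NumPy as follows. NumPy has no FP8 dtype, so `fake_e4m3` is a hypothetical stand-in that rounds to 3 explicit mantissa bits, clips to the E4M3 maximum of 448, and uses a fixed step below the smallest normal value; it only approximates the real format. The outlier magnitude is deliberately exaggerated so the effect is easy to see.

```python
import numpy as np

FP8_E4M3_MAX = 448.0  # largest finite E4M3 value


def fake_e4m3(x):
    """Approximate E4M3 rounding (illustrative stand-in, not a real FP8 cast)."""
    x = np.clip(x, -FP8_E4M3_MAX, FP8_E4M3_MAX)
    _, exp = np.frexp(x)              # x = mant * 2**exp with 0.5 <= |mant| < 1
    exp = np.maximum(exp, -5)         # below 2**-6 the spacing stops shrinking
    step = np.ldexp(1.0, exp - 4)     # spacing of representable values
    return np.round(x / step) * step


def quantize_tiles(x, tile=128):
    """Activations (tokens, channels): one scale per token per 128 channels."""
    t, c = x.shape
    g = x.reshape(t, c // tile, tile)
    amax = np.abs(g).max(axis=-1, keepdims=True)
    scale = np.where(amax > 0, amax / FP8_E4M3_MAX, 1.0)
    return fake_e4m3(g / scale).reshape(t, c), scale.squeeze(-1)


def quantize_blocks(w, block=128):
    """Weights (in_channels, out_channels): one scale per 128x128 block."""
    i, o = w.shape
    g = w.reshape(i // block, block, o // block, block)
    amax = np.abs(g).max(axis=(1, 3), keepdims=True)
    scale = np.where(amax > 0, amax / FP8_E4M3_MAX, 1.0)
    return fake_e4m3(g / scale).reshape(i, o), scale.reshape(i // block, o // block)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 256)).astype(np.float32)
x[0, 3] = 1e5  # a single exaggerated activation outlier

# Fine-grained: the outlier only affects the scale of its own 1x128 tile.
q, s = quantize_tiles(x)
x_tile = (q.reshape(4, 2, 128) * s[..., None]).reshape(4, 256)

# Per-tensor baseline: the outlier sets the scale for every element.
s_tensor = np.abs(x).max() / FP8_E4M3_MAX
x_tensor = fake_e4m3(x / s_tensor) * s_tensor

err_tile = np.abs(x_tile - x).mean()
err_tensor = np.abs(x_tensor - x).mean()
print(f"mean abs error: per-tile {err_tile:.4f}, per-tensor {err_tensor:.4f}")

w = rng.standard_normal((256, 128)).astype(np.float32)
qw, sw = quantize_blocks(w)
```

In this toy setting the per-tensor scale pushes most values into the coarse low end of the format, while per-tile scaling confines the damage to the one tile that contains the outlier.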

One key modification in our method is the introduction of per-group scaling factors along the inner dimension of GEMM operations. This functionality is not directly supported in the standard FP8 GEMM. However, combined with our precise FP32 accumulation strategy, it can be efficiently implemented.

我们方法的一个关键修改是引入了沿 GEMM 操作内部维度的每组缩放因子。这一功能在标准的 FP8 GEMM 中并不直接支持。然而,结合我们精确的 FP32 累加策略,它可以高效实现。

Notably, our fine-grained quantization strategy is highly consistent with the idea of microscaling formats (Rouhani et al., 2023b), and NVIDIA has announced that the Tensor Cores of its next-generation GPUs (Blackwell series) will support microscaling formats with smaller quantization granularity (NVIDIA, 2024a). We hope our design can serve as a reference for future work to keep pace with the latest GPU architectures.

值得注意的是,我们的细粒度量化策略与微缩放格式 (microscaling formats) 的理念高度一致 (Rouhani et al., 2023b),而 NVIDIA 下一代 GPU (Blackwell 系列) 的 Tensor Cores 已宣布支持具有更小量化粒度的微缩放格式 (NVIDIA, 2024a)。我们希望我们的设计能够为未来的工作提供参考,以跟上最新的 GPU 架构。

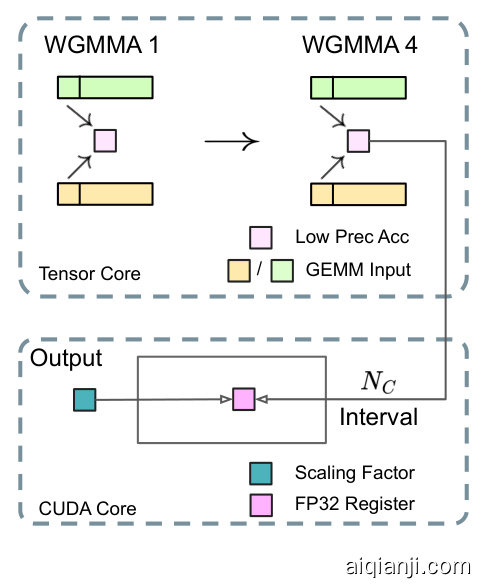

Increasing Accumulation Precision. Low-precision GEMM operations often suffer from underflow issues, and their accuracy largely depends on high-precision accumulation, which is commonly performed in FP32 (Kalamkar et al., 2019; Narang et al., 2017). However, we observe that the accumulation precision of FP8 GEMM on NVIDIA H800 GPUs is limited to retaining around 14 bits, which is significantly lower than FP32 accumulation precision. This problem will become more pronounced when the inner dimension K is large (Wortsman et al., 2023), a typical scenario in large-scale model training where the batch size and model width are increased. Taking GEMM operations of two random matrices with $\mathtt{K}=4096$ for example, in our preliminary test, the limited accumulation precision in Tensor Cores results in a maximum relative error of nearly $2\%$. Despite these problems, the limited accumulation precision is still the default option in a few FP8 frameworks (NVIDIA, 2024b), severely constraining the training accuracy.

提高累加精度。低精度 GEMM 操作经常面临下溢问题,其精度很大程度上依赖于高精度累加,通常以 FP32 精度执行 (Kalamkar et al., 2019; Narang et al., 2017)。然而,我们观察到,在 NVIDIA H800 GPU 上,FP8 GEMM 的累加精度仅限于保留约 14 位,这显著低于 FP32 的累加精度。当内维度 K 较大时 (Wortsman et al., 2023),这一问题将更加明显,这是大规模模型训练中增加批量大小和模型宽度的典型场景。以两个随机矩阵的 GEMM 操作为例,其中 $\mathtt{K}=4096$,在我们的初步测试中,Tensor Cores 中有限的累加精度导致最大相对误差接近 $2\%$。尽管存在这些问题,有限的累加精度仍然是少数 FP8 框架 (NVIDIA, 2024b) 中的默认选项,严重限制了训练精度。

In order to address this issue, we adopt the strategy of promotion to CUDA Cores for higher precision (Thakkar et al., 2023). The process is illustrated in Figure 7 (b). To be specific, during MMA (Matrix Multiply-Accumulate) execution on Tensor Cores, intermediate results are accumulated using the limited bit width. Once an interval of $N_{C}$ is reached, these partial results will be copied to FP32 registers on CUDA Cores, where full-precision FP32 accumulation is performed. As mentioned before, our fine-grained quantization applies per-group scaling factors along the inner dimension K. These scaling factors can be efficiently multiplied on the CUDA Cores as the dequantization process with minimal additional computational cost.

为了解决这个问题,我们采用了将计算提升到CUDA Cores以获取更高精度的策略 (Thakkar et al., 2023)。该过程如图7 (b)所示。具体来说,在Tensor Cores上执行MMA(矩阵乘加)时,中间结果使用有限的位宽进行累加。一旦达到$N_{C}$的间隔,这些部分结果将被复制到CUDA Cores上的FP32寄存器中,并在那里执行全精度的FP32累加。如前所述,我们的细粒度量化沿内维度K应用了每组的缩放因子。这些缩放因子可以在CUDA Cores上高效地乘以反量化过程,且额外的计算成本最小。
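The effect of interval-based promotion can be imitated on the CPU. In the sketch below, `float16` is a stand-in for a narrow accumulator and is not a model of the H800 Tensor Core datapath; the function names and the optional `group_scales` argument (one dequantization factor per interval) are hypothetical.

```python
import numpy as np


def dot_low_precision(a, b):
    """Accumulate every product in float16 (narrow-accumulator stand-in)."""
    acc = np.float16(0.0)
    for x, y in zip(a, b):
        acc = np.float16(acc + np.float16(x * y))
    return float(acc)


def dot_with_promotion(a, b, interval=128, group_scales=None):
    """Accumulate `interval` products narrowly, then promote to an FP32 sum."""
    total = np.float32(0.0)
    for g, start in enumerate(range(0, len(a), interval)):
        partial = np.float16(0.0)
        for x, y in zip(a[start:start + interval], b[start:start + interval]):
            partial = np.float16(partial + np.float16(x * y))
        # Promotion step: apply the group's scale and add in full FP32.
        scale = np.float32(1.0) if group_scales is None else np.float32(group_scales[g])
        total = np.float32(total + scale * np.float32(partial))
    return float(total)


rng = np.random.default_rng(0)
a = rng.random(4096).astype(np.float32)  # inner dimension K = 4096
b = rng.random(4096).astype(np.float32)

ref = float(np.dot(a.astype(np.float64), b.astype(np.float64)))
naive = dot_low_precision(a, b)
promoted = dot_with_promotion(a, b, interval=128)

print(f"relative error without promotion: {abs(naive - ref) / ref:.4%}")
print(f"relative error with promotion:    {abs(promoted - ref) / ref:.4%}")
```

The narrow accumulator loses small addends once the running sum grows large; restarting it every 128 elements keeps each partial sum small enough that little is lost before the FP32 add.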

It is worth noting that this modification reduces the WGMMA (Warpgroup-level Matrix Multiply-Accumulate) instruction issue rate for a single warpgroup. However, on the H800 architecture, it is typical for two WGMMAs to persist concurrently: while one warpgroup performs the promotion operation, the other is able to execute the MMA operation. This design enables overlapping of the two operations, maintaining high utilization of Tensor Cores. Based on our experiments, setting $N_{C}=128$ elements, equivalent to 4 WGMMAs, represents the minimal accumulation interval that can significantly improve precision without introducing substantial overhead.

值得注意的是,这种修改降低了单个 warpgroup 的 WGMMA(Warpgroup-level Matrix Multiply-Accumulate)指令的发出率。然而,在 H800 架构上,通常会有两个 WGMMA 同时存在:当一个 warpgroup 执行提升操作时,另一个 warpgroup 能够执行 MMA 操作。这种设计使得两个操作可以重叠,从而保持 Tensor Core 的高利用率。根据我们的实验,设置 $N_{C}=128$ 个元素(相当于 4 个 WGMMA)是最小的累加间隔,可以在不引入显著开销的情况下显著提高精度。

Mantissa over Exponents. In contrast to the hybrid FP8 format adopted by prior work (NVIDIA, 2024b; Peng et al., 2023b; Sun et al., 2019b), which uses E4M3 (4-bit exponent and 3-bit mantissa) in Fprop and E5M2 (5-bit exponent and 2-bit mantissa) in Dgrad and Wgrad, we adopt the E4M3 format on all tensors for higher precision. We attribute the feasibility of this approach to our fine-grained quantization strategy, i.e., tile and block-wise scaling. By operating on smaller element groups, our methodology effectively shares exponent bits among these grouped elements, mitigating the impact of the limited dynamic range.

尾数优先于指数。与之前工作采用的混合 FP8 格式(NVIDIA, 2024b; Peng et al., 2023b; Sun et al., 2019b)不同,它们在 Fprop 中使用 E4M3(4 位指数和 3 位尾数),在 Dgrad 和 Wgrad 中使用 E5M2(5 位指数和 2 位尾数),我们在所有张量上采用 E4M3 格式以获得更高的精度。我们将这种方法的可行性归因于我们的细粒度量化策略,即分块和块级缩放。通过在较小的元素组上操作,我们的方法有效地在这些分组元素之间共享指数位,从而减轻了有限动态范围的影响。

Online Quantization. Delayed quantization is employed in tensor-wise quantization frameworks (NVIDIA, 2024b; Peng et al., 2023b), which maintains a history of the maximum absolute values across prior iterations to infer the current value. In order to ensure accurate scales and simplify the framework, we calculate the maximum absolute value online for each 1x128 activation tile or $128\mathtt{x}128$ weight block. Based on it, we derive the scaling factor and then quantize the activation or weight online into the FP8 format.

在线量化。张量级量化框架(NVIDIA, 2024b; Peng et al., 2023b)中采用了延迟量化,该方法通过维护先前迭代中的最大绝对值历史来推断当前值。为了确保准确的缩放比例并简化框架,我们在线计算每个1x128激活块或$128\mathtt{x}128$权重块的最大绝对值。基于此,我们推导出缩放因子,然后在线将激活或权重量化为FP8格式。

3.3.3. Low-Precision Storage and Communication

3.3.3. 低精度存储与通信

In conjunction with our FP8 training framework, we further reduce the memory consumption and communication overhead by compressing cached activations and optimizer states into lower-precision formats.

结合我们的 FP8 训练框架,我们通过将缓存的激活值和优化器状态压缩为低精度格式,进一步减少了内存消耗和通信开销。

Low-Precision Optimizer States. We adopt the BF16 data format instead of FP32 to track the first and second moments in the AdamW (Loshchilov and Hutter, 2017) optimizer, without incurring observable performance degradation. However, the master weights (stored by the optimizer) and gradients (used for batch size accumulation) are still retained in FP32 to ensure numerical stability throughout training.

低精度优化器状态。我们采用 BF16 数据格式而非 FP32 来跟踪 AdamW (Loshchilov and Hutter, 2017) 优化器中的一阶和二阶矩,而不会导致明显的性能下降。然而,主权重(由优化器存储)和梯度(用于批量大小累积)仍保留在 FP32 中,以确保整个训练过程中的数值稳定性。

Low-Precision Activation. As illustrated in Figure 6, the Wgrad operation is performed in FP8. To reduce the memory consumption, it is a natural choice to cache activations in FP8 format for the backward pass of the Linear operator. However, special considerations are taken on several operators for low-cost high-precision training:

低精度激活。如图 6 所示,Wgrad 操作在 FP8 中执行。为了减少内存消耗,将激活值以 FP8 格式缓存以用于 Linear 算子的反向传播是一个自然的选择。然而,为了低成本高精度训练,对几个算子进行了特殊考虑:

(1) Inputs of the Linear after the attention operator. These activations are also used in the backward pass of the attention operator, which makes it sensitive to precision. We adopt a customized E5M6 data format exclusively for these activations. Additionally, these activations will be converted from a 1x128 quantization tile to a 128x1 tile in the backward pass. To avoid introducing extra quantization error, all the scaling factors are rounded to integral powers of 2.

(1) 注意力算子后的线性输入。这些激活值也用于注意力算子的反向传播,这使得它对精度敏感。我们采用定制的 E5M6 数据格式专门用于这些激活值。此外,这些激活值在反向传播过程中将从 1x128 量化块转换为 128x1 块。为了避免引入额外的量化误差,所有的缩放因子都取整为 2 的整数幂。

(2) Inputs of the SwiGLU operator in MoE. To further reduce the memory cost, we cache the inputs of the SwiGLU operator and recompute its output in the backward pass. These activations are also stored in FP8 with our fine-grained quantization method, striking a balance between memory efficiency and computational accuracy.

(2) MoE 中 SwiGLU 算子的输入。为了进一步降低内存成本,我们缓存了 SwiGLU 算子的输入,并在反向传播时重新计算其输出。这些激活值也使用我们的细粒度量化方法以 FP8 格式存储,在内存效率和计算精度之间取得了平衡。
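The power-of-2 scaling mentioned in item (1) can be sketched as below. The helper `power_of_two_scale` is hypothetical, and the E4M3 maximum of 448 is used only as an example range because the text does not give the maximum of the E5M6 format. One reading of why this helps is that dividing or multiplying by a power of 2 only shifts the exponent, so changing scales when re-tiling introduces no new rounding.

```python
import math

FP8_E4M3_MAX = 448.0  # example format maximum; the E5M6 maximum is not given


def power_of_two_scale(amax, fmt_max=FP8_E4M3_MAX):
    """Smallest power-of-2 scale s such that amax / s fits within fmt_max."""
    if amax == 0.0:
        return 1.0
    return 2.0 ** math.ceil(math.log2(amax / fmt_max))


scale_a = power_of_two_scale(1000.0)  # 1000 / 448 is about 2.23, so scale is 4
scale_b = power_of_two_scale(3.0)     # small tile maximum gives a scale below 1

# Rescaling by a power of 2 round-trips exactly in binary floating point.
value = 0.3
round_trip = (value / scale_a) * scale_a

print(scale_a, scale_b, round_trip == value)
```

The cost of this choice is that up to half of the format's range can go unused in a tile, since the scale is rounded up rather than matched exactly to the tile maximum.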

Low-Precision Communication. Communication bandwidth is a critical bottleneck in the training of MoE models. To alleviate this challenge, we quantize the activation before MoE up-projections into FP8 and then apply dispatch components, which is compatible with FP8 Fprop in MoE up-projections. Like the inputs of the Linear after the attention operator, scaling factors for this activation are integral powers of 2. A similar strategy is applied to the activation gradient before MoE down-projections. For both the forward and backward combine components, we retain them in BF16 to preserve training precision in critical parts of the training pipeline.

低精度通信。通信带宽是 MoE 模型训练中的一个关键瓶颈。为了缓解这一挑战,我们在 MoE 上投影之前将激活量化为 FP8,然后应用调度组件,这与 MoE 上投影中的 FP8 Fprop 兼容。与注意力算子后的线性输入类似,此激活的缩放因子是 2 的整数幂。类似的策略也应用于 MoE 下投影之前的激活梯度。对于前向和后向组合组件,我们将其保留为 BF16,以保持训练管道关键部分的训练精度。

3.4. Inference and Deployment

3.4. 推理与部署

We deploy DeepSeek-V3 on the H800 cluster, where GPUs within each node are interconnected using NVLink, and all GPUs across the cluster are fully interconnected via IB. To simultaneously ensure both the Service-Level Objective (SLO) for online services and high throughput, we employ the following deployment strategy that separates the prefilling and decoding stages.

我们在 H800 集群上部署了 DeepSeek-V3,其中每个节点内的 GPU 通过 NVLink 互连,集群中的所有 GPU 通过 IB 完全互连。为了同时确保在线服务的服务级别目标 (SLO) 和高吞吐量,我们采用了以下部署策略,将预填充和解码阶段分开。

3.4.1. Prefilling

3.4.1. 预填充

The minimum deployment unit of the prefilling stage consists of 4 nodes with 32 GPUs. The attention part employs 4-way Tensor Parallelism (TP4) with Sequence Parallelism (SP), combined with 8-way Data Parallelism (DP8). Its small TP size of 4 limits the overhead of TP communication. For the MoE part, we use 32-way Expert Parallelism (EP32), which ensures that each expert processes a sufficiently large batch size, thereby enhancing computational efficiency. For the MoE all-to-all communication, we use the same method as in training: first transferring tokens across nodes via IB, and then forwarding among the intra-node GPUs via NVLink. In particular, we use 1-way Tensor Parallelism for the dense MLPs in shallow layers to save TP communication.

预填充阶段的最小部署单元由4个节点和32个GPU组成。注意力部分采用4路张量并行(TP4)与序列并行(SP)结合,同时使用8路数据并行(DP8)。其较小的TP大小为4,限制了TP通信的开销。对于MoE部分,我们使用32路专家并行(EP32),确保每个专家处理足够大的批量大小,从而提高计算效率。对于MoE的全对全通信,我们使用与训练时相同的方法:首先通过IB在节点之间传输Token,然后通过NVLink在节点内的GPU之间转发。特别地,我们在浅层使用1路张量并行来处理密集的MLP,以节省TP通信。
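The two-hop all-to-all path described above (IB across nodes, then NVLink inside the node) can be sketched as follows. The sketch assumes 8 GPUs per node (32 GPUs over 4 nodes), a contiguous rank numbering, and that the IB hop lands on the GPU with the same in-node index on the destination node; the function `route` is hypothetical and only illustrates the hop order.

```python
GPUS_PER_NODE = 8  # assumed: 32 GPUs / 4 nodes in the prefilling unit

def route(src_rank, dst_rank):
    """Return the list of (link, from_rank, to_rank) hops for one token."""
    src_node, src_local = divmod(src_rank, GPUS_PER_NODE)
    dst_node, _ = divmod(dst_rank, GPUS_PER_NODE)
    hops = []
    current = src_rank
    if src_node != dst_node:
        # Hop 1: cross-node transfer over IB to the same in-node index (assumption).
        relay = dst_node * GPUS_PER_NODE + src_local
        hops.append(("IB", current, relay))
        current = relay
    if current != dst_rank:
        # Hop 2: intra-node forwarding over NVLink to the GPU hosting the expert.
        hops.append(("NVLink", current, dst_rank))
    return hops
```

For example, under these assumptions a token on rank 3 routed to an expert on rank 29 would first cross IB to rank 27 and then be forwarded over NVLink to rank 29.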

To achieve load balancing among different experts in the MoE part, we need to ensure that each GPU processes approximately the same number of tokens. To this end, we introduce a deployment strategy of redundant experts, which duplicates high-load experts and deploys them redundantly. The high-load experts are detected based on statistics collected during the online deployment and are adjusted periodically (e.g., every 10 minutes). After determining the set of redundant experts, we carefully rearrange experts among GPUs within a node based on the observed loads, striving to balance the load across GPUs as much as possible without increasing the cross-node all-to-all communication overhead. For the deployment of DeepSeek-V3, we set 32 redundant experts for the prefilling stage. For each GPU, besides the original 8 experts it hosts, it will also host one additional redundant expert.

为了实现 MoE 部分中不同专家之间的负载均衡,我们需要确保每个 GPU 处理大致相同数量的 Token。为此,我们引入了一种冗余专家的部署策略,即复制高负载专家并进行冗余部署。高负载专家是基于在线部署期间收集的统计数据检测的,并定期进行调整(例如,每 10 分钟一次)。在确定冗余专家集后,我们根据观察到的负载情况,在节点内的 GPU 之间仔细重新安排专家,力求在不增加跨节点全对全通信开销的情况下,尽可能平衡 GPU 的负载。对于 DeepSeek-V3 的部署,我们为预填充阶段设置了 32 个冗余专家。对于每个 GPU,除了它原本承载的 8 个专家外,还将承载一个额外的冗余专家。
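One way to picture the redundant-expert strategy is a greedy placement inside a single node: duplicate the most heavily loaded experts, assume traffic splits evenly between the copies, and then assign replicas to the least-loaded GPU that still has a free slot. This is only a sketch under those assumptions; the function `place_with_redundancy`, the even split, and the greedy heuristic are illustrative and are not claimed to be the rearrangement algorithm used in deployment.

```python
import heapq

def place_with_redundancy(loads, num_gpus, num_redundant, slots_per_gpu):
    """Duplicate the hottest experts, then greedily balance replicas across GPUs.

    loads: dict mapping expert id -> observed token count.
    Returns (assignment: gpu -> list of expert ids, list of per-GPU loads).
    """
    if len(loads) + num_redundant > num_gpus * slots_per_gpu:
        raise ValueError("not enough expert slots for all replicas")
    hottest = set(sorted(loads, key=loads.get, reverse=True)[:num_redundant])
    replicas = []
    for expert, load in loads.items():
        copies = 2 if expert in hottest else 1
        # Assumption: traffic splits evenly across the copies of an expert.
        replicas += [(load / copies, expert)] * copies
    replicas.sort(reverse=True)  # place the heaviest replicas first

    heap = [(0.0, gpu) for gpu in range(num_gpus)]  # (current load, gpu id)
    assignment = {gpu: [] for gpu in range(num_gpus)}
    gpu_loads = [0.0] * num_gpus
    for load, expert in replicas:
        _, gpu = heapq.heappop(heap)  # least-loaded GPU that still has a free slot
        assignment[gpu].append(expert)
        gpu_loads[gpu] += load
        if len(assignment[gpu]) < slots_per_gpu:
            heapq.heappush(heap, (gpu_loads[gpu], gpu))
    return assignment, gpu_loads

# Toy example: 16 experts, 2 GPUs, 2 redundant experts, 9 slots per GPU.
toy_loads = {e: 10.0 for e in range(16)}
toy_loads[0] = 100.0
toy_assignment, toy_gpu_loads = place_with_redundancy(toy_loads, 2, 2, 9)
```

With 9 slots per GPU, the toy setup mirrors the "8 original experts plus one redundant expert" layout described above, only at a smaller scale.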

Furthermore, in the prefilling stage, to improve the throughput and hide the overhead of all-to-all and TP communication, we simultaneously process two micro-batches with similar computational workloads, overlapping the attention and MoE of one micro-batch with the dispatch and combine of another.

此外,在预填充阶段,为了提高吞吐量并隐藏 all-to-all 和 TP 通信的开销,我们同时处理两个计算工作量相似的微批次,将一个微批次的注意力机制和 MoE 与另一个微批次的调度和组合重叠。
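The benefit of pairing two micro-batches can be estimated with a simple lockstep model, in which one micro-batch runs a compute phase (attention or MoE) while the other runs a communication phase (dispatch or combine), and each slot lasts as long as the slower of the two. The phase durations and both function names below are hypothetical; the model ignores kernel launch overhead and SM contention.

```python
def layer_time_serial(attn, dispatch, moe, combine):
    """Two micro-batches executed back to back with no overlap."""
    return 2 * (attn + dispatch + moe + combine)

def layer_time_overlapped(attn, dispatch, moe, combine):
    """Lockstep overlap: micro-batch B runs one phase behind micro-batch A.

    Slot pairs (A phase, B phase):
    (attn, combine), (dispatch, attn), (moe, dispatch), (combine, moe).
    """
    return (max(attn, combine) + max(dispatch, attn)
            + max(moe, dispatch) + max(combine, moe))

serial = layer_time_serial(attn=4, dispatch=2, moe=4, combine=2)
overlapped = layer_time_overlapped(attn=4, dispatch=2, moe=4, combine=2)
```

In this toy setting every communication phase is shorter than the compute phase it is paired with, so the overlapped time equals the pure compute time of the two micro-batches.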

Finally, we are exploring a dynamic redundancy strategy for experts, where each GPU hosts more experts (e.g., 16 experts), but only 9 will be activated during each inference step. Before the all-to-all operation at each layer begins, we compute the globally optimal routing scheme on the fly. Given the substantial computation involved in the prefilling stage, the overhead of computing this routing scheme is almost negligible.

最后,我们正在探索一种动态冗余策略,即每个 GPU 上托管更多的专家(例如 16 个专家),但在每次推理步骤中只激活 9 个。在每层的 all-to-all 操作开始之前,我们会动态计算全局最优的路由方案。考虑到预填充阶段涉及的大量计算,计算该路由方案的开销几乎可以忽略不计。

3.4.2. Decoding

3.4.2. 解码

During decoding, we treat the shared expert as a routed one. From this perspective, each token will select 9 experts during routing, where the shared expert is regarded as a heavy-load one that will always be selected. The minimum deployment unit of the decoding stage consists of 40 nodes with 320 GPUs. The attention part employs TP4 with SP, combined with DP80, while the MoE part uses EP320. For the MoE part, each GPU hosts only one expert, and 64 GPUs are responsible for hosting redundant experts and shared experts. All-to-all communication of the dispatch and combine parts is performed via direct point-to-point transfers over IB to achieve low latency. Additionally, we leverage the IBGDA (NVIDIA, 2022) technology to further minimize latency and enhance communication efficiency.

在解码过程中,我们将共享专家视为一个路由专家。从这个角度来看,每个 Token 在路由时会选择 9 个专家,其中共享专家被视为一个高负载的专家,始终会被选中。解码阶段的最小部署单元由 40 个节点和 320 个 GPU 组成。注意力部分采用 TP4 和 SP 结合 DP80,而 MoE 部分使用 EP320。对于 MoE 部分,每个 GPU 仅托管一个专家,64 个 GPU 负责托管冗余专家和共享专家。调度和组合部分的全对全通信通过 IB 的直接点对点传输进行,以实现低延迟。此外,我们利用 IBGDA (NVIDIA, 2022) 技术进一步减少延迟并提高通信效率。
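The numbers in the decoding configuration can be cross-checked with a few lines of arithmetic. The helper `check_unit` is hypothetical; it only verifies that the stated parallel degrees cover the deployment unit.

```python
def check_unit(nodes, gpus, tp, dp, ep):
    """Return GPUs per node after checking that the parallel degrees tile the unit."""
    assert gpus % nodes == 0, "GPUs must divide evenly across nodes"
    assert tp * dp == gpus, "attention: TP x DP must cover every GPU"
    assert ep == gpus, "MoE: one expert-parallel rank per GPU"
    return gpus // nodes

# Decoding unit: 40 nodes, 320 GPUs, TP4 + DP80 for attention, EP320 for MoE.
gpus_per_node = check_unit(nodes=40, gpus=320, tp=4, dp=80, ep=320)

# 64 GPUs host redundant and shared experts; the remainder host one routed expert each.
routed_expert_gpus = 320 - 64
```

The remaining 256 GPUs agree with the prefilling description, where 32 GPUs each host 8 original experts.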

Similar to prefilling, we re-determine the set of redundant experts at a regular interval, based on the statistical expert load from our online service. However, we do not need to rearrange experts since each GPU only hosts one expert. We are also exploring the dynamic redundancy strategy for decoding. However, this requires more careful optimization of the algorithm that computes the globally optimal routing scheme and the fusion with the dispatch kernel to reduce overhead.

与预填充类似,我们根据在线服务的统计专家负载,定期确定某个区间内的冗余专家集。然而,由于每个 GPU 只托管一个专家,我们不需要重新排列专家。我们还在探索解码的动态冗余策略。然而,这需要对计算全局最优路由方案的算法进行更仔细的优化,并与调度内核融合以减少开销。

Additionally, to enhance throughput and hide the overhead of all-to-all communication, we are also exploring processing two micro-batches with similar computational workloads simultaneously in the decoding stage. Unlike prefilling, attention consumes a larger portion of time in the decoding stage. Therefore, we overlap the attention of one micro-batch with the dispatch+MoE+combine of another. In the decoding stage, the batch size per expert is relatively small (usually within 256 tokens), and the bottleneck is memory access rather than computation. Since the MoE part only needs to load the parameters of one expert, the memory access overhead is minimal, so using fewer SMs will not significantly affect the overall performance. Therefore, to avoid impacting the computation speed of the attention part, we can allocate only a small portion of SMs to dispatch+MoE+combine.

此外,为了提高吞吐量并隐藏全对全通信的开销,我们还在探索在解码阶段同时处理两个计算工作量相似的微批次。与预填充不同,注意力在解码阶段消耗的时间更多。因此,我们将一个微批次的注意力与另一个微批次的调度+MoE+组合重叠。在解码阶段,每个专家的批次大小相对较小(通常在256个Token以内),瓶颈是内存访问而非计算。由于MoE部分只需要加载一个专家的参数,内存访问开销很小,因此使用较少的SM不会显著影响整体性能。因此,为了避免影响注意力部分的计算速度,我们可以只分配一小部分SM给调度+MoE+组合。

3.5. Suggestions on Hardware Design

3.5. 硬件设计建议

Based on our implementation of the all-to-all communication and FP8 training scheme, we propose the following suggestions on chip design to AI hardware vendors.

基于我们对全对全通信和 FP8 训练方案的实现,我们向 AI 硬件供应商提出以下芯片设计建议。

3.5.1. Communication Hardware

3.5.1. 通信硬件

In DeepSeek-V3, we implement the overlap between computation and communication to hide the communication latency during computation. This significantly reduces the dependency on communication bandwidth compared to serial computation and communication. However, the current communication implementation relies on expensive SMs (e.g., we allocate 20 out of the 132 SMs available in the H800 GPU for this purpose), which will limit the computational throughput. Moreover, using SMs for communication results in significant inefficiencies, as tensor cores remain entirely under-utilized.

在 DeepSeek-V3 中,我们实现了计算与通信的重叠,以隐藏计算过程中的通信延迟。与串行计算和通信相比,这显著降低了对通信带宽的依赖。然而,当前的通信实现依赖于昂贵的 SMs(例如,我们在 H800 GPU 的 132 个可用 SMs 中分配了 20 个用于此目的),这将限制计算吞吐量。此外,使用 SMs 进行通信会导致显著的效率低下,因为张量核心(tensor cores)完全未被充分利用。

Currently, the SMs primarily perform the following tasks for all-to-all communication:

目前,SMs 主要执行以下全对全通信任务:

We aspire to see future vendors developing hardware that offloads these communication tasks from the valuable computation unit SM, serving as a GPU co-processor or a network co-processor like NVIDIA SHARP (Graham et al., 2016). Furthermore, to reduce application programming complexity, we aim for this hardware to unify the IB (scale-out) and NVLink (scale-up) networks from the perspective of the computation units. With this unified interface, computation units can easily accomplish operations such as read, write, multicast, and reduce across the entire IB-NVLink-unified domain by submitting communication requests based on simple primitives.

我们期望未来的供应商能够开发出将通信任务从宝贵的计算单元 SM 中卸载的硬件,作为 GPU 协处理器或网络协处理器,例如 NVIDIA SHARP (Graham 等人, 2016)。此外,为了降低应用程序编程的复杂性,我们希望这种硬件能够从计算单元的角度统一 IB(横向扩展)和 NVLink(纵向扩展)网络。通过这种统一的接口,计算单元可以通过提交基于简单原语的通信请求,轻松完成整个 IB-NVLink 统一域中的读取、写入、多播和归约等操作。

3.5.2. Compute Hardware

3.5.2. 计算硬件

Higher FP8 GEMM Accumulation Precision in Tensor Cores. In the current Tensor Core implementation of the NVIDIA Hopper architecture, FP8 GEMM (General Matrix Multiply) employs fixed-point accumulation, aligning the mantissa products by right-shifting based on the maximum exponent before addition. Our experiments reveal that it only uses the highest 14 bits of each mantissa product after sign-fill right shifting, and truncates bits exceeding this range. However, for example, to achieve precise FP32 results from the accumulation of 32 FP8 $\times$ FP8 multiplications, at least 34-bit precision is required. Thus, we recommend that future chip designs increase accumulation precision in Tensor Cores to support full-precision accumulation, or select an appropriate accumulation bit-width according to the accuracy requirements of training and inference algorithms. This approach ensures that errors remain within acceptable bounds while maintaining computational efficiency.

Tensor Core 中更高的 FP8 GEMM 累加精度。在 NVIDIA Hopper 架构的当前 Tensor Core 实现中,FP8 GEMM(通用矩阵乘法)采用定点累加,通过在加法前根据最大指数右移来对齐尾数乘积。我们的实验表明,它在符号填充右移后仅使用每个尾数乘积的最高 14 位,并截断超出此范围的位。然而,例如,要从 32 个 FP8×FP8 乘法的累加中获得精确的 FP32 结果,至少需要 34 位精度。因此,我们建议未来的芯片设计提高 Tensor Core 中的累加精度,以支持全精度累加,或根据训练和推理算法的精度要求选择合适的累加位宽。这种方法在保持计算效率的同时,确保误差保持在可接受的范围内。
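The effect of a limited accumulation width can be illustrated with a simplified model: align every product to the largest exponent in the group, keep only a fixed number of bits below that exponent, and sum. The function `aligned_truncated_sum` and the example values are hypothetical, and the model is not a description of the actual Hopper datapath; it only shows how small addends vanish when few bits are retained.

```python
import math

def aligned_truncated_sum(products, kept_bits):
    """Sum products after aligning to the largest exponent and keeping `kept_bits` bits."""
    nonzero = [p for p in products if p != 0.0]
    if not nonzero:
        return 0.0
    # frexp returns p = m * 2**e with 0.5 <= |m| < 1, so e marks the top bit position.
    max_exp = max(math.frexp(p)[1] for p in nonzero)
    lsb = 2.0 ** (max_exp - kept_bits)  # weight of the lowest retained bit
    # floor mimics an arithmetic (sign-filling) right shift, which rounds toward -inf.
    return sum(math.floor(p / lsb) for p in products) * lsb

# One large product plus 31 small ones, 32 products in total.
products = [1.0] + [2.0 ** -20] * 31
narrow = aligned_truncated_sum(products, kept_bits=14)
wide = aligned_truncated_sum(products, kept_bits=34)
exact = sum(products)
```

In this example the 14-bit sum drops all 31 small contributions, while the 34-bit sum reproduces the exact result.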

Support for Tile- and Block-Wise Quantization. Current GPUs only support per-tensor quantization, lacking native support for fine-grained quantization like our tile- and block-wise quantization. In the current implementation, when the $N_{C}$ interval is reached, the partial results will be copied from Tensor Cores to CUDA cores, multiplied by the scaling factors, and added to FP32 registers on CUDA cores. Although the dequantization overhead is significantly mitigated when combined with our precise FP32 accumulation strategy, the frequent data movements between Tensor Cores and CUDA cores still limit the computational efficiency. Therefore, we recommend that future chips support fine-grained quantization by enabling Tensor Cores to receive scaling factors and implement MMA with group scaling. In this way, the whole partial sum accumulation and dequantization can be completed directly inside Tensor Cores until the final result is produced, avoiding frequent data movements.

支持分块量化。当前的 GPU 仅支持张量级量化,缺乏对我们分块量化等细粒度量化的原生支持。在当前实现中,当达到 $N_{C}$ 间隔时,部分结果将从 Tensor Core 复制到 CUDA Core,乘以缩放因子,并添加到 CUDA Core 上的 FP32 寄存器中。尽管结合我们精确的 FP32 累加策略,反量化开销得到了显著缓解,但 Tensor Core 和 CUDA Core 之间的频繁数据移动仍然限制了计算效率。因此,我们建议未来的芯片通过使 Tensor Core 能够接收缩放因子并实现分组缩放的 MMA 来支持细粒度量化。这样,整个部分和累加和反量化可以直接在 Tensor Core 内部完成,直到生成最终结果,从而避免频繁的数据移动。
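The group-scaled accumulation described above can be sketched for a single dot product. Each group along the inner dimension gets its own scaling factor, a partial sum is formed over the quantized values, and that partial sum is dequantized with the two scales before being added to a high-precision accumulator. The group size of 128, the use of integer rounding as a stand-in for the FP8 cast, and both function names are assumptions for illustration.

```python
def quantize_groups(vec, group=128, qmax=448.0):
    """Split vec into groups; return (quantized groups, per-group scales)."""
    quantized, scales = [], []
    for i in range(0, len(vec), group):
        chunk = vec[i:i + group]
        amax = max(abs(x) for x in chunk) or 1.0  # avoid a zero scale
        scale = amax / qmax
        scales.append(scale)
        # Integer rounding stands in for the FP8 cast (assumption).
        quantized.append([round(x / scale) for x in chunk])
    return quantized, scales

def group_scaled_dot(a, b, group=128):
    """Dot product with per-group dequantization of partial sums."""
    qa, sa = quantize_groups(a, group)
    qb, sb = quantize_groups(b, group)
    acc = 0.0  # high-precision accumulator (the role of the FP32 registers)
    for ga, gb, scale_a, scale_b in zip(qa, qb, sa, sb):
        partial = sum(x * y for x, y in zip(ga, gb))  # low-precision MMA over one group
        acc += partial * scale_a * scale_b  # dequantize, then accumulate
    return acc

a = [0.01 * i for i in range(256)]
b = [1.0] * 128 + [100.0] * 128
approx = group_scaled_dot(a, b)
exact = sum(x * y for x, y in zip(a, b))
```

The line `acc += partial * scale_a * scale_b` is the step that currently requires moving partial results out of Tensor Cores; the recommendation above amounts to performing it inside the MMA unit.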

Support for Online Quantization. The current implementations struggle to effectively support online quantization, despite its effectiveness demonstrated in our research. In the existing process, we need to read 128 BF16 activation values (the output of the previous computation) from HBM (High Bandwidth Memory) for quantization, and the quantized FP8 values are then written back to HBM, only to be read again for MMA. To address this inefficiency, we recommend that future chips integrate FP8 cast and TMA (Tensor Memory Accelerator) access into a single fused operation, so quantization can be completed during the transfer of activations from global memory to shared memory, avoiding frequent memory reads and writes. We also recommend supporting a warp-level cast instruction for speedup, which further facilitates the better fusion of layer normalization and FP8 cast. Alternatively, a near-memory computing approach can be adopted, where compute logic is placed near the HBM. In this case, BF16 elements can be cast to FP8 directly as they are read from HBM into the GPU, reducing off-chip memory access by roughly 50%.

支持在线量化。尽管我们的研究证明了在线量化的有效性,但当前的实现难以有效支持这一功能。在现有流程中,我们需要从高带宽内存 (HBM) 中读取 128 个 BF16 激活值(前一次计算的输出)进行量化,然后将量化后的 FP8 值写回 HBM,再重新读取以进行矩阵乘法累加 (MMA)。为了解决这种低效问题,我们建议未来的芯片将 FP8 转换和张量内存加速器 (TMA) 访问集成到一个融合操作中,以便在将激活值从全局内存传输到共享内存的过程中完成量化,从而避免频繁的内存读写。我们还建议支持 warp 级别的转换指令以加速操作,这进一步促进了层归一化和 FP8 转换的更好融合。或者,可以采用近内存计算的方法,将计算逻辑放置在 HBM 附近。在这种情况下,BF16 元素在从 HBM 读取到 GPU 时可以直接转换为 FP8,从而将片外内存访问减少约 50%。
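One way to arrive at the figure of roughly 50% is to count the HBM bytes moved per group of 128 activations. The accounting below is an assumption about how the estimate could be formed (2 bytes per BF16 value, 1 byte per FP8 value, scaling factors ignored), not a statement of the authors' exact calculation.

```python
BF16_BYTES = 2
FP8_BYTES = 1
GROUP = 128  # activation values quantized together

# Existing flow: read BF16, write FP8 back to HBM, read FP8 again for MMA.
current_bytes = GROUP * BF16_BYTES + GROUP * FP8_BYTES + GROUP * FP8_BYTES

# Cast-on-read flow: BF16 values leave HBM once and are converted on the way in.
fused_bytes = GROUP * BF16_BYTES

reduction = 1 - fused_bytes / current_bytes
```

Under these assumptions the existing flow moves 512 bytes per group and the cast-on-read flow moves 256 bytes, which is a reduction of one half.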

Support for Transposed GEMM Operations. The current architecture makes it cumbersome to fuse matrix transposition with GEMM operations. In our workflow, activations during the forward pass are quantized into 1x128 FP8 tiles and stored. During the backward pass, the matrix needs to be read out, dequantized, transposed, re-quantized into 128x1 tiles, and stored in HBM. To reduce memory operations, we recommend that future chips enable direct transposed reads of matrices from shared memory before MMA operation, for those precisions required in both training and inference. Combined with the fusion of FP8 format conversion and TMA access, this enhancement will significantly streamline the quantization workflow.

支持转置的 GEMM 操作。当前的架构使得将矩阵转置与 GEMM 操作融合变得繁琐。在我们的工作流程中,前向传播中的激活被量化为 1x128 FP8 块并存储。在反向传播期间,矩阵需要被读取、反量化、转置、重新量化为 128x1 块,并存储在 HBM 中。为了减少内存操作,我们建议未来的芯片在 MMA 操作之前,能够直接从共享内存中进行矩阵的转置读取,以满足训练和推理中所需的精度。结合 FP8 格式转换和 TMA access 的融合,这一增强将显著简化量化工作流程。
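The reason re-quantization is needed at all can be seen from how the scaling groups change between the two tile shapes. The sketch below computes per-tile scales for row tiles and for column tiles of the same matrix, using a tile length of 4 instead of 128 to keep the example small. The function `tile_scales` and the scale definition (`max|x| / 448`) are assumptions for illustration.

```python
def tile_scales(matrix, tile_rows, tile_cols, qmax=448.0):
    """Per-tile scale = max|x| / qmax for tiles of shape tile_rows x tile_cols."""
    rows, cols = len(matrix), len(matrix[0])
    scales = {}
    for r0 in range(0, rows, tile_rows):
        for c0 in range(0, cols, tile_cols):
            amax = max(abs(matrix[r][c])
                       for r in range(r0, min(r0 + tile_rows, rows))
                       for c in range(c0, min(c0 + tile_cols, cols)))
            scales[(r0, c0)] = amax / qmax  # keyed by the tile's top-left corner
    return scales

activations = [
    [1.0, 2.0, 3.0, 4.0],
    [10.0, 20.0, 30.0, 40.0],
    [0.1, 0.2, 0.3, 0.4],
    [5.0, 6.0, 7.0, 8.0],
]
forward_scales = tile_scales(activations, 1, 4)   # stand-in for 1x128 tiles
backward_scales = tile_scales(activations, 4, 1)  # stand-in for 128x1 tiles
```

Each element belongs to a different group in the two layouts, so its scale generally differs, and values quantized for one layout cannot simply be reinterpreted in the other.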

4. Pre-Training

4. 预训练

4.1. Data Construction

4.1. 数据构建

Compared with DeepSeek-V2, we optimize the pre-training corpus by enhancing the ratio of mathematical and programming samples, while expanding multilingual coverage beyond English and Chinese. Also, our data processing pipeline is refined to minimize redundancy while maintaining corpus diversity. Inspired by Ding et al. (2024), we implement the document packing method for data integrity but do not incorporate cross-sample attention masking during training. Finally, the training corpus for DeepSeek-V3 consists of 14.8T high-quality and diverse tokens in our tokenizer.

与 DeepSeek-V2 相比,我们通过提高数学和编程样本的比例优化了预训练语料库,同时扩展了除英语和中文之外的多语言覆盖范围。此外,我们的数据处理流程经过优化,以在保持语料库多样性的同时最大限度地减少冗余。受 Ding 等人 (2024) 的启发,我们实现了文档打包方法以确保数据完整性,但在训练过程中没有引入跨样本注意力掩码。最终,DeepSeek-V3 的训练语料库在我们的分词器中包含了 14.8T 的高质量和多样化 Token。

In the training process of DeepSeek-Coder-V2 (DeepSeek-AI, 2024a), we observe that the Fill-in-Middle (FIM) strategy does not compromise the next-token prediction capability while enabling the model to accurately predict middle text based on contextual cues. In alignment with DeepSeek-Coder-V2, we also incorporate the FIM strategy in the pre-training of DeepSeek-V3. To be specific, we employ the Prefix-Suffix-Middle (PSM) framework to structure data as follows: