Talk-to-Edit: Fine-Grained Facial Editing via Dialog

Yuming Jiang¹∗ Ziqi Huang¹∗ Xingang Pan² Chen Change Loy¹ Ziwei Liu¹🖂

¹S-Lab, Nanyang Technological University ²The Chinese University of Hong Kong

{yuming002, hu0007qi, ccloy,

ABSTRACT

Facial editing is an important task in vision and graphics with numerous applications. However, existing works cannot deliver continuous, fine-grained editing (e.g., editing a slightly smiling face into a broadly laughing one) through natural interaction with users. In this work, we propose Talk-to-Edit, an interactive facial editing framework that performs fine-grained attribute manipulation through dialog between the user and the system. Our key insight is to model a continual “semantic field” in the GAN latent space. 1) Unlike previous works that regard editing as traversing straight lines in the latent space, we formulate fine-grained editing as finding a curved trajectory that respects the fine-grained attribute landscape of the semantic field. 2) The curvature at each step is location-specific and determined by the input image as well as the user’s language request. 3) To engage users in a meaningful dialog, our system generates language feedback by considering both the user request and the current state of the semantic field.

∗Equal contribution.

We also contribute CelebA-Dialog, a visual-language facial editing dataset to facilitate large-scale study. Specifically, each image has manually annotated fine-grained attribute labels as well as template-based textual descriptions in natural language. Extensive quantitative and qualitative experiments demonstrate the superiority of our framework in terms of 1) the smoothness of fine-grained editing, 2) identity/attribute preservation, and 3) visual photorealism and dialog fluency. Notably, a user study validates that our overall system is consistently favored by around 80% of the participants. Our project page is https://www.mmlab-ntu.com/project/talkedit/.

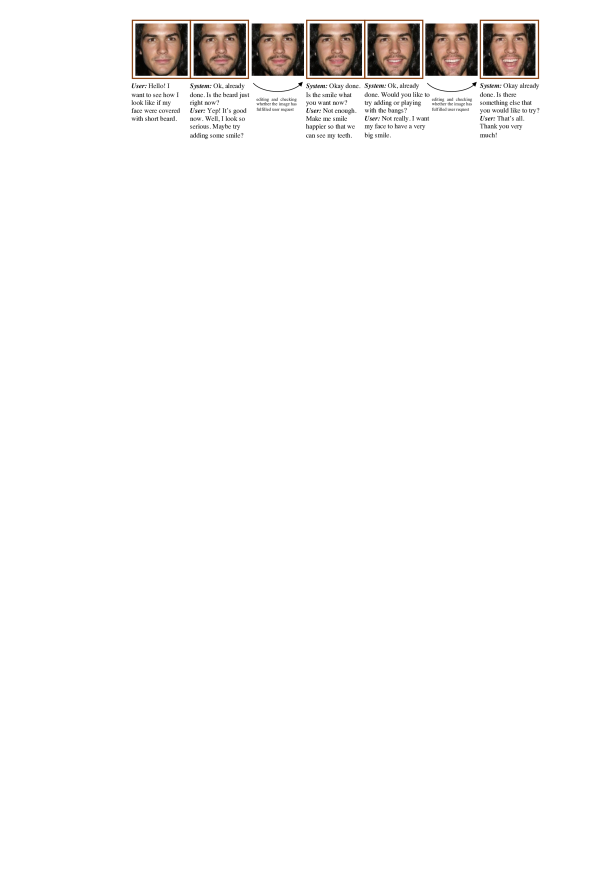

Figure 1: An example of Talk-to-Edit. The user provides a facial image and an editing request. Our system then edits the image accordingly, and provides meaningful language feedback such as clarification or alternative editing suggestions. During editing, the system is able to control the extent of attribute change on a fine-grained scale, and iteratively checks whether the current editing step fulfills the user’s request.

1 INTRODUCTION

The goal of facial editing is to enable users to manipulate facial images in their desired ways. Thanks to advances in deep generative models like GANs [gan, conditionalgan, biggan, pggan, stylegan1, stylegan2], facial editing has witnessed rapid growth in recent years, especially in image fidelity. While there have been several attempts to improve facial editing quality, they often lack interaction with users or require users to follow fixed control patterns. For instance, image-to-image translation models [cyclegan, stargan, translation_conditional, cross_domain, unsupervised_translation] only translate facial images between several discrete and fixed states, and users cannot exert any subjective control over the system. Other face editing methods offer users some control, such as a semantic map indicating the image layout [maskgan], a reference image demonstrating the target style [perceptual, universal_styletransfer, yin2019instance, li2021image], or a sentence describing a desired effect [recurrent_attentive, text_as_neural_operator, text_adaptive, prada, tedigan]. However, users have to follow these fixed patterns, which are too demanding and inflexible for most users. Besides, the only feedback provided by the system is the edited image itself.

In terms of the flexibility of interactions, we believe natural language is a good choice for users. Language is not only easy to express and rich in information, but also a natural form for the system to give feedback. Thus, in this work, we make the first attempt towards a dialog-based facial editing framework, namely Talk-to-Edit, where editing is performed round by round via request from the user and feedback from the system.

In such an interactive scenario, users might not have a clear target in their mind at the beginning of editing and thoughts might change during editing, like tuning an overly laughing face back to a moderate smile. Thus, the editing system is supposed to be capable of performing continuous and fine-grained attribute manipulations. While some approaches [interfacegan1, interfacegan2, unsupervised_discovery, closed_form_factorization, pca] could perform continuous editing to some extent by shifting the latent code of a pre-trained GAN [stylegan1, stylegan2, pggan, biggan], they typically make two assumptions: 1) the attribute change is achieved by traversing along a straight line in the latent space; 2) different identities share the same latent directions. However, these assumptions overlook the non-linear nature of the latent space of GAN, potentially leading to several shortcomings in practice: 1) The identity would drift during editing; 2) When editing an attribute of interest, other irrelevant attributes would be changed as well; 3) Artifacts would appear if the latent code goes along the straight line too far.

To address these challenges, we propose to learn a vector field that describes location-specific directions and magnitudes for attribute changes in the latent space of GAN, which we term as a “semantic field”. Traversing along the curved trajectory takes into account the non-linearity of attribute transition in the latent space, thus achieving more fine-grained and accurate facial editing. Besides, the curves changing the attributes of different identities might be different, which can also be captured by our semantic field with the location-specific property. In this case, the identity of the edited facial image would be better preserved. In practice, the semantic field is implemented as a mapping network, and is trained with fine-grained labels to better leverage its location-specific property, which is more expressive than prior methods supervised by binary labels.

The above semantic field editing strategy is readily embedded into our dialog system to constitute the whole Talk-to-Edit framework. Specifically, a user’s language request is encoded by a language encoder to guide the semantic field editing part to alter the facial attributes consistent with the language request. After editing, feedback would be given by the system conditioned on previous edits to check for further refinements or offer other editing suggestions. The user may respond to the system feedback for further editing actions, and this dialog-based editing iteration would continue until the user is satisfied with the edited results.

To facilitate the learning of semantic field and dialog-based editing, we contribute a large-scale visual-language dataset named CelebA-Dialog. Unlike prior datasets with only binary attribute labels, we annotate images in CelebA with attribute labels of fine granularity. Accompanied with each image, there is also a user request sample and several captions describing these fine-grained facial attributes.

In summary, our main contributions are: 1) We propose to perform fine-grained facial editing via dialog, a more accessible mode of interaction for users. 2) To achieve more continuous and fine-grained facial editing, we propose to model a location-specific semantic field. 3) We achieve superior results, with better identity preservation and smoother changes than prior methods. 4) We contribute a large-scale visual-language dataset, CelebA-Dialog, containing fine-grained attribute labels and textual descriptions.

Figure 2: Illustration of CelebA-Dialog dataset. We show example images and annotations for the smiling attribute. Below the images are the attribute degrees and the corresponding textual descriptions. We also show the fine-grained label distribution of the smiling attribute.

2 RELATED WORK

Semantic Facial Editing. Several methods have been proposed for editing specific attributes such as age progression [age_progression_gan, recurrent_face_aging], hair synthesis [hair_synthesis, hair_modeling], and smile generation [smile_generation]. Unlike these attribute-specific methods relying on facial priors such as landmarks, our method is able to manipulate multiple semantic attributes without using facial priors. Image-to-image translation methods [cyclegan, stargan, translation_conditional, cross_domain, unsupervised_translation] have shown impressive results on facial editing. However, they are insufficient to perform continuous editing because images are translated between two discrete domains.

Recently, latent space based manipulation methods [zhu2016generative, brock2016neural] are drawing increasing attention due to the advancement of GAN models like StyleGAN [karras2019style, karras2020analyzing]. These approaches typically discover semantically meaningful directions in the latent space of a pretrained GAN so that moving the latent code along these directions could achieve desired editing in the image space. Supervised methods find directions to edit the attributes of interest using attribute labels [interfacegan1, interfacegan2, enjoy_your_editing], while unsupervised methods exploit semantics learned by the pretrained GAN to discover the most important and distinguishable directions [unsupervised_discovery, pca, closed_form_factorization]. InterFaceGAN [interfacegan1, interfacegan2] finds a hyperplane in the latent space to separate semantics into a binary state and then uses the normal vector of the hyperplane as the editing direction. A recent work [enjoy_your_editing] learns a transformation supervised by binary attribute labels and directly adds the transformation direction to the latent code to achieve one-step editing. Some approaches [jahanian2019steerability, abdal2021styleflow] consider the non-linear property of latent space. Different from existing methods, we learn a location-specific field in the latent space supervised by fine-grained labels to achieve precise fine-grained editing and to preserve facial identities.

Language-based Image Editing. The flexibility of natural language has led researchers to propose a number of text-to-image generation [stackgan, generative_text_to_image, attngan, tedigan] and manipulation [recurrent_attentive, text_as_neural_operator, text_adaptive, prada, tedigan] approaches. For example, given an input image, TediGAN [tedigan] generates a new image conditioned on a text description. Some other approaches [benchmark, sequential_attngan, codraw, neural_painter, sscr, liu2020describe, li2020manigan] allow users to give requests in the form of natural language but do not provide meaningful feedback, clarification, suggestion, or interaction. Chatpainter [chatpainter] synthesizes an image conditioned on a completed dialog, but cannot talk to users round by round to edit images. Unlike existing systems that simply “listen” to users to edit, our dialog-based editing system is able to “talk” to users, edit the image according to user requests, clarify users’ intentions (especially regarding fine-grained attribute details), and offer other editing options for users to explore.

3 CELEBA-DIALOG DATASET

In the dialog-based facial editing scenarios, many rounds of edits are needed till users are satisfied with the edited images. To this end, the editing system should be able to generate continuous and fine-grained facial editing results, which contain intermediate states translating source images to target images. However, for most facial attributes, binary labels are not enough to precisely express the attribute degrees. Consequently, methods trained with only binary labels could not perform natural fine-grained facial editing. Specifically, they are not able to generate plausible results when attribute degrees become larger. Thus, fine-grained facial attribute labels are vital to providing supervision for fine-grained facial editing. Moreover, the system should also be aware of the attribute degrees of edited images so that it could provide precise feedback or suggestions to users, which also needs fine-grained labels for training.

Motivated by these, we contribute a large-scale visual-language face dataset named CelebA-Dialog. The CelebA-Dialog dataset has the following properties: 1) Facial images are annotated with rich fine-grained labels, which classify one attribute into multiple degrees according to its semantic meaning; 2) Accompanied with each image, there are captions describing the attributes and a user request sample. The CelebA-Dialog dataset is built as follows:

Figure 3: Overview of Talk-to-Edit Pipeline. In round $t$, we receive the input image $I_t$ and its corresponding latent code $z_t$ from the last round. Then the Language Encoder $E$ extracts the editing encoding $e_r$ from the user request $r_t$, and feeds $e_r$ to the Semantic Field $F$ to guide the editing process. The latent code $z_t$ is iteratively moved along field lines by adding the field vector $f = F(z_t)$ to $z_t$, and a pretrained predictor is used to check whether the target degree is achieved. Finally, the edited image $I_{t+1}$ will be output at the end of one round. Based on the editing encoding $e_r$, the Talk module gives language feedback such as clarification and alternative editing suggestions.

Data Source. CelebA dataset [celeba] is a well-known large-scale face attributes dataset, which contains 202,599 images. With each image, there are forty binary attribute annotations. Due to its large-scale property and diversity, we choose to annotate fine-grained labels for images in CelebA dataset. Among forty binary attributes, we select five attributes whose degrees cannot be exhaustively expressed by binary labels. The selected five attributes are Bangs, Eyeglasses, Beard, Smiling, and Young (Age).

Fine-grained Annotations. For Bangs, we classify the degrees according to the proportion of the exposed forehead. There are 6 fine-grained labels in total: 100%, 80%, 60%, 40%, 20%, and 0%. The fine-grained labels for Eyeglasses are annotated according to the thickness of the glasses frames and the type of glasses (ordinary / sunglasses). Beard is labeled according to the thickness of the beard. The criteria for Smiling are the ratio of exposed teeth and how open the mouth is. For age, we roughly classify images into six categories: below 15, 15-30, 30-40, 40-50, 50-60, and above 60. In Fig. 2, we provide examples of the fine-grained annotations of the smiling attribute. For more detailed definitions and examples of fine-grained labels for each attribute, please refer to the supplementary files.
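As a rough illustration of how a continuous quantity maps onto the six age categories listed above, the following sketch bins an age in years into a degree index. The helper name `age_to_degree` and the handling of boundary ages (for example, whether 30 belongs to 15-30 or 30-40) are assumptions; the text does not specify them.

```python
from bisect import bisect_right

# Boundaries between the six age categories named in the text:
# below 15, 15-30, 30-40, 40-50, 50-60, above 60.
# Assumption: a boundary age (e.g. 30) is placed in the older category.
AGE_BOUNDARIES = [15, 30, 40, 50, 60]


def age_to_degree(age_years: float) -> int:
    """Return an age degree in {0, ..., 5} (hypothetical helper)."""
    if age_years < 0:
        raise ValueError("age must be non-negative")
    # bisect_right counts how many boundaries are <= age_years.
    return bisect_right(AGE_BOUNDARIES, age_years)


print([age_to_degree(a) for a in (10, 29, 30, 45, 75)])
```

The other four attributes are annotated by visual criteria rather than by a measurable number, so a lookup of this kind would only apply to age.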

Textual Descriptions. For every image, we provide fine-grained textual descriptions which are generated via a pool of templates. The captions for each image contain one caption describing all the five attributes and five individual captions for each attribute. Some caption examples are given in Fig. 2. Besides, for every image, we also provide an editing request sample conditioned on the captions. For example, a serious-looking face is likely to be requested to add a smile.
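Template-based captioning of this kind can be sketched as follows for the Bangs attribute. The template strings, the function name `caption_bangs`, and the ordering of degrees are hypothetical; the actual template pool used for CelebA-Dialog is not reproduced here.

```python
import random

# Exposed-forehead proportions for the six Bangs labels listed in the text.
# Assumption: degree 0 corresponds to 100% of the forehead being exposed.
BANGS_EXPOSED_PERCENT = [100, 80, 60, 40, 20, 0]

# Hypothetical template pool, for illustration only.
BANGS_TEMPLATES = [
    "About {pct}% of the forehead is visible.",
    "The bangs leave roughly {pct}% of the forehead exposed.",
    "The fringe covers about {covered}% of the forehead.",
]


def caption_bangs(degree: int, rng: random.Random) -> str:
    """Pick a template at random and fill in the fine-grained degree."""
    if not 0 <= degree < len(BANGS_EXPOSED_PERCENT):
        raise ValueError("degree out of range")
    pct = BANGS_EXPOSED_PERCENT[degree]
    template = rng.choice(BANGS_TEMPLATES)
    # Unused keyword arguments are ignored by str.format.
    return template.format(pct=pct, covered=100 - pct)


print(caption_bangs(3, random.Random(0)))
```

Passing an explicit `random.Random` instance keeps the generated captions reproducible for a given seed.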

4 OUR APPROACH

The pipeline of the Talk-to-Edit system is depicted in Fig. 3. The whole system consists of three major parts: user request understanding, semantic field manipulation, and system feedback. The initial inputs to the whole system are an image $I$ and a user’s language request $r$. A language encoder $E$ is first employed to interpret the user request into the editing encoding $e_r$, indicating the attribute of interest, changing directions, etc. Then the editing encoding $e_r$ and the corresponding latent code $z$ are fed into the “semantic field” $F$ to find the corresponding vectors $f_z$ to change the specific attribute degrees. After one round of editing, the system will return the edited image $I'$ and provide reasonable feedback to the user. The editing will continue until the user is satisfied with the editing result.

4.1 USER REQUEST UNDERSTANDING

Given a user’s language request $r$, we use a language encoder $E$ to extract the editing encoding $e_r$ as follows:

$$e_r = E(r). \tag{1}$$

The editing encoding $e_r$, together with the dialog and editing history, and the current state of the semantic field, will decide and instruct the semantic field whether to perform an edit in the current round of dialog. The editing encoding $e_r$ contains the following information: 1) request type, 2) the attribute of interest, 3) the editing direction, and 4) the change of degree.

Users’ editing requests are classified into three types: 1) describe the attribute and specify the target degree, 2) describe the attribute of interest and indicate the relative degree of change, 3) describe the attribute and only the editing direction without specifying the degree of change. We use a template-based method to generate the three types of user requests and then train the language encoder on them.
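To make the four fields of the editing encoding and the three request types concrete, here is a minimal sketch. The `EditingEncoding` class, the integer coding of request types and directions, and the request templates in `make_request` are all illustrative assumptions; in the paper the encoding is produced by a trained language encoder rather than written by hand.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EditingEncoding:
    """Hypothetical container for the four pieces of information in e_r."""
    request_type: int         # 1: target degree, 2: relative change, 3: direction only
    attribute: str            # attribute of interest, e.g. "Smiling"
    direction: Optional[int]  # +1 to increase, -1 to decrease, None for type 1
    degree: Optional[int]     # target degree (type 1) or amount of change (type 2)


def make_request(enc: EditingEncoding) -> str:
    """Render a template-based user request (templates are made up)."""
    if enc.request_type not in (1, 2, 3):
        raise ValueError("request_type must be 1, 2 or 3")
    name = enc.attribute.lower()
    if enc.request_type == 1:
        return f"Set the {name} attribute to degree {enc.degree}."
    verb = "Increase" if enc.direction == 1 else "Decrease"
    if enc.request_type == 2:
        return f"{verb} the {name} attribute by {enc.degree} degree(s)."
    return f"{verb} the {name} attribute."


print(make_request(EditingEncoding(2, "Bangs", -1, 2)))
```

Pairs of rendered requests and their encodings could then serve as training data for a language encoder, which is the role the template-generated requests play in the text.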

4.2 SEMANTIC FIELD FOR FACIAL EDITING

Figure 4: (a) Training Scheme of Semantic Field. Predictor loss, identity keeping loss and discriminator loss are adopted to ensure the location-specific property of semantic field. (b) Illustration of Semantic Field in Latent Space. Different colors represent latent space regions with different attribute scores. The boundary between two colored regions is an equipotential subspace. Existing methods are represented by trajectory ①, where the latent code is shifted along a fixed direction throughout editing. Our method is represented by trajectory ②, where the latent code is moved along location-specific directions.

Given an input image $I \in \mathbb{R}^{3 \times H \times W}$ and a pretrained GAN generator $G$, similar to previous latent space based manipulation methods [interfacegan1, interfacegan2, enjoy_your_editing, pan20202d], we first need to invert the image to its corresponding latent code $z \in \mathbb{R}^d$ such that $I = G(z)$, and then find a vector $f_z \in \mathbb{R}^d$ that changes the attribute degree. Note that adopting the same vector for all faces makes editing vulnerable to identity change, as different faces could have different $f_z$. Thus, the vector should be location-specific, i.e., the vector is not only unique to different identities but also varies during editing. Motivated by this, we propose to model the latent space as a continual “semantic field”, i.e., a vector field that assigns a vector to each latent code.

Definition of Continual Semantic Field. For a latent code $z$ in the latent space, suppose its corresponding image $I$ has a score $s$ for a certain attribute. By finding a proper vector $f_z$ and then adding the vector to $z$, the attribute score $s$ will be changed to $s'$. Intuitively, the vector $f_z$ to increase the attribute score for the latent code $z$ is the gradient of $s$ with respect to $z$.

Mathematically, the attribute score is a scalar field, denoted as $S: \mathbb{R}^d \mapsto \mathbb{R}$. The gradient of attribute score field $S$ with respect to the latent code is a vector field, which we term as “semantic field”. The semantic field $F: \mathbb{R}^d \mapsto \mathbb{R}^d$ can be defined as follows:

$$F = \nabla S. \tag{2}$$

For a specific latent code $z$, the direction of its semantic field vector $f_z$ is the direction in which the attribute score $s$ increases the fastest.

In the latent space, if we want to change the attribute score $s$ of a latent code $z$, all we need is to move $z$ along the latent direction in the semantic field. Due to the location-specific property of the semantic field, the trajectory of changing the attribute score from $s_a$ to $s_b$ is curved. The formula for changing attribute score is expressed as:

$$s_a + \int_{z_a}^{z_b} f_z \cdot dz = s_b, \tag{3}$$

where $z_a$ is the initial latent code and $z_b$ is the end point. As the semantic field is continuous and location-specific, continuous facial editing can be easily achieved by traversing the latent space along the semantic field line.

Discretization of Semantic Field. Though the attribute score field and semantic field in the real world are both continual, in practice, we need to discretize the continual field to approximate the real-world continual one. Thus, the discrete version of Eq. (3) can be expressed as:

$$s_a + \sum_{i=1}^{N} f_{z_i} \cdot \Delta z_i = s_b. \tag{4}$$
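The discrete sum in Eq. (4) can be checked numerically on a toy problem. The sketch below assumes a hand-picked two-dimensional score function (`score`) whose gradient (`field`) is known in closed form; neither corresponds to a real GAN latent space or to the learned mapping network. It follows the field with small steps, accumulates the dot products of field vector and step, and compares the result with the actual change in score.

```python
def score(z):
    """Toy attribute score S(z); an anisotropic bowl (assumption)."""
    return -((z[0] - 1.0) ** 2 + 2.0 * (z[1] + 0.5) ** 2) / 2.0


def field(z):
    """Analytic gradient of the toy score, i.e. F = grad S."""
    return (-(z[0] - 1.0), -2.0 * (z[1] + 0.5))


def integrate_along_field(z_a, num_steps=1000, eta=0.002):
    """Follow the field line from z_a and accumulate sum_i f_{z_i} . dz_i."""
    z = tuple(z_a)
    total = 0.0
    for _ in range(num_steps):
        f = field(z)
        dz = (eta * f[0], eta * f[1])        # small step along the field
        total += f[0] * dz[0] + f[1] * dz[1]  # one term of the sum in Eq. (4)
        z = (z[0] + dz[0], z[1] + dz[1])
    return z, total


z_a = (-1.0, 1.0)
z_b, accumulated = integrate_along_field(z_a)
# The two printed numbers should agree up to a small discretization error.
print(score(z_a) + accumulated, score(z_b))
```

Because the two coordinates of this toy field decay at different rates, the path from `z_a` to `z_b` is curved rather than straight, which mirrors the location-specific behavior described above.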

The semantic field $F$ is implemented as a mapping network. For a latent code $z$, we could obtain its corresponding semantic field vector via $f_z = F(z)$. Then one step of latent code shifting is achieved by:

$$z' = z + \alpha f_z = z + \alpha F(z), \tag{5}$$

where $\alpha$ is the step size, which is set to $\alpha = 1$ in this work. Since $f_z$ is supposed to change the attribute degree, the edited image $I' = G(z')$ should have a different attribute score from the original image $I = G(z)$. During editing, we repeat Eq. (5) until the desired attribute score is reached.
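The repeat-until-reached loop around Eq. (5) might look like the sketch below. Everything prefixed with `toy_` is a stand-in chosen for illustration: the real semantic field is a trained mapping network, and the real predictor classifies the generated image $G(z')$ rather than the latent code directly. The `max_steps` guard and the restriction to increasing the degree are additional assumptions.

```python
import math

ALPHA = 1.0  # step size; the text sets alpha = 1


def toy_score(z):
    """Toy continuous attribute score (assumption)."""
    return z[0] + 0.25 * z[1] ** 2


def toy_field(z):
    """Scaled gradient of toy_score, standing in for the mapping network F."""
    return (0.1 * 1.0, 0.1 * 0.5 * z[1])


def toy_predictor(z):
    """Discrete degree in {0, ..., 5}, standing in for the attribute predictor."""
    return max(0, min(5, math.floor(toy_score(z))))


def edit_until(z, target_degree, max_steps=100):
    """Apply z <- z + alpha * F(z) until the predicted degree reaches the target."""
    steps = 0
    while toy_predictor(z) < target_degree and steps < max_steps:
        f = toy_field(z)
        z = (z[0] + ALPHA * f[0], z[1] + ALPHA * f[1])
        steps += 1
    return z, steps


z_final, n_steps = edit_until((0.2, 1.0), target_degree=3)
print(toy_predictor(z_final), n_steps)
```

Checking the predictor after every step is what lets an edit stop at an intermediate degree instead of jumping straight to an extreme.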

As illustrated in Fig. 4, to train the mapping network so that it has the property of a semantic field, a pretrained fine-grained attribute predictor $P$ is employed to supervise the learning of semantic field. The predictor has two main functions: one is to push the output vector to change the attribute of interest in a correct direction, and the other is to keep the other irrelevant attributes unchanged. Suppose we have $k$ attributes in total. The fine-grained attributes of the original image can be denoted as $(a_1, a_2, \ldots, a_i, \ldots, a_k)$, where $a_i \in \{0, 1, \ldots, C\}$ are the discrete class labels indicating the attribute degree. When we train the semantic field for the $i$-th attribute, the target attribute labels $y$ of the edited image $I'$ should be $(a_1, a_2, \ldots, a_i + 1, \ldots, a_k)$. With the target attribute labels, we can optimize the desired semantic field using the cross-entropy loss, then the predictor loss $L_{pred}$ is expressed as follows:

$$L_{pred} = -\sum_{i=1}^{k} \sum_{c=0}^{C} y_{i,c} \log(p_{i,c}), \tag{6}$$

where $C$ denotes the number of fine-grained classes, $y_{i,c}$ is the binary indicator with respect to the target class, and $p_{i,c}$ is the softmax output of predictor $P$, i.e., $p = P(I')$.
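A plain-Python sketch of Eq. (6) follows. It assumes the predictor returns one list of raw logits per attribute (the function names and this interface are hypothetical), and it clamps the incremented label at the highest degree, which is an assumption the text does not state.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one attribute's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def target_labels(original, edit_index, max_degree):
    """Copy the original labels and raise the edited attribute by one degree.

    Assumption: the label is clamped at max_degree.
    """
    target = list(original)
    target[edit_index] = min(target[edit_index] + 1, max_degree)
    return target


def predictor_loss(logits_per_attribute, target):
    """Cross-entropy summed over all k attributes, as in Eq. (6)."""
    loss = 0.0
    for logits, label in zip(logits_per_attribute, target):
        probs = softmax(logits)
        # y_{i,c} is 1 only at c == label, so one term survives per attribute.
        loss -= math.log(probs[label])
    return loss


y = target_labels([0, 3], edit_index=1, max_degree=5)
print(y, predictor_loss([[0.0] * 6, [0.0] * 6], y))
```

Summing over all attributes, not only the edited one, is what penalizes changes to the irrelevant attributes: their targets stay at the original labels.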

As the location-specific property of the semantic field allows different identities to have different vectors, we further introduce an identity keeping loss [wang2021gfpgan, taigman2016unsupervised] to better preserve the face identity when shifting the latent codes along the semantic field. Specifically, we employ an off-the-shelf face recognition model to extract discriminative features, and the extracted features during editing should be as close as possible. The identity keeping loss $L_{id}$ is defined as follows:

$$L_{id} = \left\| \mathrm{Face}(I') - \mathrm{Face}(I) \right\|_1, \tag{7}$$

where $\mathrm{Face}(\cdot)$ is the pretrained face recognition model [deng2019arcface].

Moreover, to avoid unrealistic artifacts in edited images, we could further leverage the pretrained discriminator $D$ coupled with the face generator as follows:

$$L_{disc} = -D(I'). \tag{8}$$

To summarize, we use the following loss functions to supervise the learning of semantic field:

$$L_{total} = \lambda_{pred} L_{pred} + \lambda_{id} L_{id} + \lambda_{disc} L_{disc}, \tag{9}$$

where $\lambda_{pred}$, $\lambda_{id}$ and $\lambda_{disc}$ are weights for predictor loss, identity keeping loss and discriminator loss respectively.
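The way Eqs. (7) to (9) combine can be sketched with plain lists standing in for feature vectors and a float standing in for the discriminator output. The function names are hypothetical, and the default weights of 1.0 are placeholders, since the weight values are not given in this section.

```python
def identity_loss(feat_edited, feat_original):
    """L1 distance between face-recognition features, as in Eq. (7)."""
    return sum(abs(a - b) for a, b in zip(feat_edited, feat_original))


def discriminator_loss(disc_score):
    """Negated discriminator output for the edited image, as in Eq. (8)."""
    return -disc_score


def total_loss(l_pred, l_id, l_disc, w_pred=1.0, w_id=1.0, w_disc=1.0):
    """Weighted sum from Eq. (9); the default weights are placeholders."""
    return w_pred * l_pred + w_id * l_id + w_disc * l_disc


l_id = identity_loss([1.0, 2.0], [0.0, 4.0])
l_disc = discriminator_loss(0.5)
print(total_loss(2.0, l_id, l_disc))
```

A higher discriminator score for the edited image lowers the total, so minimizing it pushes edits toward images the discriminator regards as realistic.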

Figure 5: Qualitative Comparison. We compare our approach with InterfaceGAN, Multiclass SVM and Enjoy Your Editing. Our editing results are more realistic. Besides, our method is less likely to change the identity and other attributes.

4.3 SYSTEM FEEDBACK

The system Talk module provides natural language feedback as follows:

$$\mathrm{feedback}_t = \mathrm{Talk}(\mathrm{feedback}_{t-1}, r, s, e_r, h), \tag{10}$$

where $r$ is the user request, $s$ is the current system state, $e_r$ is the editing encoding, and $h$ is the editing history.

The feedback provided by the system comes from one of the three categories: 1) checking if the attribute degree of the edited image meets users’ expectations, 2) providing alternative editing suggestions or options, and 3) asking for further user instructions.
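One way to picture the three feedback categories is a small rule-based selector. This is only a sketch under strong assumptions: the function `talk`, its reduced argument list (it drops the previous feedback and system state from Eq. (10)), the selection rules, and the message wording are all hypothetical and are not taken from the paper.

```python
from typing import List, Optional, Tuple

ATTRIBUTES = ["bangs", "eyeglasses", "beard", "smiling", "age"]


def talk(attribute: Optional[str], direction_only: bool,
         history: List[str]) -> Tuple[str, str]:
    """Return (category, message) for the current round (illustrative rules)."""
    # Category 3: no attribute could be identified, so ask for instructions.
    if attribute is None:
        return "ask_instruction", "What would you like to edit next?"
    # Category 1: the request gave no degree, so check the result with the user.
    if direction_only:
        return ("check_degree",
                f"Is the {attribute} just right now, or should I adjust it further?")
    # Category 2: suggest an attribute that has not been edited yet.
    remaining = [a for a in ATTRIBUTES if a != attribute and a not in history]
    if remaining:
        return "suggest", f"Done. Would you like to try editing the {remaining[0]} as well?"
    return "ask_instruction", "Is there anything else you would like to change?"


print(talk("smiling", False, ["bangs"]))
```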

| Methods |
|---|
| InterfaceGAN |
| Multiclass SVM |
| Enjoy Your Editing |
| Talk-to-Edit (Ours) |
| Talk-to-Edit (Ours) * |

Table 1: Quantitative Comparisons. We report Identity / Attribute preservation metrics. A lower identity score (smaller feature distance) means the identity is better preserved, and a lower attribute score (smaller cross-entropy) means the irrelevant attributes are less changed. Our method has a superior performance in terms of identity and attribute preservation.

Figure 6: Results of dialog-based facial editing. The whole process is driven by the dialog between the user and the system.

5 EXPERIMENTS

Evaluation Datasets. We synthesize the evaluation dataset by sampling latent codes from the StyleGAN pretrained on the CelebA dataset [celeba] and generating the corresponding images from these codes. When comparing with other latent-space-based manipulation methods, we edit the latent codes directly to avoid the error introduced by GAN-inversion methods. Considering computation resources, we compare our method with baselines on 128×128 images.

Evaluation Metrics. We evaluate the performance of facial editing methods in terms of identity and attribute preservation as well as the photorealism of edited images. To evaluate identity preservation, we extract the features of the images before and after editing with FaceNet [schroff2015facenet] and compute their Euclidean distance. For irrelevant attribute preservation, we use a retrained attribute predictor to output a cross-entropy score indicating whether the predicted attribute is consistent with its ground-truth label.
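The two preservation scores described above can be sketched as follows. This is a simplified illustration: the feature vectors are assumed to come from a face recognition network such as FaceNet, the predictor output is assumed to be a probability vector over attribute classes, and both are represented as plain Python lists. How scores are aggregated over images and attributes is not specified here and is left out.

```python
import math
from typing import Sequence


def identity_distance(feat_before: Sequence[float],
                      feat_after: Sequence[float]) -> float:
    """Euclidean distance between face features before and after editing.

    Lower means the identity is better preserved.
    """
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(feat_before, feat_after)))


def attribute_cross_entropy(probs: Sequence[float], label: int) -> float:
    """Cross-entropy of the predicted class probabilities against the label.

    Lower means the irrelevant attribute is less changed. The small floor
    avoids log(0) and is an implementation convenience.
    """
    return -math.log(max(probs[label], 1e-12))


# Example with toy values (not real FaceNet features or predictor outputs).
dist = identity_distance([0.0, 0.0], [3.0, 4.0])
ce = attribute_cross_entropy([0.25, 0.75], label=1)
```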

Apart from the aforementioned metrics, we also conduct a user study. Participants are shown two groups of editing results, one produced by our method and the other by a competing method. They compare the two groups and choose the more suitable one for each of the following questions: 1) Which group of images is more visually realistic? 2) Which group of images has more continuous changes? 3) After editing, which group of images better preserves the identity?

Figure 7: High-Resolution Image Editing. Our method can be generalized to 1024×1024 images.

Figure 8: Real Image Editing. Given a real image, we first invert the image to find its corresponding latent code in the latent space. We then add bangs, followed by a smile.

Figure 9: User Study. (a) The percentage of participants favoring our results against existing methods. Our results are preferred by the majority of participants. (b) Over half of the participants think the system feedback is natural.

Figure 10: Cosine Similarity. We compute the average cosine similarity between the initial direction and directions of later steps. As the attribute class changes, the cosine similarity decreases, indicating that the editing trajectories for most facial images are curved.

5.1 COMPARISON METHODS

InterfaceGAN. InterfaceGAN [interfacegan2] uses a single direction to perform continuous editing. The direction is obtained by computing the normal vector of the binary SVM boundary.

Multiclass SVM. We further propose an extended version of InterfaceGAN, named Multiclass SVM, where fine-grained labels are used to obtain multiple SVM boundaries. During editing, the direction is constantly switched.

Enjoy Your Editing. Enjoy Your Editing [enjoy_your_editing] learns a mapping network that generates an identity-specific direction, which is kept fixed during editing for a given identity.
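The single-direction baseline (InterfaceGAN) described above amounts to moving a latent code along one fixed unit vector. The sketch below assumes the SVM weight vector `w` has already been fitted elsewhere; the function names are illustrative, and latent codes are plain Python lists rather than tensors.

```python
import math
from typing import List, Sequence


def unit_normal(w: Sequence[float]) -> List[float]:
    """Unit normal of the hyperplane w . z + b = 0 (w assumed pre-fitted)."""
    norm = math.sqrt(sum(x * x for x in w))
    return [x / norm for x in w]


def edit_fixed_direction(z: Sequence[float], w: Sequence[float],
                         alpha: float) -> List[float]:
    """Move latent code z by step size alpha along the fixed unit normal."""
    n = unit_normal(w)
    return [zi + alpha * ni for zi, ni in zip(z, n)]


# Every step uses the same direction, so the trajectory is a straight line.
z_edited = edit_fixed_direction([0.0, 0.0], [3.0, 4.0], alpha=2.0)
```

Because the direction never depends on the current latent code, two steps of size `alpha` land on the same point as one step of size `2 * alpha`, which is what distinguishes this baseline from a location-specific direction.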

5.2 QUANTITATIVE EVALUATION

Identity/Attribute Preservation. To fairly compare the continuous editing results with existing methods, we produce our results purely based on semantic field manipulation and language is not involved. We compute the identity preservation and attribute preservation scores for the editing results of baseline methods. Table 1 shows the quantitative comparison results. Our method achieves the best identity and attribute preservation scores.

Ablation Study. The location-specific property of the semantic field has two implications: 1) the trajectory to edit one identity might be a curve instead of a straight line; 2) the editing trajectories are unique to individual identities. The superiority over InterfaceGAN and Enjoy Your Editing validates that the curved trajectory is vital for continuous editing, and we provide further analysis in Section 5.4. Compared to Multiclass SVM, our results confirm the necessity of different directions for different identities.

5.3 QUALITATIVE EVALUATION

Visual Photorealism. Qualitative comparisons are shown in Fig. 5. The displayed results of our method are edited in W+ space. Our proposed method is less likely to generate artifacts than previous methods. Moreover, when the edited attribute reaches higher degrees, our method still generates plausible editing results while keeping the identity unchanged.

User Study. We conduct a user study in which users are asked the aforementioned questions and choose the better group of images. A total of 27 participants are involved, and each is required to compare 25 groups of images. We mix the editing results of different attributes together in the user study. The results are shown in Fig. 9 (a). They indicate that the majority of users prefer our proposed method in terms of image photorealism, editing smoothness, and identity preservation.

∗ edits on W+ space. Others edit on Z space.

Dialog Fluency. In Fig. 6, we show a dialog example in which the system is asked to add a beard to the young man in the picture. Once the beard reaches the desired degree, the system continues to edit the smile as required by the user. The system talks to the user smoothly throughout the dialog. To further evaluate dialog fluency, we invite seven participants to compare six pairs of dialogs. In each pair, one dialog is generated by the system and the other is revised by a human. Participants decide which one is more natural or whether the two are indistinguishable. The results are shown in Fig. 9 (b). Over half of the participants think the system feedback is natural and fluent.

5.4 FURTHER ANALYSIS

High-Resolution Facial Editing. Since our editing method is based on latent space manipulation, it can be extended to images of any resolution as long as a pretrained GAN is available. Apart from the editing results on 128×128 images shown in previous parts, we also provide some 1024×1024 editing results in Fig. 7.

Location-specific Property of Semantic Field. When traversing the semantic field, the trajectory to change the attribute degree is determined by the curvature at each step, and thus it is curved. To verify this, we randomly sample 100 latent codes and continuously add eyeglasses to the corresponding 1024×1024 images. For every editing direction, we compute its cosine similarity with the initial direction. The average cosine similarity against the attribute class change is plotted in Fig. 10. We observe that the cosine similarity tends to decrease as the attribute class change increases. This confirms that the editing direction can change constantly according to its current location, and thus the location-specific property is vital for continuous editing and identity preservation.
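The cosine similarity analysis above can be sketched as follows. The sketch assumes the editing direction at each step has been recorded for every sampled latent code; the function names are illustrative, and the mapping from step index to attribute class change used for the plot is omitted.

```python
import math
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_to_initial(directions: Sequence[Sequence[float]]) -> List[float]:
    """Similarity of each later editing direction to the first one."""
    initial = directions[0]
    return [cosine_similarity(initial, d) for d in directions[1:]]


def average_over_samples(per_sample: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-step similarities across sampled latent codes."""
    n_steps = min(len(s) for s in per_sample)
    return [sum(s[i] for s in per_sample) / len(per_sample)
            for i in range(n_steps)]


# Toy example: one trajectory whose direction rotates by 90 degrees.
sims = similarity_to_initial([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
```

A straight-line method would give a similarity of 1 at every step, so a decreasing average is evidence of curved trajectories.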

Real Image Editing. In Fig. 8, we show an example of real image editing. The image is first inverted using the inversion method proposed by Pan et al. [pan2020exploiting]. The inversion process finetunes the weights of StyleGAN, and we observe that the trained semantic field still works.

6 CONCLUSION

In this paper, we present a dialog-based fine-grained facial editing system named Talk-to-Edit. The desired facial editing is driven by users’ language requests, and the system provides feedback to users to make the facial editing more feasible. By modeling the non-linearity of the GAN latent space with a semantic field, our proposed method delivers more continuous and fine-grained editing results. We also contribute a large-scale visual-language facial attribute dataset named CelebA-Dialog, which we believe will benefit fine-grained and language-driven facial editing tasks. In future work, the performance of real facial image editing can be further improved by incorporating more robust GAN-inversion methods and adding stronger identity keeping regularization. We also hope to handle more complex text requests by leveraging advanced pretrained language models.

Acknowledgement. This study is supported by NTU NAP, MOE AcRF Tier 1 (2021-T1-001-088), and under the RIE2020 Industry Alignment Fund – Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).

REFERENCES

SUPPLEMENTARY

In this supplementary file, we will explain the detailed annotation definition of CelebA-Dialog Dataset in Section A. Then we will introduce implementation details in Section B. In Section C, we will give more detailed explanations on experiments, including evaluation dataset, evaluation metrics and implementation details on comparison methods. Then we provide more visual results in Section D. Finally, we will discuss failure cases in Section E.

APPENDIX A CELEBA-DIALOG DATASET ANNOTATIONS

For each image, fine-grained attribute annotations and textual descriptions are provided. In Table A - A, we give detailed definitions of fine-grained attribute annotations. With each fine-grained attribute labe