Using GANs to Create Fantastical Creatures

Tuesday, November 17, 2020

Posted by Andeep Singh Toor, Stadia Software Engineer, and Fred Bertsch, Software Engineer, Google Research, Brain Team

Creating art for digital video games takes a high degree of artistic creativity and technical knowledge, while also requiring game artists to quickly iterate on ideas and produce a high volume of assets, often in the face of tight deadlines. What if artists had a paintbrush that acted less like a tool and more like an assistant? A machine learning model acting as such a paintbrush could reduce the amount of time necessary to create high-quality art without sacrificing artistic choices, perhaps even enhancing creativity.

Today, we present Chimera Painter, a trained machine learning (ML) model that automatically creates a fully fleshed out rendering from a user-supplied creature outline. Employed as a demo application, Chimera Painter adds features and textures to a creature outline segmented with body part labels, such as “wings” or “claws”, when the user clicks the “transform” button. Below is an example using the demo with one of the preset creature outlines.

Using an image imported to Chimera Painter or generated with the tools provided, an artist can iteratively construct or modify a creature outline and use the ML model to generate realistic-looking surface textures. In this example, an artist (Lee Dotson) customizes one of the creature designs that come pre-loaded in the Chimera Painter demo.

In this post, we describe some of the challenges in creating the ML model behind Chimera Painter and demonstrate how one might use the tool for the creation of video game-ready assets.

Prototyping for a New Type of Model

In developing an ML model to produce video game-ready creature images, we created a digital card game prototype around the concept of combining creatures into new hybrids that can then battle each other. In this game, a player would begin with cards of real-world animals (e.g., an axolotl or a whale) and could make them more powerful by combining them (making the dreaded Axolotl-Whale chimera). This provided a creative environment for demonstrating an image-generating model, as the number of possible chimeras necessitated a method for quickly designing large volumes of artistic assets that could be combined naturally, while still retaining identifiable visual characteristics of the original creatures.

Since our goal was to create high-quality creature card images guided by artist input, we experimented with generative adversarial networks (GANs), informed by artist feedback, to create creature images that would be appropriate for our fantasy card game prototype. GANs pit two convolutional neural networks against each other: a generator network that creates new images and a discriminator network that determines whether these images are samples from the training dataset (in this case, artist-created images) or not. We used a variant called a conditional GAN, where the generator takes a separate input to guide the image generation process. Interestingly, our approach was a marked departure from other GAN efforts, which typically focus on photorealism.
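To make the generator/discriminator tug-of-war concrete, here is a minimal sketch of the two loss terms in a conditional GAN. It assumes the standard binary cross-entropy objective with the non-saturating generator loss; this post does not state which loss the Chimera Painter model used, and the function and variable names (`bce`, `d_real`, `d_fake`) are hypothetical. `d_real` stands for the discriminator's probability that an artist-created image, paired with its conditioning input, is real, and `d_fake` for the same probability on a generated image.

```python
import math


def bce(prob, target):
    """Binary cross-entropy for a single probability, clamped away from 0 and 1."""
    eps = 1e-7
    p = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """Discriminator wants real pairs scored near 1 and generated pairs near 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    """Non-saturating loss (an assumption): generator wants its output scored near 1."""
    return bce(d_fake, 1.0)


# A confident, correct discriminator has a low loss ...
print(discriminator_loss(d_real=0.9, d_fake=0.1))
# ... while the generator it has just caught has a high one.
print(generator_loss(d_fake=0.1))
```

In a real training loop the two probabilities would come from the discriminator network, and the two losses would be minimized in alternation with respect to each network's weights.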

To train the GANs, we created a dataset of full color images with single-species creature outlines adapted from 3D creature models. The creature outlines characterized the shape and size of each creature, and provided a segmentation map that identified individual body parts. After model training, the model was tasked with generating multi-species chimeras, based on outlines provided by artists. The best performing model was then incorporated into Chimera Painter. Below we show some sample assets generated using the model, including single-species creatures, as well as the more complex multi-species chimeras.

Generated card art integrated into the card game prototype showing basic creatures (bottom row) and chimeras from multiple creatures, including an Antlion-Porcupine, an Axolotl-Whale, and a Crab-Antlion-Moth (top row). More info about the game itself is detailed in this Stadia Research presentation.

Learning to Generate Creatures with Structure

An issue with using GANs for generating creatures was the potential for loss of anatomical and spatial coherence when rendering subtle or low-contrast parts of images, despite these being of high perceptual importance to humans. Examples of this can include eyes, fingers, or even distinguishing between overlapping body parts with similar textures (see the affectionately named BoggleDog below).

GAN-generated image showing mismatched body parts.

Generating chimeras required a new non-photographic fantasy-styled dataset with unique characteristics, such as dramatic perspective, composition, and lighting. Existing repositories of illustrations were not appropriate to use as datasets for training an ML model, because they may be subject to licensing restrictions, have conflicting styles, or simply lack the variety needed for this task.

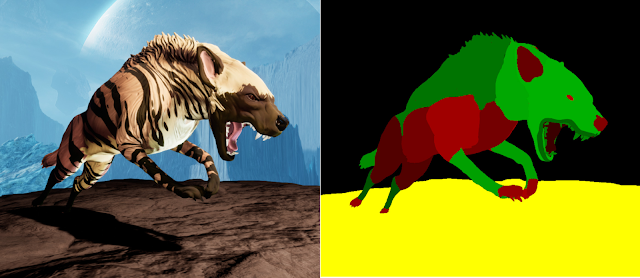

To solve this, we developed a new artist-led, semi-automated approach for creating an ML training dataset from 3D creature models, which allowed us to work at scale and rapidly iterate as needed. In this process, artists would create or obtain a set of 3D creature models, one for each creature type needed (such as hyenas or lions). Artists then produced two sets of textures that were overlaid on the 3D model using the Unreal Engine: one with the full color texture (left image, below) and the other with flat colors for each body part (e.g., head, ears, neck), called a “segmentation map” (right image, below). This second set of body part segments was given to the model during training to ensure that the GAN learned about body part-specific structure, shapes, textures, and proportions for a variety of creatures.

Example dataset training image and its paired segmentation map.
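As a rough illustration of how a flat-color segmentation map can be turned into something a model can consume, the sketch below maps each pixel color to a body part label and then builds one binary mask per part. The palette, the part names, and the one-mask-per-part encoding are all assumptions made for this example; the post does not specify the colors used or how the segmentation map was fed to the GAN.

```python
# Hypothetical palette: these colors and part names are illustrative only.
PALETTE = {
    (255, 0, 0): "head",
    (0, 255, 0): "ears",
    (0, 0, 255): "neck",
}
BACKGROUND = "background"


def label_map(rgb_rows):
    """Map each flat-color pixel to a body part name; unknown colors become background."""
    return [[PALETTE.get(tuple(px), BACKGROUND) for px in row] for row in rgb_rows]


def one_hot(labels, classes):
    """Build one binary mask (a list of rows) per class name."""
    return {
        c: [[1 if lab == c else 0 for lab in row] for row in labels]
        for c in classes
    }


# A tiny 2x3 "segmentation map" written as rows of RGB tuples.
image = [
    [(255, 0, 0), (255, 0, 0), (0, 0, 0)],
    [(0, 0, 255), (0, 255, 0), (0, 0, 0)],
]
labels = label_map(image)
masks = one_hot(labels, ["head", "ears", "neck", BACKGROUND])
print(labels[0])
print(masks["neck"])
```

Because each pixel carries exactly one flat color, every pixel ends up in exactly one mask, which is what lets a model associate textures and shapes with a specific body part.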

The 3D creature models were all placed in a simple 3D scene, again using the Unreal Engine. A set of automated scripts would then take this 3D scene and interpolate between different poses, viewpoints, and zoom levels for each of the 3D creature models, creating the full color images and segmentation maps that formed the training dataset for the GAN. Using this approach, we generated 10,000+ image + segmentation map pairs per 3D cr