Improving Diffusers Package for High-Quality Image Generation

Overcoming token size limitations, custom model loading, LoRA support, textual inversion support, and more

Goodbye Babel, generated by Andrew Zhu using Diffusers in pure Python

Stable Diffusion WebUI from AUTOMATIC1111 has proven to be a powerful tool for generating high-quality images with diffusion models. However, while the WebUI is easy to use, data scientists, machine learning engineers, and researchers often need more control over the image generation process. This is where the Diffusers package from Hugging Face comes in: it runs the diffusion model directly in Python and lets users customize models and prompts to fit their specific needs.

Despite its potential, the Diffusers package has several limitations that prevent it from generating images as good as those produced by the Stable Diffusion WebUI. The most significant of these limitations include:

- The inability to use custom models in the .safetensors file format;

- The 77 prompt token limitation;

- A lack of LoRA support;

- The absence of image scale-up functionality (also known as HighRes in Stable Diffusion WebUI);

- Low performance and high VRAM usage by default.

This article aims to address these limitations and enable the Diffusers package to generate high-quality images comparable to those produced by the Stable Diffusion WebUI. With the enhancement solutions provided, data scientists, machine learning engineers, and researchers can enjoy greater control and flexibility in their image generation processes while also achieving exceptional results. In the following sections, we will explore the various strategies and techniques that can be used to overcome these limitations and unlock the full potential of the Diffusers package.

If this is your first time running Stable Diffusion, follow the link below to install all the required CUDA and Python packages.

[Installation (huggingface.co)](https://huggingface.co/docs/diffusers/installation)

## 1. Load Up Local Model Files in .safetensors Format

Users can easily spin up diffusers to generate an image like this:

from diffusers import DiffusionPipeline

pipeline = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")

pipeline.to("cuda")

image = pipeline("A cute cat playing piano").images[0]

image.save("image_of_cat_playing_piano.png")

You may not be satisfied with either the output image or the performance. Let’s deal with the problems one by one. First, let’s load a custom model in .safetensors format located anywhere on your machine. You can’t just load the model file like this:

pipeline = DiffusionPipeline.from_pretrained("/model/custom_model.safetensors")

Here are the detailed steps to convert a .safetensors file to the Diffusers format:

Step 1. Pull all diffusers code from GitHub

git clone https://github.com/huggingface/diffusers.git

Step 2. Under the scripts folder, locate the file convert_original_stable_diffusion_to_diffusers.py.

In your terminal, run this command to convert the .safetensors file to the Diffusers format. Remember to change the --checkpoint_path value (and the --dump_path output folder) to match your setup.

python convert_original_stable_diffusion_to_diffusers.py --from_safetensors --checkpoint_path="D:\stable-diffusion-webui\models\Stable-diffusion\deliberate_v2.safetensors" --dump_path='D:\sd_models\deliberate_v2' --device='cuda:0'
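If you would rather drive the conversion from Python, the same command can be assembled as an argument list, which sidesteps shell quoting differences between Windows and Linux. This is only a sketch: `build_convert_command` is a hypothetical helper name, the flags are the ones shown in the command above, and the paths are placeholders.

```python
import sys

def build_convert_command(checkpoint_path, dump_path, device="cuda:0",
                          script="convert_original_stable_diffusion_to_diffusers.py"):
    """Return the conversion command as a list of arguments (no shell quoting needed)."""
    return [
        sys.executable, script,
        "--from_safetensors",
        "--checkpoint_path", checkpoint_path,
        "--dump_path", dump_path,
        "--device", device,
    ]

cmd = build_convert_command(
    r"D:\stable-diffusion-webui\models\Stable-diffusion\deliberate_v2.safetensors",
    r"D:\sd_models\deliberate_v2",
)
print(" ".join(cmd))

# To actually run it from inside the diffusers/scripts folder:
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing a list to `subprocess.run` avoids having to decide between single and double quotes around Windows paths.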

Step 3. Now you can load the pipeline from the newly converted model folder. Here is the complete code:

from diffusers import DiffusionPipeline

pipeline = DiffusionPipeline.from_pretrained(

r"D:\sd_models\deliberate_v2"

)

pipeline.to("cuda")

image = pipeline("A cute cat playing piano").images[0]

image.save("image_of_cat_playing_piano.png")

You should be able to convert and use any models you download from huggingface or civitai.com.
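Before loading, it can help to check that the conversion actually produced a Diffusers-format folder. As far as I know, such a folder has a `model_index.json` file at its root; the helper below (a hypothetical name, not part of the Diffusers API) checks only for that file, and the path is the placeholder used in the example above.

```python
from pathlib import Path

def looks_like_diffusers_folder(path):
    """Return True if `path` is a directory that contains model_index.json."""
    p = Path(path)
    return p.is_dir() and (p / "model_index.json").is_file()

model_dir = r"D:\sd_models\deliberate_v2"  # placeholder path from the example above
if not looks_like_diffusers_folder(model_dir):
    print(f"{model_dir} does not look like a converted Diffusers folder; "
          "run the conversion script first.")
```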

Cat playing piano, generated by the above code

## 2. Boost the Performance of Diffusers

Generating high-quality images can be time-consuming, even on the latest Nvidia RTX 30-series and 40-series GPUs. By default, the Diffusers package comes with non-optimized settings. Two solutions can greatly boost performance.

Before applying them, the iteration speed is only about 2.x iterations per second on an RTX 3070 Ti with 8 GB of VRAM when generating a 512x512 image.

- Use Half Precision Weights

The first solution is to use half precision weights. Half precision weights use 16-bit floating-point numbers instead of the traditional 32-bit numbers. This reduces the memory required for storing weights and speeds up computation, which can significantly improve the performance of the Diffusers package.
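To make the trade-off concrete, the sketch below uses only the Python standard library. It estimates weight storage at 4 bytes (FP32) versus 2 bytes (FP16) per parameter, and round-trips a value through a 16-bit float to show the small precision loss. The parameter count is an illustrative round number, not a measurement of any particular model.

```python
import struct

def weights_size_gib(num_params, bytes_per_param):
    """Approximate storage needed for the weights, in GiB."""
    return num_params * bytes_per_param / 1024**3

def to_fp16_and_back(x):
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

num_params = 1_000_000_000  # assumption: a round number for illustration only

print(f"FP32 weights: {weights_size_gib(num_params, 4):.2f} GiB")
print(f"FP16 weights: {weights_size_gib(num_params, 2):.2f} GiB")
print(f"0.1 stored as FP16 becomes {to_fp16_and_back(0.1)!r}")
```

The memory needed for weights halves, while the stored values change only slightly, which matches the small quality impact described in this section.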

According to this video, reducing float precision from FP32 to FP16 will also enable the Tensor Cores.

I wrote another article that tests how much GPU Tensor Cores can speed up computation:

[How Fast GPU Computation Can Be: A comparison of matrix arithmetic calculation in CPU and GPU with Python and PyTorch (towardsdatascience.com)](https://towardsdatascience.com/how-fast-gpu-computation-can-be-41e8cff75974)

Here is how to enable FP16 in Diffusers. Adding just two lines of code makes generation about 5x faster, with almost no impact on image quality.

from diffusers import DiffusionPipeline

import torch # <----- Line 1 added

pipeline = DiffusionPipeline.from_pretrained(

r"D:\sd_models\deliberate_v2"

,torch_dtype = torch.float16 # <----- Line 2 Added

)

pipeline.to("cuda")

image = pipeline("A cute cat playing piano").images[0]

image.save("image_of_cat_playing_piano.png")

Now the iteration speed rises to 10.x iterations per second, about 5x faster.
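As a quick sanity check on those numbers, the arithmetic below turns iterations per second into a speedup factor and a time per image. The values 2.0 and 10.0 are stand-ins for the "2.x" and "10.x" readings, and the 50-step count is an assumption used only for illustration.

```python
def speedup(before_it_per_s, after_it_per_s):
    """How many times faster the second measurement is."""
    return after_it_per_s / before_it_per_s

def seconds_per_image(it_per_s, steps):
    """Time spent in the denoising loop for one image."""
    return steps / it_per_s

before, after = 2.0, 10.0  # stand-ins for the 2.x and 10.x readings
steps = 50                 # assumption: number of denoising steps per image

factor = speedup(before, after)
print(f"Speedup: {factor:.1f}x")
print(f"FP32: {seconds_per_image(before, steps):.1f} s per image")
print(f"FP16: {seconds_per_image(after, steps):.1f} s per image")
```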

- Use Xformers

xFormers is an open-source library from Meta that provides optimized building blocks for Transformer models, including a memory-efficient attention implementation. It is built on top of PyTorch, and Diffusers can use it to speed up its attention layers and reduce VRAM usage. (Nowadays, are there any models that don’t use Transformers? :P)

Install xFormers with pip install xformers, then switch Diffusers to it with one line of code.

...

pipeline.to("cuda")

pipeline.enable_xformers_memory_efficient_attention() # <--- one line added

...

This one line of code boosts performance by another 20%.
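If xFormers is not installed or is not supported on a given machine, the call can fail. A defensive variant is sketched below: it tries xFormers first and otherwise falls back to `enable_attention_slicing()`, a Diffusers pipeline method that lowers memory use at some cost in speed. The helper name `enable_fast_attention` is my own, and the fallback choice is an assumption rather than something this article benchmarked.

```python
def enable_fast_attention(pipeline):
    """Try xFormers attention first; fall back to attention slicing.

    Returns the name of the option that was enabled.
    """
    try:
        pipeline.enable_xformers_memory_efficient_attention()
        return "xformers"
    except Exception as err:  # e.g. xformers missing or unsupported on this GPU
        print(f"xFormers unavailable ({err}); using attention slicing instead.")
        pipeline.enable_attention_slicing()
        return "attention_slicing"

# Usage, after pipeline.to("cuda"):
# enable_fast_attention(pipeline)
```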

## 3. Remove the 77 Prompt Token Limitation

In the current version of Diffusers, prompts are limited to 77 tokens, which is the maximum sequence length of the CLIP text encoder used by Stable Diffusion. Anything beyond that is truncated.

Fortunately, there is a solution to this problem. By using the “lpw_stable_diffusion” pipeline provided by the community, you can unlock the 77 prompt token limitation and generate high-quality images with longer prompts.

To use the “lpw_stable_diffusion” pipeline, you can use the following code:

pipeline = DiffusionPipeline.from_pretrained(

model_path,

custom_pipeline="lpw_stable_diffusion", #<--- code added

torch_dtype=torch.float16

)

In this code, we are initializing a new DiffusionPipeline object using the “from_pretrained” method. We are specifying the path to the pre-trained model and setting the “custom_pipeline” argument to “lpw_stable_diffusion”. This tells Diffusers to use the “lpw_stable_diffusion” pipeline, which unlocks the 77 prompt token limitation.
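One common way to get past the limit, and roughly what I understand the community pipeline to do, is to split the prompt tokens into windows that each fit the text encoder, encode each window separately, and concatenate the resulting embeddings. The sketch below shows only the splitting step on made-up token ids. It illustrates the idea and is not the pipeline's actual code; the token id constants and the function name are assumptions.

```python
BOS_ID, EOS_ID = 49406, 49407  # assumed CLIP start/end token ids
CHUNK = 75                     # 77 minus the start and end tokens

def chunk_token_ids(token_ids, chunk_size=CHUNK, bos=BOS_ID, eos=EOS_ID):
    """Split prompt token ids into 77-token windows, each wrapped in BOS/EOS."""
    chunks = []
    for start in range(0, max(len(token_ids), 1), chunk_size):
        body = token_ids[start:start + chunk_size]
        padding = [eos] * (chunk_size - len(body))  # pad the last window
        chunks.append([bos] + body + padding + [eos])
    return chunks

fake_prompt_ids = list(range(1000, 1100))  # 100 made-up token ids
windows = chunk_token_ids(fake_prompt_ids)
print(len(windows), [len(w) for w in windows])  # prints: 2 [77, 77]
```

Each window is exactly 77 tokens long, so the text encoder never sees a sequence longer than it supports.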

Now, let’s use a long prompt string to test it out. Here is the complete code:

from diffusers import DiffusionPipeline

import torch

pipeline = DiffusionPipeline.from_pretrained(

r"D:\sd_models\deliberate_v2"

,custom_pipeline = "lpw_stable_diffusion" #<--- code added

,torch_dtype = torch.float16

)

pipeline.to("cuda")

pipeline.enable_xformers_memory_efficient_attention()

prompt = """

Babel tower falling down, walking on the starlight, dreamy ultra wide shot

, atmospheric, hyper realistic, epic composition, cinematic, octane render

, artstation landscape vista photography by Carr Clifton & Galen Rowell, 16K resolution

, Landscape veduta photo by Dustin Lefevre & tdraw, detailed landscape painting by Ivan Shishkin

, DeviantArt, Flickr, rendered in Enscape, Miyazaki, Nausicaa Ghibli, Breath of The Wild

, 4k detailed post processing, artstation, rendering by octane, unreal engine

"""

image = pipeline(prompt).images[0]

image.save("goodbye_babel_tower.png")

And you will get an image like this:

Goodbye Babel, generated by Andrew Zhu using diffusers

If you still see a warning message like "Token indices sequence length is longer than the specified maximum sequence length for this model ( *** > 77 ) . Running this sequence through the model will result in indexing errors", this is normal and you can ignore it.

## 4. Use Custom LoRA with Diffusers

Despite the claims of LoRA support in Diffusers, users still face limitations when loading local LoRA files in the .safetensors file format. This can be a significant obstacle to using LoRAs shared by the community.

To overcome this limitation, I have created a function that loads a LoRA file with a chosen weight at runtime. It merges the LoRA weights directly into a Diffusers model, enabling the generation of high-quality images with community LoRAs.

Here is the function body:

from safetensors.torch import load_file
import torch  # needed for torch.mm and torch.float32 below

def __load_lora(
    pipeline
    ,lora_path
    ,lora_weight=0.5
):
    state_dict = load_file(lora_path)
    LORA_PREFIX_UNET = 'lora_unet'
    LORA_PREFIX_TEXT_ENCODER = 'lora_te'

    alpha = lora_weight
    visited = []

    # directly update weight in diffusers model
    for key in state_dict:

        # alpha is already set above, so skip the stored alpha entries
        if '.alpha' in key or key in visited:
            continue

        if 'text' in key:
            layer_infos = key.split('.')[0].split(LORA_PREFIX_TEXT_ENCODER+'_')[-1].split('_')
            curr_layer = pipeline.text_encoder
        else:
            layer_infos = key.split('.')[0].split(LORA_PREFIX_UNET+'_')[-1].split('_')
            curr_layer = pipeline.unet

        # find the target layer by walking the attribute names
        temp_name = layer_infos.pop(0)
        while len(layer_infos) > -1:
            try:
                curr_layer = curr_layer.__getattr__(temp_name)
                if len(layer_infos) > 0:
                    temp_name = layer_infos.pop(0)
                elif len(layer_infos) == 0:
                    break
            except Exception:
                # the attribute name itself contains '_', so join the next part
                if len(temp_name) > 0:
                    temp_name += '_'+layer_infos.pop(0)
                else:
                    temp_name = layer_infos.pop(0)

        # org_forward(x) + lora_up(lora_down(x)) * multiplier
        pair_keys = []
        if 'lora_down' in key:
            pair_keys.append(key.replace('lora_down', 'lora_up'))
            pair_keys.append(key)
        else:
            pair_keys.append(key)
            pair_keys.append(key.replace('lora_up', 'lora_down'))

        # update weight
        if len(state_dict[pair_keys[0]].shape) == 4:
            # convolution weights: drop the trailing 1x1 dims before multiplying
            weight_up = state_dict[pair_keys[0]].squeeze(3).squeeze(2).to(torch.float32)
            weight_down = state_dict[pair_keys[1]].squeeze(3).squeeze(2).to(torch.float32)
            curr_layer.weight.data += alpha * torch.mm(weight_up, weight_down).unsqueeze(2).unsqueeze(3)
        else:
            weight_up = state_dict[pair_keys[0]].to(torch.float32)
            weight_down = state_dict[pair_keys[1]].to(torch.float32)
            curr_layer.weight.data += alpha * torch.mm(weight_up, weight_down)

        # update visited list
        for item in pair_keys:
            visited.append(item)

    return pipeline

The logic is extracted from the convert_lora_safetensor_to_diffusers.py of the diffusers git repo.
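The core of the function is the weight update W ← W + α · (W_up · W_down), where α is the LoRA weight. The toy example below reproduces that update with plain Python lists so the arithmetic is easy to follow. The matrices are made up for illustration, and `merge_lora` is a hypothetical helper, not part of the function above.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def merge_lora(weight, lora_up, lora_down, alpha):
    """Return weight + alpha * (lora_up @ lora_down) as a new nested list."""
    delta = matmul(lora_up, lora_down)
    return [[w + alpha * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]

weight    = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 layer weight
lora_up   = [[1.0], [2.0]]            # shape (2, rank=1)
lora_down = [[0.5, 0.5]]              # shape (rank=1, 2)

merged = merge_lora(weight, lora_up, lora_down, alpha=0.5)
print(merged)  # prints: [[1.25, 0.25], [0.5, 1.5]]
```

Because the update is a plain addition, a larger α pushes the model further toward the LoRA's style, and α = 0 leaves the model unchanged.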

Take one of the famous LoRAs, MoXin, as an example. You can use the __load_lora function like this:

from diffusers import DiffusionPipeline

import torch

pipeline = DiffusionPipeline.from_pretrained(

r"D:\sd_models\deliberate_v2"

,custom_pipeline = "lpw_stable_diffusion"

,torch_dtype = torch.float16

)

lora = (r"D:\sd_models\Lora\Moxin_10.safetensors",0.8)

pipeline = __load_lora(pipeline=pipeline,lora_path=lora[0],lora_weight=lora[1])

pipeline.to("cuda")

pipeline.enable_xformers_memory_efficient_attention()

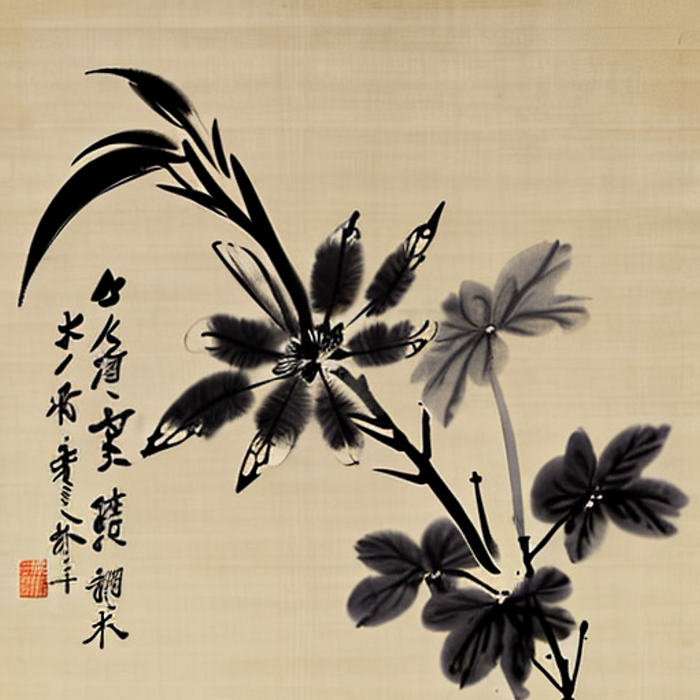

prompt = """

shukezouma,negative space,shuimobysim

a branch of flower, traditional chinese ink painting

"""

image = pipeline(prompt).images[0]

image.save("a branch of flower.png")

The prompt will generate an image like this:

a branch of flower, generated by Andrew Zhu using diffusers

You can call __load_lora() multiple times to load several LoRAs for one generation.

With this function, you can load LoRA files with a chosen weight at runtime and use them to generate high-quality images with Diffusers. LoRA loading is fast, usually taking only 1–2 seconds, which is far better than converting the LoRA into a merged model first (that produces another model file several GB in size).
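Loading several LoRAs can be wrapped in a small loop. In the sketch below, `apply_loras` is a hypothetical helper that takes the loader function as a parameter, and the second file path is a made-up placeholder.

```python
def apply_loras(pipeline, loras, loader):
    """Apply each (path, weight) pair in order using `loader`."""
    for lora_path, lora_weight in loras:
        pipeline = loader(pipeline=pipeline, lora_path=lora_path, lora_weight=lora_weight)
    return pipeline

loras = [
    (r"D:\sd_models\Lora\Moxin_10.safetensors", 0.8),
    (r"D:\sd_models\Lora\another_lora.safetensors", 0.4),  # hypothetical second file
]

# With the loader defined earlier in this section:
# pipeline = apply_loras(pipeline, loras, loader=__load_lora)
```

Since the loader adds the LoRA weights into the model in place, loading the same file twice doubles its effect, and there is no unload step. Reload the pipeline to return to the original weights.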

## 5. Use Custom Textual Inversions with Diffusers

Using custom Textual Inversions with the Diffusers package can be a powerful way to generate high-quality images. However, the official Diffusers documentation suggests that users need to train their own Textual Inversions, which can take up to an hour on a V100 GPU. This may not be practical for many users who want to generate images quickly.

So I investigated it and found a solution that can enable diffusers to use a textual inversion just like in Stable Diffusion WebUI. Below is the function I created to load a custom Textual Inversion.

import torch  # needed for torch.load

def load_textual_inversion(
    learned_embeds_path
    , text_encoder
    , tokenizer
    , token = None
    , weight = 0.5
):
    '''
    Use this function to load textual inversion model in model initialization stage
    or image generation stage.
    '''
    loaded_learned_embeds = torch.load(learned_embeds_path, map_location="cpu")
    string_to_token = loaded_learned_embeds['string_to_token']
    string_to_param = loaded_learned_embeds['string_to_param']

    # separate token and the embeds
    trained_token = list(string_to_token.keys())[0]
    embeds = string_to_param[trained_token]
    embeds = embeds[0] * weight

    # cast to dtype of text_encoder
    dtype = text_encoder.get_input_embeddings().weight.dtype
    embeds = embeds.to(dtype)  # .to() returns a new tensor, so reassign it

    # add the token in tokenizer
    token = token if token is not None else trained_token
    num_added_tokens = tokenizer.add_tokens(token)
    if num_added_tokens == 0:
        #print(f"The tokenizer already contains the token {token}.The new token will replace the previous one")
        raise ValueError(f"The tokenizer already contains the token {token}. Please pass a different `token` that is not already in the tokenizer.")

    # resize the token embeddings
    text_encoder.resize_token_embeddings(len(tokenizer))

    # get the id for the token and assign the embeds
    token_id = tokenizer.convert_tokens_to_ids(token)
    text_encoder.get_input_embeddings().weight.data[token_id] = embeds

    return (tokenizer,text_encoder)
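To see what the function does to the embedding table, here is a toy model in plain Python: register a new token, grow the table by one row, and store the learned vector scaled by the weight. `ToyTextEncoder` is a stand-in invented for this illustration and is not the real tokenizer or text encoder.

```python
class ToyTextEncoder:
    """Stand-in for a tokenizer plus embedding table (illustration only)."""

    def __init__(self, vocab, dim):
        self.vocab = dict(vocab)                         # token -> id
        self.embeddings = [[0.0] * dim for _ in vocab]   # one row per token

    def add_token(self, token, vector, weight=0.5):
        if token in self.vocab:
            raise ValueError(f"Token {token!r} already exists.")
        self.vocab[token] = len(self.embeddings)               # next free id
        self.embeddings.append([v * weight for v in vector])   # new scaled row
        return self.vocab[token]

toy = ToyTextEncoder({"cat": 0, "piano": 1}, dim=3)
token_id = toy.add_token("<my-style>", [2.0, 4.0, 6.0], weight=0.5)
print(token_id, toy.embeddings[token_id])  # prints: 2 [1.0, 2.0, 3.0]
```

Once the token has its own row, using it in a prompt makes the text encoder look up the learned vector, which is how the keyword triggers the textual inversion.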

In the load_textual_inversion() function, you need to provide the following arguments:

- learned_embeds_path: Path to the pre-trained textual inversion model file in .pt or .bin format.

- text_encoder: Text encoder object obtained from the Diffusion Pipeline.

- tokenizer: Tokenizer object obtained from the Diffusion Pipeline.

- token: Optional argument specifying the prompt token. By default, it is set to None. It is the keyword that will trigger the textual inversion in your prompt.

- weight: Optional argument specifying the weight of the textual inversion. By default, I set it to 0.5. you can