StyleGAN network blending

Last touched August 25, 2020

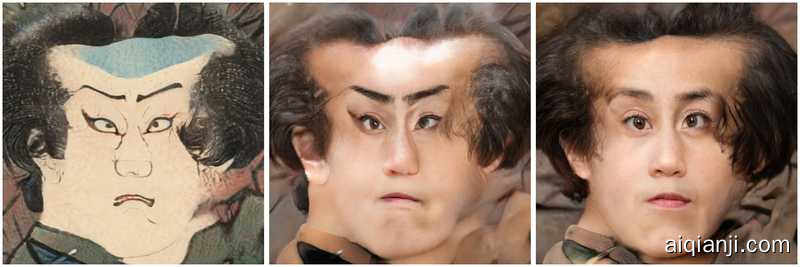

Making Ukiyo-e portraits real

In my previous post about attempting to create an ukiyo-e portrait generator I introduced a concept I called “layer swapping” in order to mix two StyleGAN models[1]. The aim was to blend a base model with a fine-tuned model created from it using transfer learning. The method differs from simply interpolating the weights of the two models[2] in that it lets you control independently which model the low and high resolution features come from; in my example I wanted to get the pose from normal photographs, and the texture/style from ukiyo-e prints[3].
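To make the difference between the two operations concrete, here is a minimal sketch on toy data. It assumes each generator's weights live in a dict keyed by layer names that contain the layer's resolution (such as `64x64`), and it uses plain Python lists as stand-ins for tensors. The function names, the layer names, and the choice to make the swap boundary inclusive on the high resolution side are all illustrative assumptions, not the code from the fork.

```python
import re


def layer_resolution(name):
    """Parse the resolution out of a layer name such as 'G_synthesis/64x64/Conv1/weight'."""
    match = re.search(r"(\d+)x\1", name)
    if match is None:
        raise ValueError(f"no resolution in layer name: {name!r}")
    return int(match.group(1))


def interpolate_models(model_a, model_b, alpha):
    """Plain weight interpolation: the same mix is applied at every resolution."""
    return {
        name: [(1 - alpha) * a + alpha * b for a, b in zip(model_a[name], model_b[name])]
        for name in model_a
    }


def swap_layers(low_res_model, high_res_model, swap_resolution):
    """Layer swapping: layers below swap_resolution come from low_res_model,
    layers at or above it come from high_res_model (assumed convention)."""
    blended = {}
    for name in low_res_model:
        use_low = layer_resolution(name) < swap_resolution
        source = low_res_model if use_low else high_res_model
        blended[name] = list(source[name])  # copy so the inputs stay untouched
    return blended


# Toy stand-ins: every photo weight is 0.0 and every ukiyo-e weight is 1.0.
photo_model = {f"G_synthesis/{r}x{r}/Conv1/weight": [0.0, 0.0] for r in (4, 8, 16, 32, 64)}
ukiyoe_model = {name: [1.0, 1.0] for name in photo_model}

mixed = interpolate_models(photo_model, ukiyoe_model, alpha=0.5)
blended = swap_layers(photo_model, ukiyoe_model, swap_resolution=32)
```

In `mixed` every layer is an even blend of the two models, whereas `blended` keeps the photo model's layers up to 16x16 (pose) and takes the ukiyo-e model's layers from 32x32 upwards (texture).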

The above example worked OK, but after a recent Twitter thread on model interpolation popped up, I realised that I had missed a really obvious variation on my earlier experiments. Rather than taking the low resolution layers (pose) from normal photos and the high resolution layers (texture) from ukiyo-e, I figured it would surely be interesting to try the other way round[4].

It was indeed interesting, and deeply weird too! Playing around with the level at which the swap occurs gives some control over how realistic the images are. If you’ve saved a bunch of network snapshots during transfer learning, the degree to which the networks have diverged also gives some interesting effects.
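The two knobs described here, the swap level and the fine-tuning snapshot, can be explored as a simple grid. The sketch below is only an illustration on toy weight dicts: the layer names, the snapshot labels (thousands of images seen, `kimg`) and the helper names are assumptions. In this "other way round" setup the low resolution layers come from the fine-tuned snapshot and the high resolution layers from the base faces model.

```python
import re
from itertools import product


def layer_resolution(name):
    """Parse the resolution out of a layer name such as 'G_synthesis/64x64/Conv1/weight'."""
    match = re.search(r"(\d+)x\1", name)
    if match is None:
        raise ValueError(f"no resolution in layer name: {name!r}")
    return int(match.group(1))


def swap_layers(low_res_model, high_res_model, swap_resolution):
    """Take layers below swap_resolution from low_res_model, the rest from high_res_model."""
    return {
        name: list((low_res_model if layer_resolution(name) < swap_resolution
                    else high_res_model)[name])
        for name in low_res_model
    }


def sweep(base_model, snapshots, swap_resolutions):
    """Blend the base model with every snapshot at every swap resolution."""
    return {
        (kimg, resolution): swap_layers(snapshots[kimg], base_model, resolution)
        for kimg, resolution in product(sorted(snapshots), swap_resolutions)
    }


# Toy stand-ins: the base weights are 0.0 and each snapshot drifts further away.
base = {f"G_synthesis/{r}x{r}/Conv1/weight": [0.0] for r in (4, 8, 16, 32, 64)}
snapshots = {kimg: {name: [kimg / 1000] for name in base} for kimg in (8, 40, 200)}

grid = sweep(base, snapshots, swap_resolutions=(8, 16, 32))
```

Each entry of `grid` is one candidate blend, so rendering the same latent through all of them gives a picture of how realism changes along both axes.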

You also see some wonderfully weird effects because ukiyo-e artists almost never drew faces straight on. As the original faces model mostly produces straight-on faces, the model has a somewhat tough time adapting to this change.

Blending other StyleGAN models

You can also swap models which are trained on very different domains (but one still has to be fine-tuned from the other). One example is the Frea Buckler model trained by Derrick Schultz. Swapping the original FFHQ-trained model into this one in a sense replaces the rendering with that of the faces model, while the structure still comes from the new one.

As well as being pretty mesmerising, it seems to give some hints as to how the model transferred domains. It looks like it has mostly adapted features corresponding to the background of the original images to serve as the structural elements of the new model. Many of the new images look like an “over the shoulder” viewpoint, and the original faces have been pushed out of frame (although, as you may have noticed, they are still lurking there deep in the model). Although you could probably understand these details of the mechanism of domain transfer using some statistical analysis of the network weights and internal activations, this is quite a simple and pretty way of getting an intuition.

Get the code and blend your own networks

I’ve shared an initial version of some code to blend two networks in this layer swapping manner (with some interpolation thrown into the mix) in my StyleGAN2 fork (see the blend_models.py file). There’s also an example colab notebook showing how to blend some StyleGAN models[5]; in the example I use a small faces model and one I trained on satellite images of the earth above.

I plan to write a more detailed article on some of the effects of different blending strategies and models, but for now the rest of this post documents some of the amazing things others have done with the approach.

Over to you, StyleGAN Twitter!

Disneyfication

Shortly after I shared my code and approach, some of the wonderful StyleGAN community on Twitter started trying things out. The first really amazing network blend was by Doron Adler, who mixed a model fine-tuned on just a few images of Disney/Dreamworks/Pixar characters to give these uncannily cartoonish characters.

He also used a StyleGAN encoder to find the latent representation of a real face in the “real face” model, then generated an image from that representation using the “blended” model, with amazing semi-real cartoonification results:

I think this approach would make a great way of generating a paired image dataset for training a pix2pixHD model, i.e. the StyleGAN Distillation approach.
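As a rough illustration of how such a paired dataset could be assembled, the sketch below feeds the same latent to both generators and stores the two outputs as an (input, target) pair. The two generator callables are toy stand-ins, and the assumption that the base and blended models accept the same latent is mine; a real pipeline would call the actual networks and save images instead.

```python
import random


def make_paired_dataset(base_generator, blended_generator, num_pairs, latent_dim=512, seed=0):
    """Render each random latent with both generators and keep the results as a pair."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_pairs):
        latent = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        pairs.append((base_generator(latent), blended_generator(latent)))
    return pairs


# Toy stand-ins for the two networks: they just tag the latent they were given.
def toy_base_generator(latent):
    return ("real face", tuple(latent))


def toy_blended_generator(latent):
    return ("toonified face", tuple(latent))


pairs = make_paired_dataset(toy_base_generator, toy_blended_generator, num_pairs=3, latent_dim=4)
```

Because both images in a pair come from the same latent, they should share pose and identity, which is what an image-to-image model needs from its training pairs.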

Resolution dependent interpolation: foxes, ponies, people, and furries

It was originally Arfa who asked me to share some of the layer swapping code I had been working on. He followed up by bringing together the weight interpolation and layer swapping ideas to combine a bunch of different models (with some neat visualisations):

The results are pretty amazing. This sort of “resolution dependent model interpolation” is the logical generalisation of both the interpolation and swapping ideas. It looks like it gives a completely new axis of control over a generative model (assuming you have some fine-tuned models which can be combined). Take these example frames from one of the above videos:

On the left is the output of the anime model, on the right that of the My Little Pony model, and in the middle the mid-resolution layers have been transplanted from My Little Pony into anime. This essentially introduces middle resolution features, such as the eyes and nose, from My Little Pony into anime characters!
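One way to picture resolution dependent interpolation is as a blend whose mixing weight is a function of the layer's resolution. The sketch below is a toy version under the same assumptions as before (weights in a dict keyed by names containing the resolution, lists standing in for tensors); the helper names and the 16 to 32 band chosen as "mid-resolution" are illustrative guesses, not Arfa's actual settings.

```python
import re


def layer_resolution(name):
    """Parse the resolution out of a layer name such as 'G_synthesis/64x64/Conv1/weight'."""
    match = re.search(r"(\d+)x\1", name)
    if match is None:
        raise ValueError(f"no resolution in layer name: {name!r}")
    return int(match.group(1))


def blend_by_resolution(model_a, model_b, alpha_for_resolution):
    """Interpolate each layer with a weight that depends on its resolution.

    alpha = 0 keeps model_a's layer, alpha = 1 takes model_b's layer.
    """
    blended = {}
    for name, weights_a in model_a.items():
        alpha = alpha_for_resolution(layer_resolution(name))
        blended[name] = [(1 - alpha) * a + alpha * b for a, b in zip(weights_a, model_b[name])]
    return blended


def mid_band(resolution, low=16, high=32):
    """Take model_b only for the (assumed) mid-resolution layers."""
    return 1.0 if low <= resolution <= high else 0.0


# Toy stand-ins: anime weights are 0.0, My Little Pony weights are 1.0.
anime = {f"G_synthesis/{r}x{r}/Conv1/weight": [0.0] for r in (4, 8, 16, 32, 64, 128)}
pony = {name: [1.0] for name in anime}

transplanted = blend_by_resolution(anime, pony, mid_band)
```

Plain interpolation is the special case of a constant function such as `lambda resolution: 0.5`, and layer swapping is a step function that jumps from 0 to 1 at the swap resolution.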

Parameter tuning

Nathan Shipley made some beautiful experiments trying to get the Toonification effect just right by adjusting two of the key parameters: the amount of transfer learning to apply (measured in thousands of iterations) and the resolution layer from which to swap. By tuning these two you can pick out just the degree of Toonification to apply; see this lovely figure made by Nathan:

He then applied the First Order Motion Model, with some pretty amazing results:

Going further

I think there’s lots of potential to look at these blending strategies further, in particular interpolating between models not only as a function of resolution, but also differently for different channels. If you can identify the subset of neurons which correspond (for example) to the My Little Pony eyes, you could swap those specifically into the anime model and modify the eyes without affecting other features, such as the nose. Simple clustering of the internal activations has already been shown to be an effective way of identifying neurons which correspond to attributes in the image in the Editing in Style paper, so this seems pretty straightforward to try!
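A channel-wise swap could look something like the sketch below. It is untested on real models and makes several assumptions: a layer is represented as a list of per-output-channel weight rows, the channel indices have already been found by some other means (such as clustering activations), and `swap_channels` is a made-up helper. In a real network the swapped channels would also have to stay compatible with the layers that consume them.

```python
def swap_channels(target_layer, donor_layer, channel_indices):
    """Return a copy of target_layer with the listed output channels taken from donor_layer."""
    selected = set(channel_indices)
    return [
        list(donor_row if index in selected else target_row)
        for index, (target_row, donor_row) in enumerate(zip(target_layer, donor_layer))
    ]


# Toy stand-ins: four output channels with two weights each.
anime_layer = [[0.0, 0.0] for _ in range(4)]
pony_layer = [[1.0, 1.0] for _ in range(4)]

# Hypothetical indices of the channels that respond to the eyes.
eye_channels = [1, 3]

edited_layer = swap_channels(anime_layer, pony_layer, eye_channels)
```

Only the selected rows change, so the rest of the anime layer (and with it, ideally, features such as the nose) is left as it was.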

1. I did all this work using StyleGAN2, but have generally taken to referring to both versions 1 and 2 as StyleGAN; StyleGAN 1 is just config-a in the StyleGAN 2 code.

2. Interpolating two StyleGAN models has been used quite a bit by many on Twitter to mix models for interesting results. As far as I’m aware, the idea first cropped up in generative models in the ESRGAN paper.

3. If this sounds a little bit like style transfer then you’re not far off; there are some similarities. In fact, StyleGAN’s architecture was inspired by networks designed for style transfer.

4. It seems to be a general rule that neural networks are better at adding texture than removing it.

5. If you’re in search of some models to blend then see the collection of pretrained StyleGAN models (I intend to add a field as to which ones have been fine-tuned in the near future).