KDnuggets Exclusive: Interview with Yann LeCun, Deep Learning Expert, Director of Facebook AI Lab

Tags: Andrew Ng, Deep Learning, Facebook, Interview, NYU, Support Vector Machines, Vladimir Vapnik, Yann LeCun

We discuss what enabled Deep Learning to achieve remarkable successes recently, his argument with Vapnik about (deep) neural nets vs kernel (support vector) machines, and what kind of AI can we expect from Facebook.

本文主要讨论是什么给深度学习带来了今日如此令世人瞩目的成绩,Yann Lecun和Vapnik关于神经网络和核函数(支持向量机)的争论,以及Facebook理想中的AI是什么样子的。

By Gregory Piatetsky, KDnuggets.

Gregory Piatetsky,KDD会议创始人,是1989,1991和1993年KDD的主席,SIGKDD第一个服务奖章获得者,KDnuggets网站和周刊的维护者。

Prof. Yann LeCun](http://yann.lecun.com/) has been much in the news lately, as one of the leading experts in Deep Learning - a breakthrough advance in machine learning which has been achieving amazing successes, as a founding Director of NYU Center for Data Science, and as the newly appointed Director of the AI Research Lab at Facebook. See his bio at the end of this post and you can learn more about his work at yann.lecun.com/.

[

He is extremely busy, combining his new job at Facebook and his old job at NYU, so I am very pleased that he agreed to answer a few questions for KDnuggets readers.

Gregory Piatetsky: 1. Artificial Neural networks have been studied for 50 years, but only recently they have achieved remarkable successes, in such difficult tasks as speech and image recognition, with Deep Learning Networks. What factors enabled this success - big data, algorithms, hardware?

问:人工神经网络的研究已经有五十多年了,但是最近才有非常令人瞩目的结果,在诸如语音和图像识别这些比较难的问题上,是什么因素让深度学习网络胜出了呢?数据?算法?硬件?

Yann LeCun: Despite a commonly-held belief, there have been numerous successful applications of neural nets since the late 80's.

Deep learning has come to designate any learning method that can train a system with more than 2 or 3 non-linear hidden layers.

Around 2003, Geoff Hinton, Yoshua Bengio and myself initiated a kind of "conspiracy" to revive the interest of the machine learning community in the problem of learning representations (as opposed to just learning simple classifiers). It took until 2006-2007 to get some traction, primarily through new results on unsupervised training (or unsupervised pre-training, followed by supervised fine-tuning), with work by Geoff Hinton, Yoshua Bengio, Andrew Ng and myself.

But much of the recent practical applications of deep learning use purely supervised learning based on back-propagation, altogether not very different from the neural nets of the late 80's and early 90's.

答:虽然大部分人的感觉是人工神经网络最近几年才迅速崛起,但实际上上个世纪八十年代以后,就有很多成功的应用了。深度学习指的是,任何可以训练 多于两到三个非线性隐含层模型的学习算法。大概是2003年,Geoff Hinton,Yoshua Bengio和我策划并鼓动机器学习社区将兴趣放在表征学习这个问题上(和简单的分类器学习不同)。直到2006-2007年左右才有了点味道,主要是通 过无监督学习的结果(或者说是无监督预训练,伴随监督算法的微调),这部分工作是Geoff Hinton,Yoshua Bengio,Andrew Ng和我共同进行的。

但是大多数最近那些有效果的深度学习,用得还是纯监督学习加上后向传播算法,跟上个世纪八十年代末九十年代初的神经网络没太大区别。

What's different is that we can run very large and very deep networks on fast GPUs (sometimes with billions of connections, and 12 layers) and train them on large datasets with millions of examples. We also have a few more tricks than in the past, such as a regularization method called "drop out", rectifying non-linearity for the units, different types of spatial pooling, etc.

Many successful applications, particularly for image recognition use the convolutional network architecture (ConvNet), a concept I developed at Bell Labs in the late 80s and early 90s. At Bell Labs in the mid 1990s we commercially deployed a number of ConvNet-based systems for reading the amount on bank check automatically (printed or handwritten).

At some point in the late 1990s, one of these systems was reading 10 to 20% of all the checks in the US. Interest in ConvNet was rekindled in the last 5 years or so, with nice work from my group, from Geoff Hinton, Andrew Ng, and Yoshua Bengio, as from Jurgen Schmidhuber's group at IDSIA in Switzerland, and from NEC Labs in California. ConvNets are now widely used by Facebook, Google, Microsoft, IBM, Baidu, NEC and others for image and speech recognition. [GP: A student of Yann Lecun recently won Dogs vs Cats competition on Kaggle using a version of ConvNet, achieving 98.9% accuracy.]

区别在于,我们现在可以在速度很快的GPU上跑非常大非常深层的网络(比如有时候有十亿连接,12层),而且还可以用大规模数据集里面的上百万的样本来训练。过去我们还有一些训练技巧,比如有个正则化的方法叫做 dropout ,还有克服神经元的非线性问题,以及不同类型的空间池化(spatial pooling)等等。

很多成功的应用,尤其是在图像识别上,都采用的是卷积神经网络( ConvNet ),是我上个世纪八九十年代在贝尔实验室开发出来的。后来九十年代中期,贝尔实验室商业化了一批基于卷积神经网络的系统,用于识别银行支票(印刷版和手写版均可识别)。

经过了一段时间,其中一个系统识别了全美大概10%到20%的支票。最近五年,对于卷积神经网络的兴趣又卷土重来了,很多漂亮的工作,我的研究小组有参 与,以及Geoff Hinton,Andrew Ng和Yoshua Bengio,还有瑞士IDSI的AJargen Schmidhuber,以及加州的NEC。卷积神经网络现在被Google,Facebook,IBM,百度,NEC以及其他互联网公司广泛使用,来进 行图像和语音识别。

GP: 2. Deep learning is not an easy to use method. What tools, tutorials would you recommend to data scientists, who want to learn more and use it on their data? Your opinion of Pylearn2, Theano?

问:深度学习可不是一个容易用的方法,你能给大家推荐一些工具和教程么?大家都挺想从在自己的数据上跑跑深度学习。

Yann LeCun: There are two main packages:* Torch7, and

They have slightly different philosophies and relative advantages and disadvantages. Torch7 is an extension of the LuaJIT language that adds multi-dimensional arrays and numerical library. It also includes an object-oriented package for deep learning, computer vision, and such. The main advantage of Torch7 is that LuaJIT is extremely fast, in addition to being very flexible (it's a compiled version of the popular Lua language).

Theano+Pylearn2 has the advantage of using Python (it's widely used, and has lots of libraries for many things), and the disadvantage of using Python (it's slow).

答:基本上工具有两个推荐:

- Torch7

- Theano + Pylearn2

他们的设计哲学不尽相同,各有千秋。Torch7是LuaJIT语言的一个扩展,提供了多维数组和数值计算库。它还包括一个面向对象的深度学习开 发包,可用于计算机视觉等研究。Torch7的主要优点在于LuaJIT非常快,使用起来也非常灵活(它是流行脚本语言Lua的编译版本)。

Theano加上Pylearn先天就有Python语言带来的优势(Python是广泛应用的脚本语言,很多领域都有对应的开发库),劣势也是应为用Python,速度慢。

GP: 3. You and I have met a while ago at a scientific advisory meeting of KXEN, where Vapnik's Statistical Learning Theory and SVM were a major topic. What is the relationship between Deep Learning and Support Vector Machines / Statistical Learning Theory?

问:咱俩很久以前在KXEN的科学咨询会议上见过,当时Vapnik的概率学习理论和支持向量机(SVM)是比较主流的。深度学习和支持向量机/概率学习理论有什么关联?

Yann LeCun: Vapnik and I were in nearby office at Bell Labs in the early 1990s, in Larry Jackel's Adaptive Systems Research Department. Convolutional nets, Support Vector Machines, Tangent Distance, and several other influential methods were invented within a few meters of each other, and within a few years of each other. When AT&T spun off Lucent In 1995, I became the head of that department which became the Image Processing Research Department at AT&T Labs - Research. Machine Learning members included Yoshua Bengio, Leon Bottou, and Patrick Haffner, and Vladimir Vapnik. Visitors and interns included Bernhard Scholkopf, Jason Weston, Olivier Chapelle, and others.

答:1990年前后,我和Vapnik在贝尔实验室共事,归属于Larry Jackel的自适应系统研究部,我俩办公室离得很近。卷积神经网络,支持向量机,正切距离以及其他后来有影响的方法都是在这发明出来的,问世时间也相差 无几。1995年AT&T拆分朗讯以后,我成了这个部门的领导,部门后来改成了AT&T实验室的图像处理研究部。部门当时的机器学习专家 有Yoshua Bengio, Leon Bottou,Patrick Haffner以及Vladimir Vapnik,还有几个访问学者以及实习生。

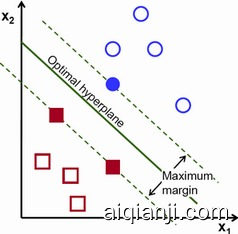

Vapnik and I often had lively discussions about the relative merits of (deep) neural nets and kernel machines. Basically, I have always been interested in solving the problem of learning features or learning representations. I had only a moderate interest in kernel methods because they did nothing to address this problem. Naturally, SVMs are wonderful as a generic classification method with beautiful math behind them. But in the end, they are nothing more than simple two-layer systems. The first layer can be seen as a set of units (one per support vector) that measure a kind of similarity between the input vector and each support vector using the kernel function. The second layer linearly combines these similarities.

Vapnik and I often had lively discussions about the relative merits of (deep) neural nets and kernel machines. Basically, I have always been interested in solving the problem of learning features or learning representations. I had only a moderate interest in kernel methods because they did nothing to address this problem. Naturally, SVMs are wonderful as a generic classification method with beautiful math behind them. But in the end, they are nothing more than simple two-layer systems. The first layer can be seen as a set of units (one per support vector) that measure a kind of similarity between the input vector and each support vector using the kernel function. The second layer linearly combines these similarities.

我和Vapnik经常讨论深度网络和核函数的相对优缺点。基本来讲,我一直对于解决特征学习和表征学习感兴趣。我对核方法兴趣一般,因为它们不能 解决我的问题。老实说,支持向量机作为通用分类方法来讲,是非常不错的。但是话说回来,它们也只不过是简单的两层模型,第一层是用核函数来计算输入数据和 支持向量之间相似度的单元集合。第二层则是线性组合了这些相似度。

It's a two-layer system in which the first layer is trained with the simplest of all unsupervised learning method: simply store the training samples as prototypes in the units. Basically, varying the smoothness of the kernel function allows us to interpolate between two simple methods: linear classification, and template matching. I got in trouble about 10 years ago by saying that kernel methods were a form of glorified template matching. Vapnik, on the other hand, argued that SVMs had a very clear way of doing capacity control. An SVM with a "narrow" kernel function can always learn the training set perfectly, but its generalization error is controlled by the width of the kernel and the sparsity of the dual coefficients. Vapnik really believes in his bounds. He worried that neural nets didn't have similarly good ways to do capacity control (although neural nets do have generalization bounds, since they have finite VC dimension).

第一层就是用最简单的无监督模型训练的,即将训练数据作为原型单元存储起来。基本上来说,调节核函数的平滑性,产生了两种简单的分类方法:线性分 类和模板匹配。大概十年前,由于评价核方法是一种包装美化过的模板匹配,我惹上了麻烦。Vapnik,站在我对立面,他描述支持向量机有非常清晰的扩展控 制能力。“窄”核函数所产生的支持向量机,通常在训练数据上表现非常好,但是其普适性则由核函数的宽度以及对偶系数决定。Vapnik对自己得出的结果非 常自信。他担心神经网络没有类似这样简单的方式来进行扩展控制(虽然神经网络根本没有普适性的限制,因为它们都是无限的VC维)。

My counter argument was that the ability to do capacity control was somewhat secondary to the ability to compute highly complex function with a limited amount of computation. Performing image recognition with invariance to shifts, scale, rotation, lighting conditions, and background clutter was impossible (or extremely inefficient) for a kernel machine operating at the pixel level. But it was quite easy for deep architectures such as convolutional nets.

我反驳了他,相比用有限计算能力来计算高复杂度函数这种能力,扩展控制只能排第二。图像识别的时候,移位、缩放、旋转、光线条件以及背景噪声等等问题,会导致以像素做特征的核函数非常低效。但是对于深度架构比如卷积网络来说却是小菜一碟。

GP: 4. Congratulations on your recent appointment as the head of Facebook new AI Lab. What can you tell us about AI and Machine Learning advances we can expect from Facebook in the next couple of years?

问:祝贺你成为Facebook人工智能实验室的主任。你能给讲讲未来几年Facebook在人工智能和机器学习上能有什么产出么?

Yann LeCun: Thank you! it's a very exciting opportunity. Basically, Facebook's main objective is to enable communication between people. But people today are bombarded with information from friends, news organizations, websites, etc. Facebook helps people sift through this mass of information. But that requires to know what people are interested in, what motivates them, what entertains them, what makes them learn new things. This requires an understanding of people that only AI can provide. Progress in AI will allow us to understand content, such as text, images, video, speech, audio, music, among other things.

答:非常谢谢你,这个职位是个非常难得的机会。基本上来讲,Facebook的主要目标是让人与人更好的沟通。但是 当今的人们被来自朋友、新闻、网站等等信息来源狂哄乱炸。 Facebook 帮助人们来在信息洪流中找到正确的方向。这就需要Facebook 能知道人们对什么感兴趣,什么是吸引人的,什么让人快乐,什么让人们学到新东西。 这些知识,只有人工智能可以提供。人工智能的进展,将让我们理解各种内容,比如文字,图片,视频,语音,声音,音乐等等。

Gregory Piatetsky: 5. Looking longer term, how far will AI go? Will we reach Singularity as described by Ray Kurzweil?

问:长期来看,你觉得人工智能会变成什么样?我们会不会达到Ray Kurzweil所谓的奇点?

Yann LeCun: We will have intelligent machines. It's clearly a matter of time. We will have machines that, without being very smart, will do useful things, like drive our cars autonomously.

答:我们肯定会拥有智能机器。这只是时间问题。我们肯定会有那种虽然不是非常聪明,但是可以做有用事情的机器,比如无人驾驶车。

How long will it take? AI researchers have a long history of under-estimated the difficulties of building intelligent machines. I'll use an analogy: making progress in research is like driving a car to a destination. When we find a new paradigm or a new set of techniques, it feels like we are driving a car on a highway and nothing can stop us until we reach the destination.

至于这需要多长时间?人工智能研究者之前很长的一段时间都低估了制造智能机器的难度。我可以打个比方:研究进展就好像开车去目的地。当我们在研究上发现了新的技术,就类似在高速路上开车一样,无人可挡,直达目的地。

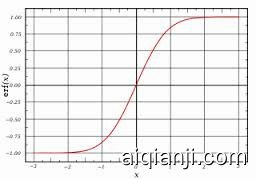

But the reality is that we are really driving in a thick fog and we don't realize that our highway is really a parking lot with a brick wall at the far end. Many smart people have made that mistake, and every new wave in AI was followed by a period of unbounded optimism, irrational hype, and a backlash. It happened with "perceptrons", "rule-based systems", "neural nets", "graphical models", "SVM", and may happen with "deep learning", until we find something else. But these paradigms were never complete failures. They all left new tools, new concepts, and new algorithms.

但是现实情况是,我们是在一片浓雾里开车,我们没有意识到,研究发现的所谓的高速公路,其实只是一个停车场,前方的尽头有一个砖墙。很多聪明人都 犯了这个错误,人工智能的每一个新浪潮,都会带来这么一段从盲目乐观到不理智最后到沮丧的阶段。感知机技术、基于规则的专家系统、神经网络、图模型、支持 向量机甚至是深度学习,无一例外,直到我们找到新的技术。当然这些技术,从来就不是完全失败的,它们为我们带来了新的工具、概念和算法。

Although I do believe we will eventually build machines that will rival humans in intelligence, I don't really believe in the singularity. We feel like we are on an exponentially growing curve of progress. But we could just as well be on a sigmoid curve. Sigmoids very much feel like exponentials at first. Also, the singularity assumes more than an exponential, it assumes an asymptote. The difference between dynamic evolutions that follow linear, quadratic, exponential, asymptotic, or sigmoidal shapes ar