Netflix Recommendations: Beyond the 5 stars (Part 1)

Netflix Technology Blog

Apr 6, 2012

by Xavier Amatriain and Justin Basilico (Personalization Science and Engineering)

In this two-part blog post, we will open the doors of one of the most valued Netflix assets: our recommendation system. In Part 1, we will relate the Netflix Prize to the broader recommendation challenge, outline the external components of our personalized service, and highlight how our task has evolved with the business. In Part 2, we will describe some of the data and models that we use and discuss our approach to algorithmic innovation that combines offline machine learning experimentation with online A/B testing. Enjoy… and remember that we are always looking for more star talent to add to our great team, so please take a look at our jobs page.

The Netflix Prize and the Recommendation Problem

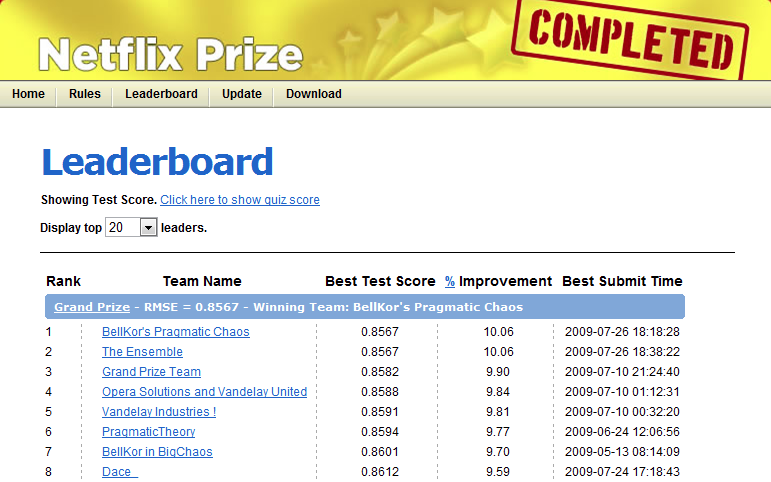

In 2006 we announced the Netflix Prize, a machine learning and data mining competition for movie rating prediction. We offered $1 million to whoever improved the accuracy of our existing system called Cinematch by 10%. We conducted this competition to find new ways to improve the recommendations we provide to our members, which is a key part of our business. However, we had to come up with a proxy question that was easier to evaluate and quantify: the root mean squared error (RMSE) of the predicted rating. The race was on to beat our RMSE of 0.9525 with the finish line of reducing it to 0.8572 or less.
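
To make the proxy metric concrete, here is a minimal Python sketch of RMSE together with the arithmetic behind the finish line. The `rmse` helper and the toy ratings are illustrative assumptions; only the 0.9525 baseline and the 10% target come from the text. A 10% reduction of 0.9525 gives 0.85725, and 0.8572 is the largest four-decimal score that meets it.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual ratings."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("expected two non-empty sequences of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


CINEMATCH_RMSE = 0.9525                  # baseline quoted in the post
target = CINEMATCH_RMSE * (1 - 0.10)     # 10% lower: 0.85725

# Toy predictions vs. true star ratings (made-up numbers).
print(rmse([3.5, 4.0, 2.0], [4, 4, 1]))  # ≈ 0.6455
print(round(target, 5))
```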

A year into the competition, the Korbell team won the first Progress Prize with an 8.43% improvement. They reported more than 2000 hours of work in order to come up with the final combination of 107 algorithms that gave them this prize. And, they gave us the source code. We looked at the two underlying algorithms with the best performance in the ensemble: Matrix Factorization (which the community generally called SVD, Singular Value Decomposition) and Restricted Boltzmann Machines (RBM). SVD by itself provided a 0.8914 RMSE, while RBM alone provided a competitive but slightly worse 0.8990 RMSE. A linear blend of these two reduced the error to 0.88. To put these algorithms to use, we had to work to overcome some limitations, for instance that they were built to handle 100 million ratings, instead of the more than 5 billion that we have, and that they were not built to adapt as members added more ratings. But once we overcame those challenges, we put the two algorithms into production, where they are still used as part of our recommendation engine.
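
A linear blend like the one described above can be sketched as an ordinary least-squares fit of one weight per model plus an intercept. This is a hedged illustration in Python with NumPy, not the team's code: the predictions are synthetic (true rating plus independent noise) and the helper names are hypothetical. Because using a single model alone is one of the weightings the fit can choose, the blend's RMSE on the data it was fit to is never worse than either input's; in practice the weights would be fit on held-out ratings rather than on the training data.

```python
import numpy as np


def fit_linear_blend(predictions, actual):
    """Least-squares blend weights: one per model column, plus a final intercept."""
    design = np.column_stack([predictions, np.ones(len(actual))])
    weights, *_ = np.linalg.lstsq(design, actual, rcond=None)
    return weights


def apply_blend(predictions, weights):
    """Combine model predictions with weights returned by fit_linear_blend."""
    design = np.column_stack([predictions, np.ones(predictions.shape[0])])
    return design @ weights


def rmse(pred, actual):
    return float(np.sqrt(np.mean((pred - actual) ** 2)))


# Synthetic stand-ins for two models' predictions; NOT Netflix Prize data.
rng = np.random.default_rng(0)
actual = rng.integers(1, 6, size=10_000).astype(float)       # 1..5 stars
svd_like = actual + rng.normal(0.0, 0.9, size=actual.size)
rbm_like = actual + rng.normal(0.0, 0.9, size=actual.size)

preds = np.column_stack([svd_like, rbm_like])
w = fit_linear_blend(preds, actual)
blended = apply_blend(preds, w)
print(rmse(svd_like, actual), rmse(rbm_like, actual), rmse(blended, actual))
```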

If you followed the Prize competition, you might be wondering what happened with the final Grand Prize ensemble that won the $1M two years later. This is a truly impressive compilation and culmination of years of work, blending hundreds of predictive models to finally cross the finish line. We evaluated some of the new methods offline but the additional accuracy gains that we measured did not seem to justify the engineering effort needed to bring them into a production environment. Also, our focus on improving Netflix personalization had shifted to the next level by then. In the remainder of this post we will explain how and why it has shifted.

From US DVDs to Global Streaming

One of the reasons our focus in the recommendation algorithms has changed is because Netflix as a whole has changed dramatically in the last few years. Netflix launched an instant streaming service in 2007, one year after the Netflix Prize began. Streaming has not only changed the way our members interact with the service, but also the type of data available to use in our algorithms. For DVDs, our goal is to help people fill their queue with titles to receive in the mail over the coming days and weeks; selection is distant in time from viewing, people select carefully because exchanging a DVD for another takes more than a day, and we get no feedback during viewing. For streaming, members are looking for something great to watch right now; they can sample a few videos before settling on one, they can consume several in one session, and we can observe viewing statistics such as whether a video was watched fully or only partially.

Another big change was the move from a single website into hundreds of devices. The integration with the Roku player and the Xbox was announced in 2008, two years into the Netflix Prize competition. Just a year later, Netflix streaming made it onto the iPhone. Now it is available on a multitude of devices, ranging from a myriad of Android devices to the latest Apple TV.

Two years ago, we went international with the launch in Canada. In 2011, we added 43 Latin American countries and territories to the list. And just recently, we launched in the UK and Ireland. Today, Netflix has more than 23 million subscribers in 47 countries. Those subscribers streamed 2 billion hours from hundreds of different devices in the last quarter of 2011. Every day they add 2 million movies and TV shows to the queue and generate 4 million ratings.

We have adapted our personalization algorithms to this new scenario in such a way that now 75% of what people watch is from some sort of recommendation. We reached this point by continuously optimizing the member experience and have measured significant gains in member satisfaction whenever we improved the personalization for our members. Let us now walk you through some of the techniques and approaches that we use to produce these recommendations.

Everything is a Recommendation

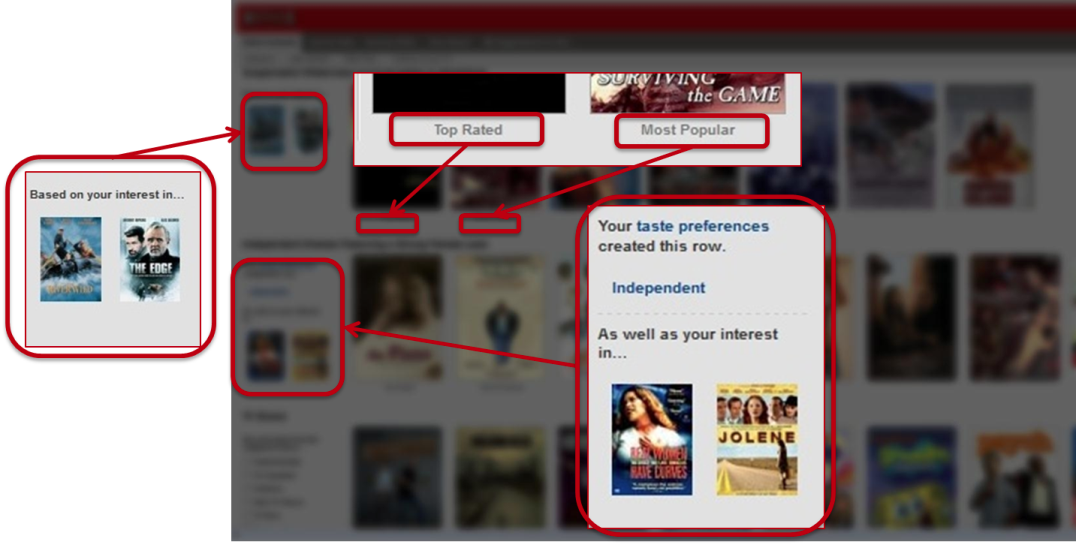

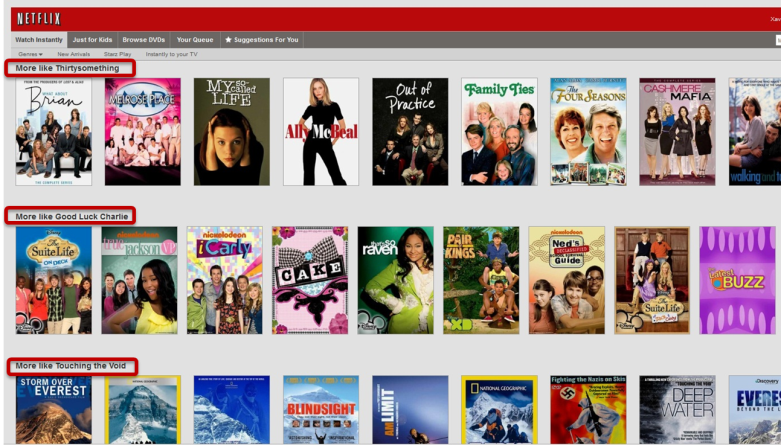

We have discovered through the years that there is tremendous value to our subscribers in incorporating recommendations to personalize as much of Netflix as possible. Personalization starts on our homepage, which consists of groups of videos arranged in horizontal rows. Each row has a title that conveys the intended meaningful connection between the videos in that group. Most of our personalization is based on the way we select rows, how we determine what items to include in them, and in what order to place those items.

Take as a first example the Top 10 row: this is our best guess at the ten titles you are most likely to enjoy. Of course, when we say “you”, we really mean everyone in your household. It is important to keep in mind that Netflix’ personalization is intended to handle a household that is likely to have different people with different tastes. That is why when you see your Top 10, you are likely to discover items for dad, mom, the kids, or the whole family. Even for a single-person household we want to appeal to your range of interests and moods. To achieve this, in many parts of our system we are not only optimizing for accuracy, but also for diversity.
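
The post does not say how diversity is optimized. As one well-known possibility, here is a sketch of greedy Maximal Marginal Relevance (MMR) re-ranking, which trades a title's relevance score against its similarity to titles already picked. MMR, the Jaccard genre-overlap similarity, the `trade_off` parameter, and the example household titles are all assumptions for illustration, not Netflix's method.

```python
def diversify(scores, genres, k, trade_off=0.7):
    """Greedy MMR-style re-ranking of titles.

    scores: dict title -> relevance score (higher is better).
    genres: dict title -> set of genre tags, used as a crude similarity signal.
    trade_off: 1.0 ranks purely by relevance; lower values reward diversity.
    """
    def similarity(a, b):
        union = genres[a] | genres[b]
        return len(genres[a] & genres[b]) / len(union) if union else 0.0  # Jaccard

    selected = []
    remaining = sorted(scores)  # sorted so ties resolve deterministically

    def marginal_value(title):
        # Penalize a candidate by its closest match among titles already selected.
        redundancy = max((similarity(title, s) for s in selected), default=0.0)
        return trade_off * scores[title] - (1 - trade_off) * redundancy

    while remaining and len(selected) < k:
        best = max(remaining, key=marginal_value)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical household candidates.
scores = {"Action A": 0.95, "Action B": 0.93, "Action C": 0.92,
          "Cartoon": 0.80, "Documentary": 0.75}
genres = {"Action A": {"action"}, "Action B": {"action"}, "Action C": {"action"},
          "Cartoon": {"kids", "animation"}, "Documentary": {"documentary"}}
print(diversify(scores, genres, k=3, trade_off=1.0))  # ['Action A', 'Action B', 'Action C']
print(diversify(scores, genres, k=3, trade_off=0.7))  # ['Action A', 'Cartoon', 'Documentary']
```

With `trade_off=1.0` the function returns the three action titles in score order; lowering it to 0.7 lets a cartoon and a documentary displace lower-scored action titles, the kind of mix a shared household row might want.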

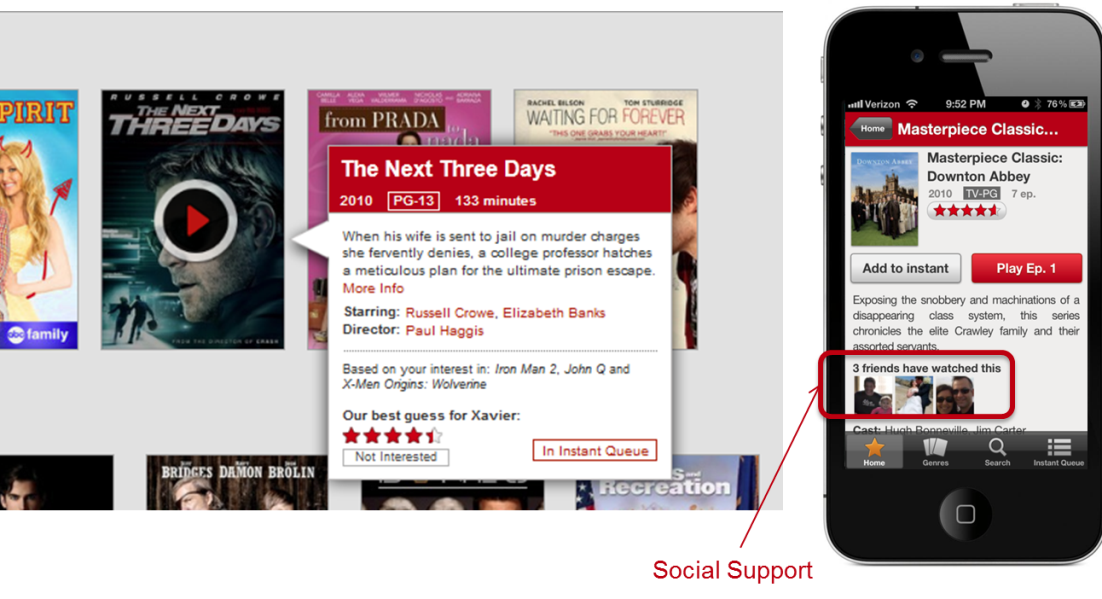

Another important element in Netflix’ personalization is **awareness**. We want members to be aware of how we are adapting to their tastes. This not only promotes trust in the system, but encourages members to give feedback that will result in better recommendations. A different way of promoting trust with the personalization component is to provide **explanations** as to why we decide to recommend a given movie or show. We are not recommending it because it suits our business needs, but because it matches the information we have from you: your explicit taste preferences and ratings, your viewing history, or even your friends’ recommendations.

On the topic of friends, we recently released our Facebook Connect feature in 46 of the 47 countries where we operate: all but the US, because of concerns about the Video Privacy Protection Act (VPPA). Knowing about your friends not only gives us another signal to use in our personalization algorithms, but also allows for different rows that rely mostly on your social circle to generate recommendations.

Some of the most recognizable personalization in our service is the collection of “genre” rows. These range from familiar high-level categories like “Comedies” and “Dramas” to highly tailored slices such as “Imaginative Time Travel Movies from the 1980s”. Each row represents 3 layers of personalization: the choice of genre itself, the subset of titles selected within that genre, and the ranking of those titles. Members connect with these rows so well that we measure an increase in member retention by placing the most tailored rows higher on the page instead of lower. As with other personalization elements, freshness and diversity are taken into account when deciding which genres to show from the thousands possible.

We present an explanation for the choice of rows using a member’s implicit genre preferences (recent plays, ratings, and other interactions) or explicit feedback provided through our taste preferences survey. We will also invite members to focus a row with additional explicit preference feedback when this is lacking.

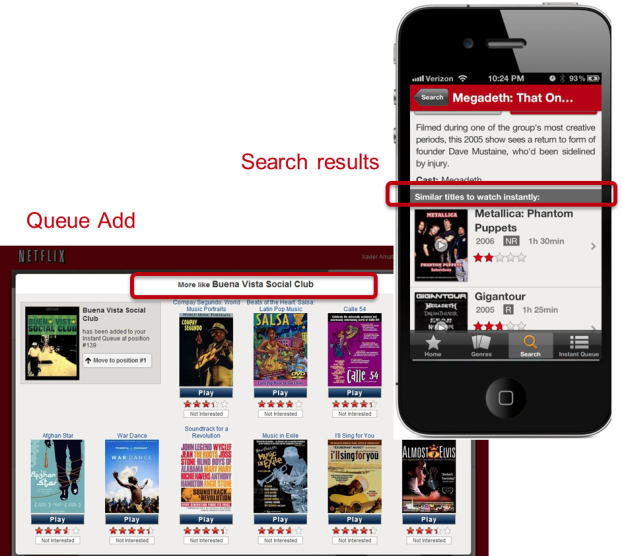

Similarity is also an important source of personalization in our service. We think of similarity in a very broad sense; it can be between movies or between members, and can be in multiple dimensions such as metadata, ratings, or viewing data. Furthermore, these similarities can be blended and used as features in other models. Similarity is used in multiple contexts, for example in response to a member’s action such as searching or adding a title to the queue. It is also used to generate rows of “ad hoc genres” based on similarity to titles that a member has interacted with recently. If you are interested in a more in-depth description of the architecture of the similarity system, you can read about it in this past post on the blog.
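
As a hedged sketch of one similarity dimension, the snippet below computes item-item cosine similarity from a small member-by-title rating matrix. Treating missing ratings as 0 and choosing cosine similarity are simplifying assumptions; the post does not specify the measures Netflix uses. Applying the same function to the transposed matrix would give member-to-member similarity instead.

```python
import numpy as np


def item_cosine_similarity(ratings):
    """Cosine similarity between the columns (titles) of a member x title matrix.

    Unrated cells are encoded as 0, a simplification that treats "not rated"
    the same as a rating of 0.
    """
    norms = np.linalg.norm(ratings, axis=0)
    norms[norms == 0] = 1.0            # leave never-rated titles as all-zero vectors
    unit = ratings / norms             # divide each column by its length
    return unit.T @ unit


def most_similar(sim, title_index, top_n=2):
    """Indices of the titles most similar to title_index, excluding the title itself."""
    order = np.argsort(-sim[title_index])
    return [int(j) for j in order if j != title_index][:top_n]


# Rows are members, columns are titles 0-3 (made-up ratings; 0 = unrated).
ratings = np.array([[5, 4, 0, 1],
                    [4, 5, 1, 0],
                    [0, 1, 5, 4],
                    [1, 0, 4, 5]], dtype=float)
sim = item_cosine_similarity(ratings)
print(most_similar(sim, 0, top_n=1))  # [1]
print(most_similar(sim, 2, top_n=1))  # [3]
```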

In most of the previous contexts — be it in the Top10 row, the genres, or the similars — ranking, the choice of what order to place the items in a row, is critical in providing an effective personalized experience. The goal of our ranking system is to find the best possible ordering of a set of items for a member, within a specific context, in real-time. We decompose ranking into scoring, sorting, and filtering sets of movies for presentation to a member. Our business objective is to maximize member satisfaction and month-to-month subscription retention, which correlates well with maximizing consumption of video content. We therefore optimize our algorithms to give the highest scores to titles that a member is most likely to play and enjoy.
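
The decomposition into scoring, sorting, and filtering can be sketched as a small pipeline. In this Python illustration the `Title` type, its `maturity_rating` field, and the kids-profile filter are hypothetical; the scoring function is passed in, so any model (for example one that estimates how likely a member is to play and enjoy a title) could be plugged in.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Title:
    title_id: str
    maturity_rating: int  # hypothetical metadata field, used only by the filter below


def rank(titles: Iterable[Title],
         score: Callable[[Title], float],
         keep: Callable[[Title], bool],
         limit: int) -> list[Title]:
    """Score, sort, and filter one member's candidate titles for a given context."""
    # 1. Scoring: attach a model score to every candidate.
    scored = [(score(t), t) for t in titles]
    # 2. Sorting: highest score first (the key avoids comparing Title objects on ties).
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # 3. Filtering: drop titles that do not fit the context, then cut to the row length.
    return [t for _, t in scored if keep(t)][:limit]


# Hypothetical candidates and scores for one member viewing a kids profile.
candidates = [Title("a", 7), Title("b", 13), Title("c", 18), Title("d", 7)]
member_scores = {"a": 0.2, "b": 0.9, "c": 0.95, "d": 0.5}
row = rank(candidates,
           score=lambda t: member_scores[t.title_id],
           keep=lambda t: t.maturity_rating <= 13,
           limit=2)
print([t.title_id for t in row])  # ['b', 'd']
```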

Now it is clear that the Netflix Prize objective, accurate prediction of a movie’s rating, is just one of the many components of an effective recommendation system that optimizes our members’ enjoyment. We also need to take into account factors such as context, title popularity, interest, evidence, novelty, diversity, and freshness. Supporting all the different contexts in which we want to make recommendations requires a range of algorithms that are tuned to the needs of those contexts. In the next part of this post, we will talk in more detail about the ranking problem. We will also dive into the data and models that make all the above possible and discuss our approach to innovating in this space.

Netflix Recommendations: Beyond the 5 stars (Part 2)

Netflix Technology Blog

Jun 20, 2012

by Xavier Amatriain and Justin Basilico (Personalization Science and Engineering)

In part one of this blog post, we detailed the different components of Netflix personalization. We also explained how Netflix personalization, and the service as a whole, have changed from the time we announced the Netflix Prize.

[Netflix Recommendations: Beyond the 5 stars (Part 1)](https://medium.com/@Netflix_Techblog/netflix-recommendations-beyond-the-5-stars-part-1-55838468f429)

The $1M Prize delivered a great return on investment for us, not only in algorithmic innovation, but also in brand awareness and attracting stars (no pun intended) to join our team. Predicting movie ratings accurately is just one aspect of our world-class recommender system. In this second part of the blog post, we will give more insight into our broader personalization technology. We will discuss some of our current models, data, and the approaches we follow to lead innovation and research in this space.

Ranking

The goal of recommender systems is to present a number of attractive items for a person to choose from. This is usually accomplished by selecting some items and sorting them in the order of expected enjoyment (or utility). Since the most common way of presenting recommended items is in some form of list, such as the various rows on Netflix, we need an appropriate ranking model that can use a wide variety of information to come up with an optimal ranking of the items for each of our members.

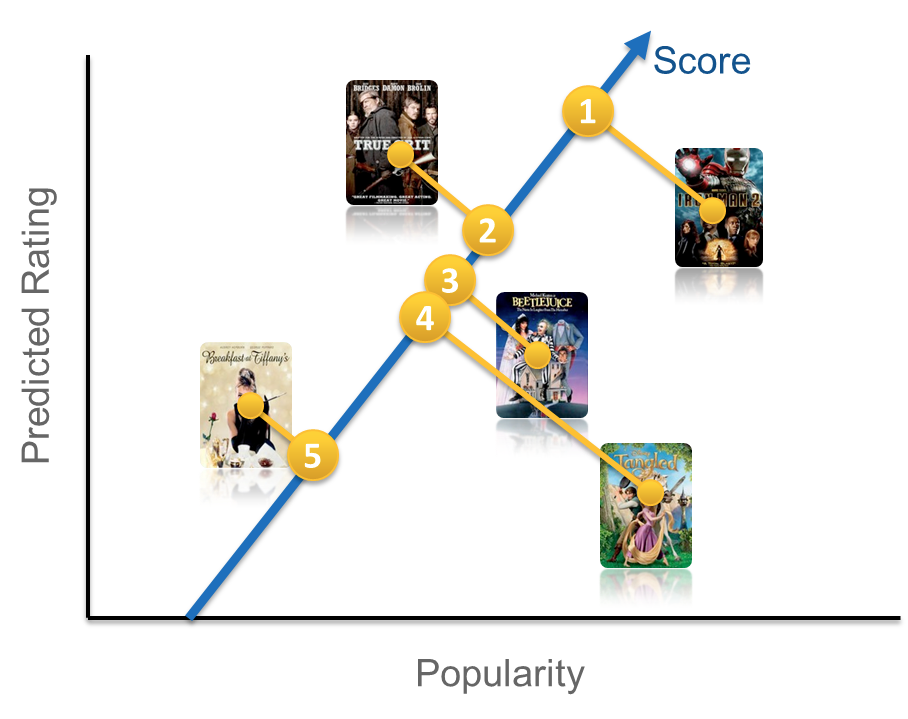

If you are looking for a ranking function that optimizes consumption, an obvious baseline is item popularity. The reason is clear: on average, a member is most likely to watch what most others are watching. However, popularity is the opposite of personalization: it will produce the same ordering of items for every member. Thus, the goal becomes finding a personalized ranking function that is better than item popularity, so we can better satisfy members with varying tastes.
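
A minimal sketch of the popularity baseline, assuming a play log of `(member_id, title_id)` pairs (a hypothetical format). It illustrates the point made above: the ranking is computed once from aggregate counts, so every member receives the same ordering.

```python
from collections import Counter


def popularity_ranking(play_log):
    """Rank titles by total plays; play_log is an iterable of (member_id, title_id)."""
    counts = Counter(title for _, title in play_log)
    # Most-played first; ties broken by title id so the order is deterministic.
    return sorted(counts, key=lambda title: (-counts[title], title))


def recommend_for(member_id, ranking, k=3):
    """Top-k titles for a member. member_id is ignored: popularity is not personalized."""
    return ranking[:k]


log = [("m1", "A"), ("m2", "A"), ("m3", "A"),
       ("m1", "B"), ("m2", "B"), ("m3", "C")]
ranking = popularity_ranking(log)
print(ranking)                                                        # ['A', 'B', 'C']
print(recommend_for("m1", ranking) == recommend_for("m3", ranking))  # True
```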

Recall that our goal is to recommend the titles that each member is most likely to play and enjoy. One obvious way to approach this is to use the member’s predicted rating of each item as an adjunct to item popularity. Using predicted ratings on their own as a ranking function can lead to items that are too niche or unfamiliar being recommended, and can exclude items that the member would want to watch even though they may not rate them highly. To compensate for this, rather than using either popularity or predicted rating on their own, we would like to produce rankings that balance both of these aspects. At this point, we are ready to build a ranking prediction model using these two features.

There are many ways one could construct a ranking function, ranging from simple scoring methods, to pairwise preferences, to optimization over the entire ranking. For the purposes of illustration, let us start with a very simple scoring approach by choosing our ranking function to be a linear combination of popularity and predicted rating. This gives an equation of the form

$$f_{rank}(u,v) = w_1 p(v) + w_2 r(u,v) + b$$

where $u$ = user, $v$ = video item, $p$ = popularity and $r$ = predicted rating. This equation defines a two-dimensional space like the one depicted below.
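
The linear scoring function can be written directly in code. In this sketch, popularity is assumed to be normalized to [0, 1] and predicted ratings lie on the 1 to 5 star scale; the candidate titles and weights are made up. Changing `w1` and `w2` reorders the list, while adding a constant `b` shifts every score equally and leaves the order unchanged.

```python
def f_rank(popularity, predicted_rating, w1, w2, b=0.0):
    """Linear ranking score f_rank(u, v) = w1 * p(v) + w2 * r(u, v) + b."""
    return w1 * popularity + w2 * predicted_rating + b


def rank_videos(candidates, w1, w2, b=0.0):
    """Sort video ids for one user by descending f_rank score.

    candidates: dict video_id -> (popularity p(v), predicted rating r(u, v)).
    """
    return sorted(candidates,
                  key=lambda v: f_rank(*candidates[v], w1, w2, b),
                  reverse=True)


# Made-up candidates: popularity normalized to [0, 1], ratings on a 1-5 scale.
candidates = {"blockbuster": (0.9, 3.0),
              "niche_gem": (0.1, 4.8),
              "solid_pick": (0.6, 4.2)}
print(rank_videos(candidates, w1=1.0, w2=0.0))  # ['blockbuster', 'solid_pick', 'niche_gem']
print(rank_videos(candidates, w1=0.0, w2=1.0))  # ['niche_gem', 'solid_pick', 'blockbuster']
print(rank_videos(candidates, w1=2.0, w2=1.0))  # ['solid_pick', 'niche_gem', 'blockbuster']
```

Pure popularity surfaces the blockbuster and pure predicted rating surfaces the niche title; the mixed weighting puts the title that is both reasonably popular and well liked on top, which is the balance the text describes.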

Once we have such a function, we can pass a set of videos through our function and sort them in descending order according to the score. You might be wondering how we can set the weights w1 and w2 in our model (the bias b is constant and thus ends up not affecting the final ordering). In other words, in our simple two-dimensional model, how do we determine whether popularity is more or less important than predicted rating? There are at least two possible approaches to this. You could sa