Putting an ML model into production using Feast and Kubeflow on Azure

Jayesh · Posted on March 31, 2021 • Updated on April 8, 2021

#machinelearning #kubernetes #cloudnative #azure

Introduction

Many teams are adopting machine learning (ML) in their products so they can do more and deliver value. When we think of implementing an ML solution, two parts come to mind:

- Model development.

- Model deployment and CI/CD.

The first is the job of a data scientist, who researches which model architecture to use, which optimization algorithms work best, and the other questions involved in building a working model.

Once the model responds satisfactorily to local inputs, it is time to put it into production, where it can serve the public and client applications.

The requirements from such a production system include, but are not limited to, the following:


- The ability to train the model at scale, across different compute resources as required.

- Workflow automation covering data preprocessing, training, and model serving.

- An easy-to-configure model-serving system that works the same way for all major ML frameworks, such as TensorFlow.

- Platform agnosticism: the system should run as well on your local setup as on any cloud provider.

- A user-friendly, intuitive interface that lets data scientists with no knowledge of Kubernetes leverage its power.

Kubeflow is an open-source machine learning toolkit for cloud-native applications. It covers everything discussed above and gives anyone an easy way to get started with deploying ML in production.

The Kubeflow Central Dashboard

In addition, we need common storage for the features our model uses for training and serving. This storage should allow:

- Serving of features for both training and inference.


- Data consistency and accurate merging of data from multiple sources.


- Prevention of errors such as data leakage.

Many teams working on machine learning have their own pipelines for fetching data, creating features, and storing and serving them. In this article, however, I'll introduce and work with Feast, an open-source feature store for ML.

Image from tecton.ai

I'll link an excellent article here (What is a Feature Store?) that explains in detail what feature stores are, why they are important, and how Feast fits into this picture.

We'll talk about Feast in the next article in this series.


Contents

- Why do we need Kubeflow?

- Prerequisites

- Setup

- Feast for feature store

- Kubeflow pipelines

- Pipeline steps

- Run the pipeline

- Conclusion

Why do we need Kubeflow?

Firstly, most people developing machine learning systems are not experts in distributed systems. Deploying a model efficiently requires a fair amount of experience with GitOps, Kubernetes, containerization, and networking, because managing these services is complex even for relatively simple solutions. As a result, a lot of time is wasted preparing the environment and tweaking configurations before model training can begin.

Secondly, building a highly customized, "hacked-together" solution means that any plan to change the environment or orchestration provider will require a sizeable rewrite of code and infrastructure configuration.

Kubeflow is a composable, portable solution that runs on Kubernetes and makes using your ML stack easy and extensible.


Prerequisites

Before we begin, I recommend getting familiar with the following to better understand the code and the workflow.

- Docker and Kubernetes. I have built a hands-on, step-by-step course that covers both; find it here, along with the associated YouTube playlist here.

-

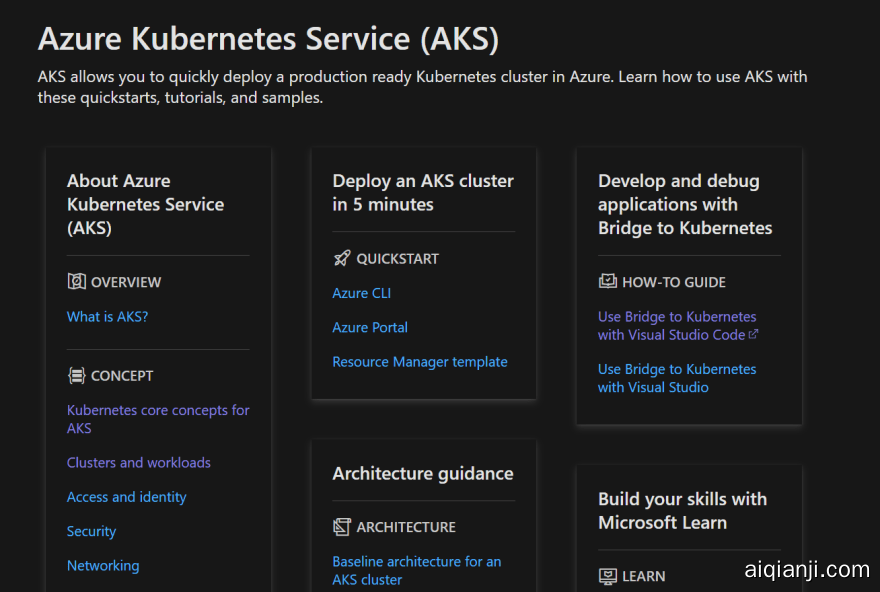

Azure Kubernetes Service. We'll be using it as the platform of choice for deploying our solution. You can check out this seven-part tutorial on Microsoft Learn (an awesome site to learn new tech really fast!) that will help you get started with using AKS for deploying your application.

-

Azure Kubernetes服务。我们将使用它作为部署解决方案的首选平台。你可以在微软学习网站上查看这个七部分的教程(这是一个学习新技术非常快的很棒的网站!)这将帮助您开始使用 AKS 部署您的应用程序。这个页面有其他的快速入门和有用的教程。

![image]() This page has other quickstarts and helpful tutorials.

This page has other quickstarts and helpful tutorials.

Although we're using AKS for this project, you can take the same code and deploy it on any provider, or locally using tools such as Minikube or kind.

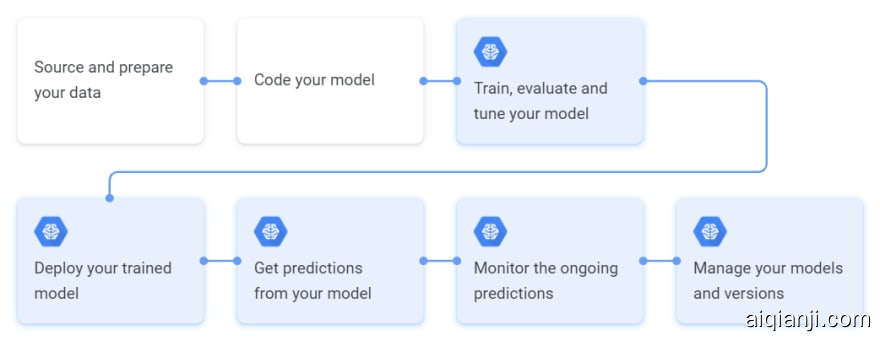

- A typical machine learning workflow and the steps involved. You don't need to know how to build a model, but you should have at least an idea of what the process looks like.

![ML workflow]()

This is an example workflow, from this article by Google Cloud.

- Some knowledge of Kubeflow and its components. If you're a beginner, you can watch the talk I delivered on Kubeflow at Azure Kubernetes Day, starting at around the 4.55-hour mark.

Setup

- Create an AKS cluster and deploy Kubeflow on it.

![image]()

![image]()

The images above show how you can create an AKS cluster from the Azure portal.


This tutorial will walk you through all the steps required, from creating a new cluster (if you haven't already) to finally having a running Kubeflow deployment.


- Once Kubeflow is installed, you can reach the Kubeflow Central Dashboard either by port-forwarding with the following command (the dashboard is then available at http://localhost:8080):

```shell
kubectl port-forward svc/istio-ingressgateway -n istio-system 8080:80
```

or by getting the address for the Istio ingress resource, if Kubeflow has configured one:


```shell
kubectl get ingress -n istio-system
```

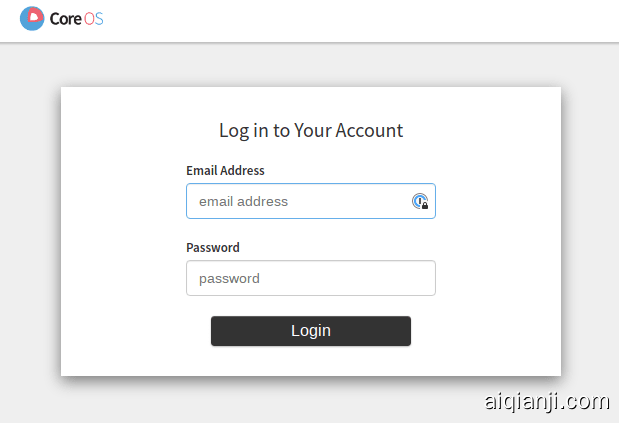

Open the address shown in the output of the command above to reach the dashboard. The image shows what the dashboard looks like on start-up.

The default credentials are the email admin@kubeflow.org and the password 12341234. You can change them by following the docs here.

- Install Feast on your cluster. Follow the guide here.


- Finally, clone this repository to get all the code for the next steps.


```shell
git clone https://www.aiqianji.com/openoker/production_ml.git
```

Code to take an ML model into production using Feast and Kubeflow

Feast for feature store

If you've read the Feast article linked above, you should have some idea of what Feast is used for and why it is important.

For readability, we won't go into the details of how Feast is implemented here; that is reserved for the next article.

The code below fetches features from local files rather than from Feast. In the next article, we'll see how to plug Feast into the solution without affecting most of the code.

Kubeflow Pipelines

Kubeflow Pipelines is a component of Kubeflow that provides a platform for building and deploying ML workflows, called pipelines. Pipelines are built from self-contained sets of code called pipeline components. They are reusable and help you perform a fixed set of tasks together and manage them through the Kubeflow Pipelines UI.


Kubeflow pipelines offer the following features:


- A user interface (UI) for managing and tracking experiments, jobs, and runs.


- An engine for scheduling multi-step ML workflows.


- An SDK for defining and manipulating pipelines and components.


- Integration with Jupyter Notebooks, so you can run pipelines directly from code.

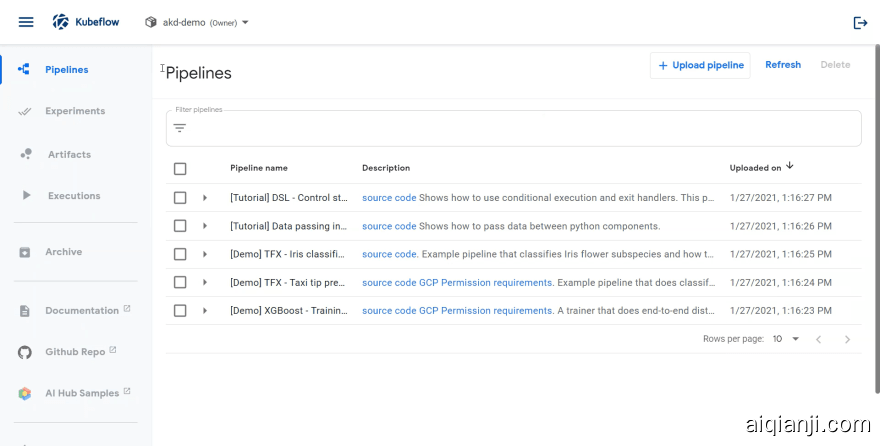

This is what the Kubeflow Pipelines homepage looks like. It contains some sample pipelines that you can run while watching each step progress in a rich UI.

You can also create a new pipeline with your own code, using the following screen.


You can find more hands-on footage in the talk I delivered on Kubeflow at Azure Kubernetes Day, which I'll link again here.

How to build a pipeline

The following image summarizes the steps I'll take in the next section to create a pipeline.

- The first step is to write your code into a file and then build a Docker image that contains the file.

- Once an image is ready, you can use it in your pipeline code to create a step, using something called `ContainerOp`. It is part of the Kubeflow Pipelines Python SDK.

`ContainerOp` is used as a pipeline step to define a container operation. In other words, it describes which image to run, with which command and arguments, as a step in the pipeline.

- The code that helps you define and interact with Kubeflow pipelines and components is the `kfp.dsl` package. DSL stands for domain-specific language; it lets you write the pipeline in Python, which is then converted into an Argo workflow configuration behind the scenes.

Argo workflows use containers as steps and have a YAML definition for each step; the DSL package transforms your Python code into the definitions that the Argo backend uses to run a workflow.
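To make the DSL-to-Argo idea more concrete, here is a toy sketch written for this article. It is not the kfp compiler and does not reproduce its real output; it only turns a list of container steps into a dictionary shaped like an Argo Workflow manifest. The manifest field names (apiVersion, kind, spec.entrypoint, templates, container, dag.tasks, dependencies) follow the Argo Workflow spec, while the `Step` class, the `compile_workflow` function, the image names, and the paths are hypothetical.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Step:
    """Hypothetical stand-in for a ContainerOp: one container run as a pipeline step."""

    name: str
    image: str
    command: List[str]
    arguments: List[str]
    after: List[str] = field(default_factory=list)  # names of steps this one waits for


def compile_workflow(pipeline_name: str, steps: List[Step]) -> Dict:
    """Toy compiler: build an Argo-Workflow-shaped dict from a list of steps."""
    # One container template per step: which image to run, with which command/args.
    templates = [
        {
            "name": s.name,
            "container": {"image": s.image, "command": s.command, "args": s.arguments},
        }
        for s in steps
    ]
    # The entrypoint is a DAG template whose tasks point at the container templates.
    tasks = []
    for s in steps:
        task = {"name": s.name, "template": s.name}
        if s.after:
            task["dependencies"] = list(s.after)
        tasks.append(task)
    templates.insert(0, {"name": pipeline_name, "dag": {"tasks": tasks}})
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": pipeline_name + "-"},
        "spec": {"entrypoint": pipeline_name, "templates": templates},
    }


if __name__ == "__main__":
    fetch = Step("fetch", "example/fetch:dev", ["python3"],
                 ["/scripts/fetch_from_source.py", "--base_path", "/mnt/data"])
    train = Step("training", "example/train:dev", ["python3"],
                 ["/scripts/code/run.py", "--base_path", "/mnt/data"], after=["fetch"])
    print(json.dumps(compile_workflow("demo-pipeline", [fetch, train]), indent=2))
```

Printing the result as JSON is enough for a quick look, since JSON is also valid YAML. The real kfp compiler emits considerably more than this (for example, metadata and annotations that Kubeflow relies on), so treat the sketch only as a picture of the overall shape.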

We'll use all of these concepts in the next section when we write our pipeline steps. The code can be found in this GitHub repository: production_ml.

Pipeline steps

The pipeline we'll build consists of four steps, each performing an independent task:

- Fetch: get data from the Feast feature store into a persistent volume.

- Train: use the training data to train the RL model and store the model in the persistent volume.

- Export: move the model to an S3 bucket.

- Serve: deploy the model for inference using KFServing.
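These four steps form a simple chain, and the pipeline engine starts each one only after the steps it depends on have finished. As a rough illustration of that scheduling idea (not Kubeflow's or Argo's actual scheduler), Python's standard `graphlib` module (Python 3.9+) can derive the run order from declared dependencies. The `dependencies` dictionary and the `run_order` helper below are hypothetical and only mirror the step names above.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical wiring of the four steps: each step maps to the steps it must wait for.
dependencies: Dict[str, Set[str]] = {
    "fetch": set(),
    "training": {"fetch"},
    "export": {"training"},
    "serve": {"export"},
}


def run_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Return an order in which every step runs after all of its dependencies."""
    return list(TopologicalSorter(deps).static_order())


print(run_order(dependencies))  # ['fetch', 'training', 'export', 'serve']
```

A linear chain has exactly one valid order. A real engine can also run independent branches in parallel; `graphlib` supports that pattern through `TopologicalSorter.get_ready()` and `done()`, which hand out every step whose dependencies are complete.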

Fetch

The fetch step acts as a bridge between the feature store (or any other source of training data) and our pipeline code. When designing the code for this step, we should avoid any dependency on later steps. This ensures that we can switch feature-store implementations without affecting the code of the rest of the pipeline.

During development, I used a .csv file as the source of training data. Later, when we switch to Feast, we won't have to change our application code.
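One way to keep the fetch step decoupled from where the features come from is to hide the source behind a small interface. The sketch below illustrates that idea under assumptions; it is not the code in the repository. `FeatureSource`, `CsvFeatureSource`, `FeastFeatureSource`, and `write_training_data` are hypothetical names, and the Feast variant is a deliberate stub because the Feast integration is covered in the next article.

```python
import csv
from pathlib import Path
from typing import Dict, List, Protocol


class FeatureSource(Protocol):
    """Anything that can return training rows as a list of column -> value dicts."""

    def fetch(self) -> List[Dict[str, str]]: ...


class CsvFeatureSource:
    """Development source: reads rows from a local CSV file such as userinput.csv."""

    def __init__(self, path: Path) -> None:
        self.path = Path(path)

    def fetch(self) -> List[Dict[str, str]]:
        with self.path.open(newline="") as f:
            return list(csv.DictReader(f))


class FeastFeatureSource:
    """Hypothetical placeholder for the production source; no Feast calls are made here."""

    def fetch(self) -> List[Dict[str, str]]:
        raise NotImplementedError("Feast integration is covered in the next article")


def write_training_data(source: FeatureSource, target: Path) -> int:
    """Pipeline-facing code: depends only on the interface, not on the concrete source."""
    rows = source.fetch()
    target = Path(target)
    target.parent.mkdir(parents=True, exist_ok=True)
    with target.open("w", newline="") as f:
        if rows:
            writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
            writer.writeheader()
            writer.writerows(rows)
    return len(rows)
```

With this shape, moving from CSV to Feast means changing which source object is constructed, while `write_training_data` and the downstream steps stay the same.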

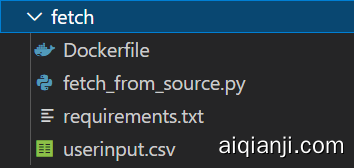

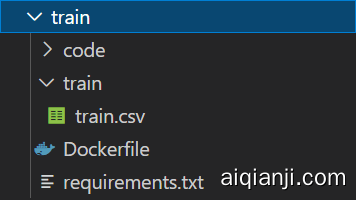

The directory structure looks like the following:


- `fetch_from_source.py`: the Python code that talks to the source of training data, downloads the data, processes it, and stores it in a directory inside a persistent volume.

- `userinput.csv` (dev environment only): the file that serves as the input data. In production it becomes redundant, since the input will be sourced through Feast. The default values of the arguments in the code point to this location.

- `Dockerfile`: the image definition for this step, which copies the Python code into the container, installs the dependencies, and runs the file.

Writing the pipeline step

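```python
from kfp import dsl  # Kubeflow Pipelines SDK (v1 API, which provides ContainerOp)

# fetch data
# operations, persistent_volume_path, training_folder and training_dataset
# are defined elsewhere in the pipeline code
operations['fetch'] = dsl.ContainerOp(
    name='fetch',
    image='insert image name:tag',
    command=['python3'],
    arguments=[
        '/scripts/fetch_from_source.py',
        '--base_path', persistent_volume_path,
        '--data', training_folder,
        '--target', training_dataset,
    ]
)
```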

A few things to note:


- The step is defined as a ContainerOp which allows you to specify an image for the pipeline step which is run as a container.


- The file `fetch_from_source.py` will be run when this step is executed.

- The arguments here include:

  - `base_path`: the path to the volume mount.

  - `data`: the directory where the input training data is to be stored.

  - `target`: the filename under which the good input data is stored.

How does it work?

The code in `fetch_from_source.py` first parses the arguments passed to it at run time. It then defines the paths for retrieving and storing the input data.

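```python
from pathlib import Path

# args comes from argparse; every path is resolved relative to the mounted volume
base_path = Path(args.base_path).resolve(strict=False)
data_path = base_path.joinpath(args.data).resolve(strict=False)
target_path = Path(data_path).resolve(strict=False).joinpath(args.target)
```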

Because `target_path` is on the mounted volume, the training data is visible to the subsequent pipeline steps.

Next, the .csv file is read into a pandas DataFrame and, after some processing, stored at `target_path` using the following line:

```python
df.to_csv(target_path, index=False)
```
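Putting these pieces together, a minimal, hypothetical version of `fetch_from_source.py` could look like the following. The flag names `--base_path`, `--data`, and `--target` match the `ContainerOp` above; the `--source` flag, the default values, and the processing step (dropping rows with missing values) are assumptions for illustration, since the repository's actual processing isn't shown here. The article's code uses pandas (`df.to_csv(target_path, index=False)`); this sketch uses the standard `csv` module so it runs without extra dependencies.

```python
import argparse
import csv
from pathlib import Path
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Fetch training data into the shared volume")
    # Hypothetical dev-time input file; in production the data would come from Feast.
    parser.add_argument("--source", default="userinput.csv", help="input CSV file (dev only)")
    parser.add_argument("--base_path", default=".", help="path to the volume mount")
    parser.add_argument("--data", default="train", help="directory for the training data")
    parser.add_argument("--target", default="train.csv", help="file name for the cleaned data")
    return parser.parse_args(argv)


def main(argv: Optional[List[str]] = None) -> Path:
    args = parse_args(argv)
    # Same path logic as the snippet above: everything lives under the mounted volume.
    base_path = Path(args.base_path).resolve(strict=False)
    data_path = base_path.joinpath(args.data).resolve(strict=False)
    target_path = data_path.joinpath(args.target)
    data_path.mkdir(parents=True, exist_ok=True)

    with open(args.source, newline="") as f:
        reader = csv.DictReader(f)
        fieldnames = list(reader.fieldnames or [])
        # Placeholder processing: keep only rows in which every column has a value.
        rows = [r for r in reader if all((r.get(c) or "").strip() for c in fieldnames)]

    with target_path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return target_path
```

In the container, the script would end with the usual `if __name__ == "__main__": main()` guard, so the `python3 /scripts/fetch_from_source.py --base_path ...` command from the pipeline step runs it directly.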

Train

The training step contains the code for our ML model. Typically, several files host different parts of the solution, and one file (call it the critical file) calls all the other functions. Therefore, the first step in containerizing your ML code is to identify the dependencies between the components of your code.

In our case, the file central to training is `run.py`. It was created from the `run.ipynb` notebook because a script file is straightforward to execute inside a container from the command line.

The directory structure looks like the following:

- `code`: the directory hosting all your ML application files.

- `train` (dev environment only): contains the file that serves as the input data. In production it becomes redundant, since the input will be sourced from the persistent volume. The default values of the arguments in the code point to this location.

- `Dockerfile`: the image definition for this step, which copies all the Python files into the container, installs the dependencies, and runs the critical file.
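The training step follows the same contract as the fetch step: it reads its input from the data folder on the shared volume and writes the model to an outputs folder, where the export step can pick it up. Here is a minimal, hypothetical sketch of such an entry point. The flag names `--base_path`, `--data`, and `--outputs` match the pipeline step that follows, but the "model" is a trivial placeholder (per-column means saved to a hypothetical `model.json`), not the RL model from the repository.

```python
import argparse
import csv
import json
from pathlib import Path
from statistics import mean
from typing import Dict, List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Train a model from data on the shared volume")
    parser.add_argument("--base_path", default=".", help="path to the volume mount")
    parser.add_argument("--data", default="train", help="folder holding the training CSV files")
    parser.add_argument("--outputs", default="model", help="folder to write the model into")
    return parser.parse_args(argv)


def train(rows: List[Dict[str, str]]) -> Dict[str, float]:
    """Placeholder 'training': the mean of every numeric column. Swap in the real model here."""
    model: Dict[str, float] = {}
    columns = list(rows[0].keys()) if rows else []
    for col in columns:
        try:
            model[col] = mean(float(r[col]) for r in rows)
        except (KeyError, TypeError, ValueError):
            continue  # skip columns that are missing or not numeric
    return model


def main(argv: Optional[List[str]] = None) -> Path:
    args = parse_args(argv)
    base_path = Path(args.base_path).resolve(strict=False)
    data_path = base_path.joinpath(args.data)       # written by the fetch step
    output_path = base_path.joinpath(args.outputs)  # read later by the export step

    rows: List[Dict[str, str]] = []
    for csv_file in sorted(data_path.glob("*.csv")):
        with csv_file.open(newline="") as f:
            rows.extend(csv.DictReader(f))

    model = train(rows)
    output_path.mkdir(parents=True, exist_ok=True)
    model_file = output_path.joinpath("model.json")
    model_file.write_text(json.dumps(model))
    return model_file
```

Keeping all inputs and outputs relative to `--base_path` is what lets the same script run locally during development and against the persistent volume inside the pipeline.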

Writing the pipeline step

```python
# train
operations['training'] = dsl.ContainerOp(
    name='training',
    image='insert image name:tag',
    command=['python3'],
    arguments=[
        '/scripts/code/run.py',
        '--base_path', persistent_volume_path,
        '--data', training_folder,
        '--outputs', model_folder,
    ]
)
```

A few things to note:


- The step is defined as a ContainerOp which allows you to specify an image for the pipeline step which is run as a container.


- The file `run.py` will be run when this step i