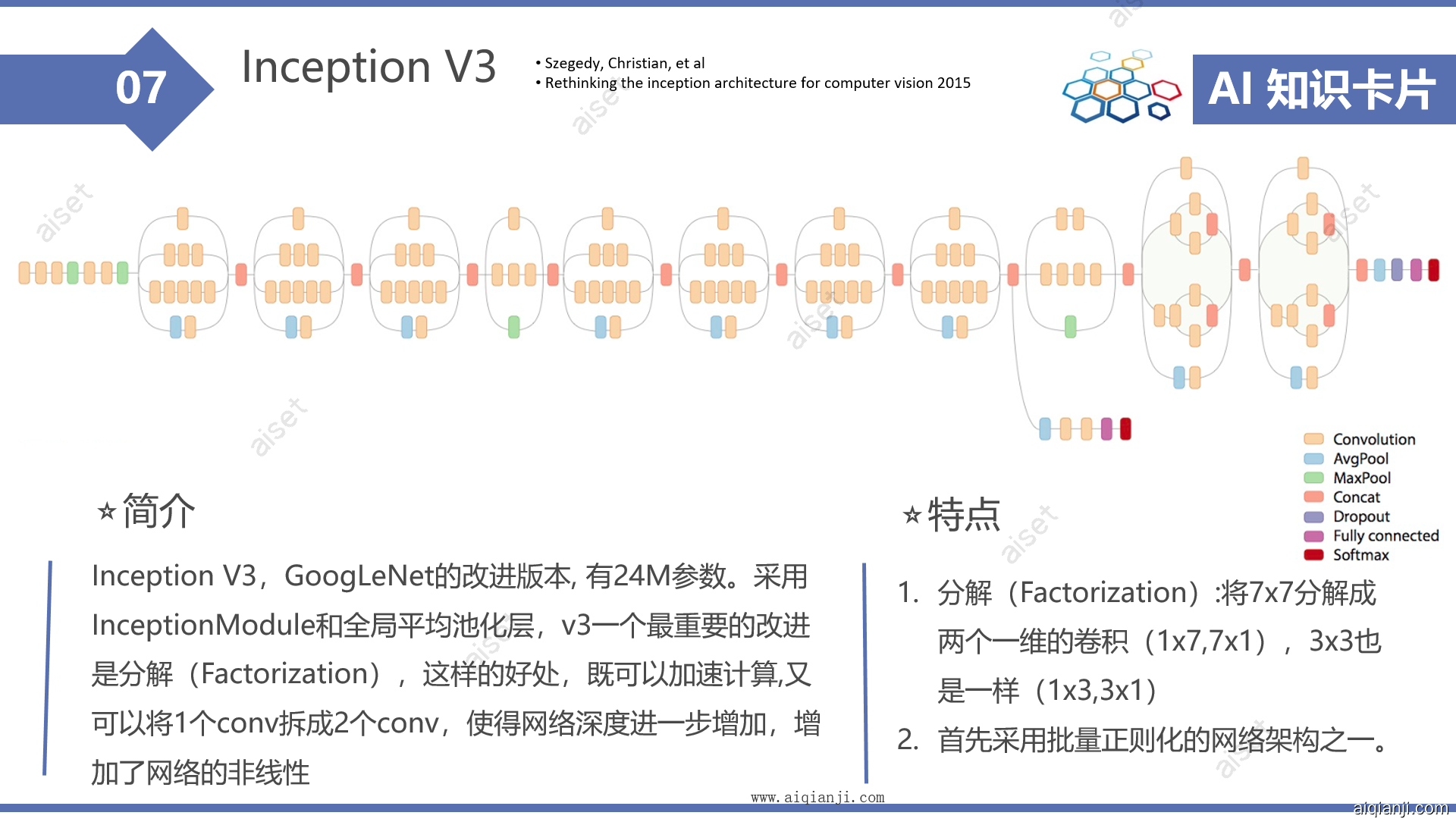

Introduction

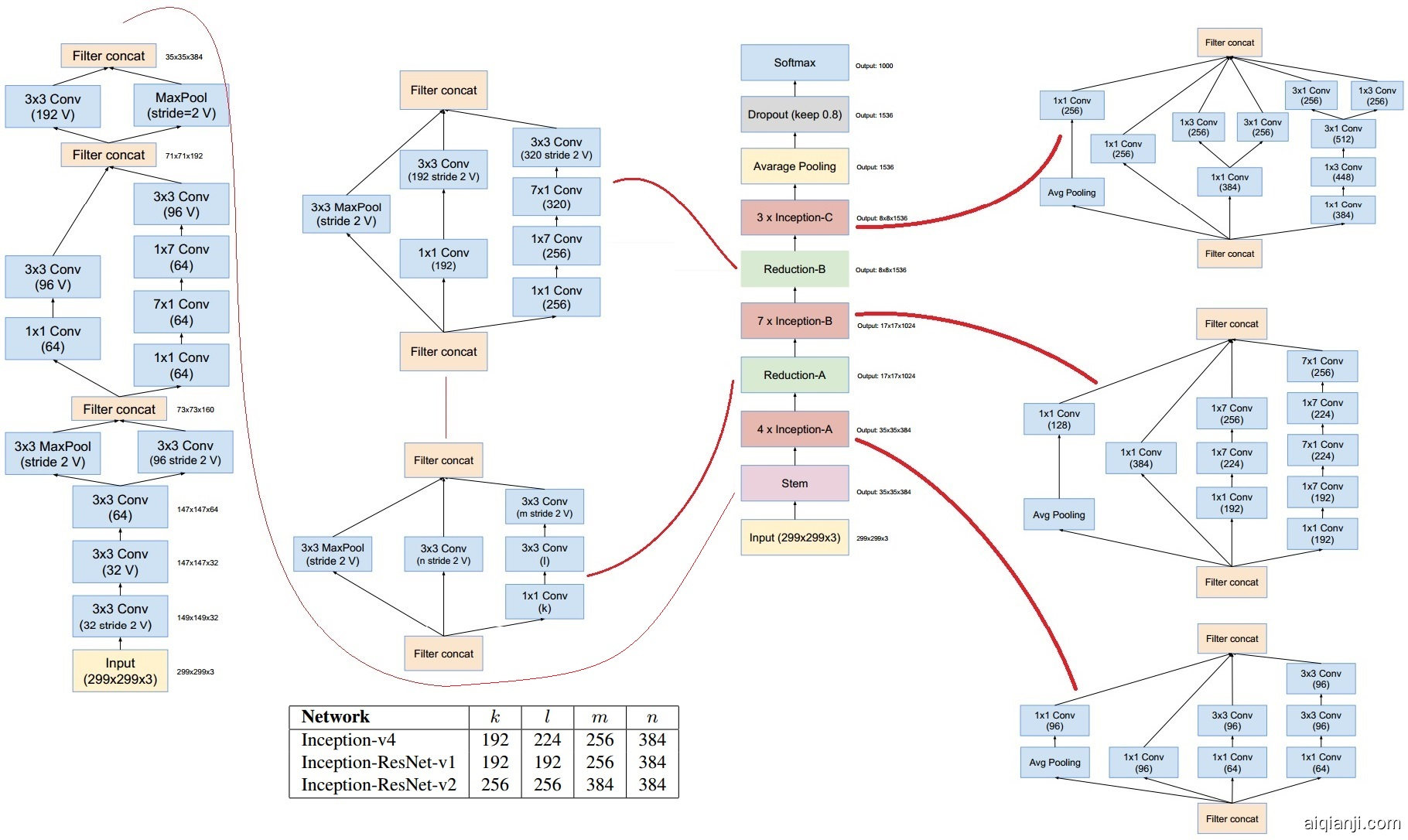

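Inception V3 is an improved version of GoogLeNet that uses Inception modules and a global average pooling layer. Its most important change is factorization: a 7x7 convolution is split into two one-dimensional convolutions (1x7 followed by 7x1), and a 3x3 convolution is split the same way (1x3 followed by 3x1). This speeds up computation (the saved compute can be spent on deepening the network), and replacing one conv with two increases the depth and the nonlinearity of the network. The asymmetric kernels also increase feature diversity. Also worth noting: the network input grows from 224x224 to 299x299, and the 35x35, 17x17, and 8x8 modules are designed more carefully. The ILSVRC 2012 top-5 error rate drops to 3.58%, a figure the paper reports for an ensemble of four models with multi-crop evaluation.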

Basic information

Paper: "Rethinking the Inception Architecture for Computer Vision"

Authors: Szegedy, Christian, et al.

PDF: http://arxiv.org/abs/1512.00567

Translation: http://www.aiqianji.com/blog/article/30

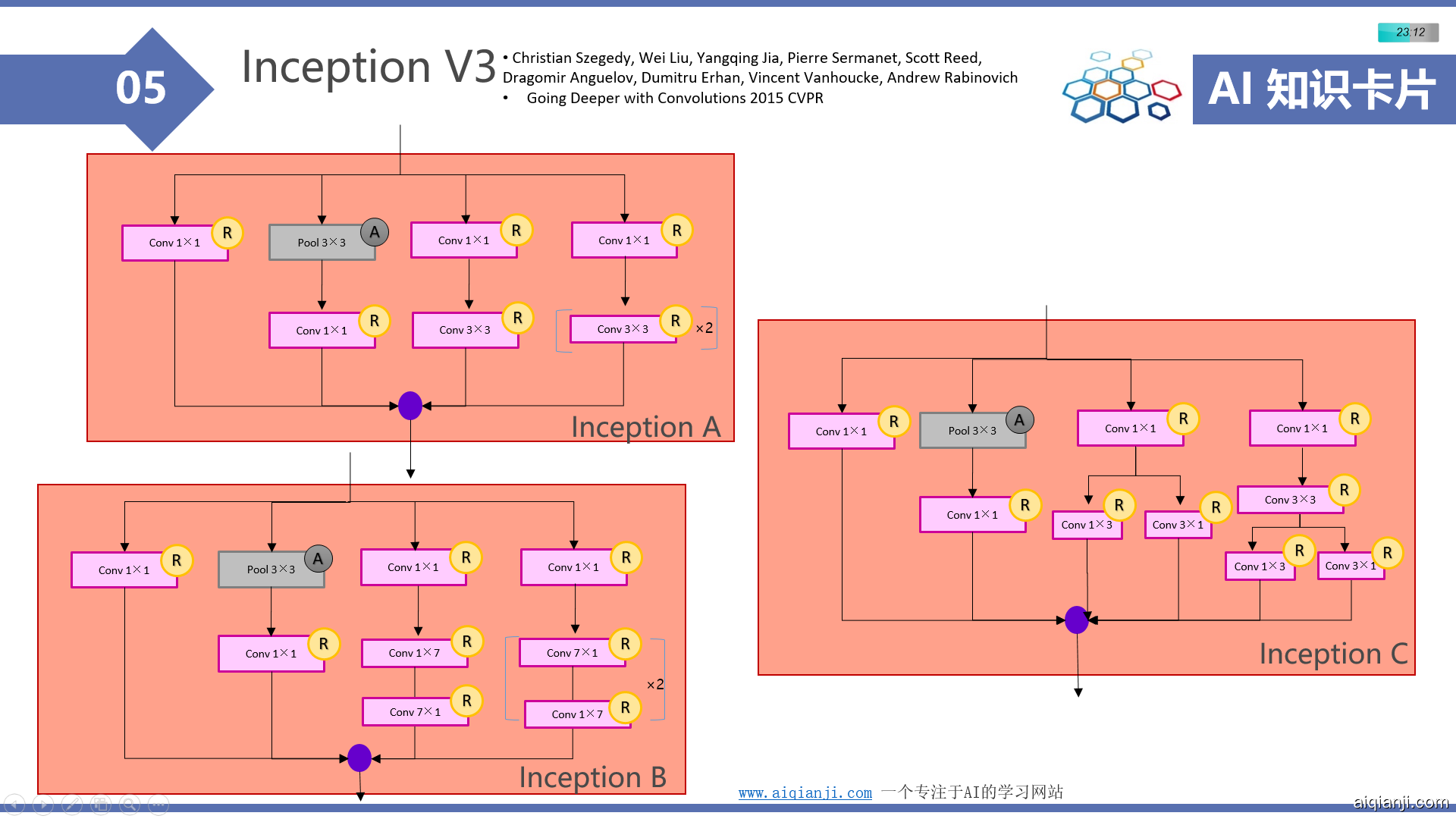

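Key innovations:

1. Factorization: a 7x7 convolution is split into two one-dimensional convolutions (1x7, 7x1), and a 3x3 convolution likewise (1x3, 3x1)

a. Saves a large number of parameters and speeds up computation;

b. Adds convolutional layers, which improves the model's fitting capacity;

c. The asymmetric kernels increase feature diversity.

2. One of the first network architectures to adopt batch normalization.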
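Point (a) can be made concrete by counting weights. The following is a minimal sketch, not code from the paper: the helper names `conv_params` and `factorized_params` are hypothetical, biases are ignored, the intermediate channel count is assumed equal to the output channel count, and the width of 192 channels is only an illustrative choice.

```python
def conv_params(k_h, k_w, c_in, c_out):
    """Weight count of a k_h x k_w convolution (bias ignored)."""
    return k_h * k_w * c_in * c_out


def factorized_params(k, c_in, c_out, c_mid=None):
    """Weight count of a 1xk convolution followed by a kx1 convolution.

    c_mid is the channel count between the two convolutions; by assumption
    it defaults to c_out.
    """
    c_mid = c_out if c_mid is None else c_mid
    return conv_params(1, k, c_in, c_mid) + conv_params(k, 1, c_mid, c_out)


def saving(k, channels):
    """Fraction of weights saved by factorizing a kxk convolution."""
    full = conv_params(k, k, channels, channels)
    fact = factorized_params(k, channels, channels)
    return 1 - fact / full


if __name__ == "__main__":
    channels = 192  # illustrative width, not a value from the paper
    for k in (3, 7):
        full = conv_params(k, k, channels, channels)
        fact = factorized_params(k, channels, channels)
        print(f"{k}x{k}: {full} weights, factorized: {fact}, saved: {saving(k, channels):.1%}")
```

Under these assumptions the 3x3 case saves about 33% of the weights and the 7x7 case about 71%. When the spatial size is preserved, the multiply-add count per output position shrinks by the same ratio.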

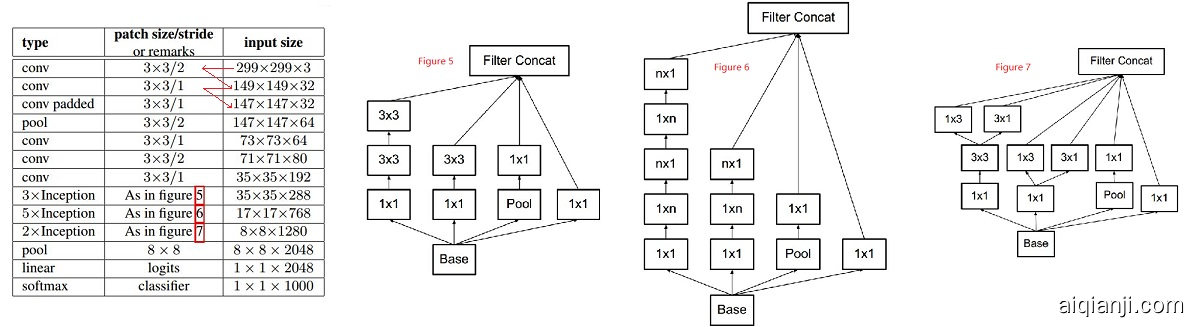

Network architecture

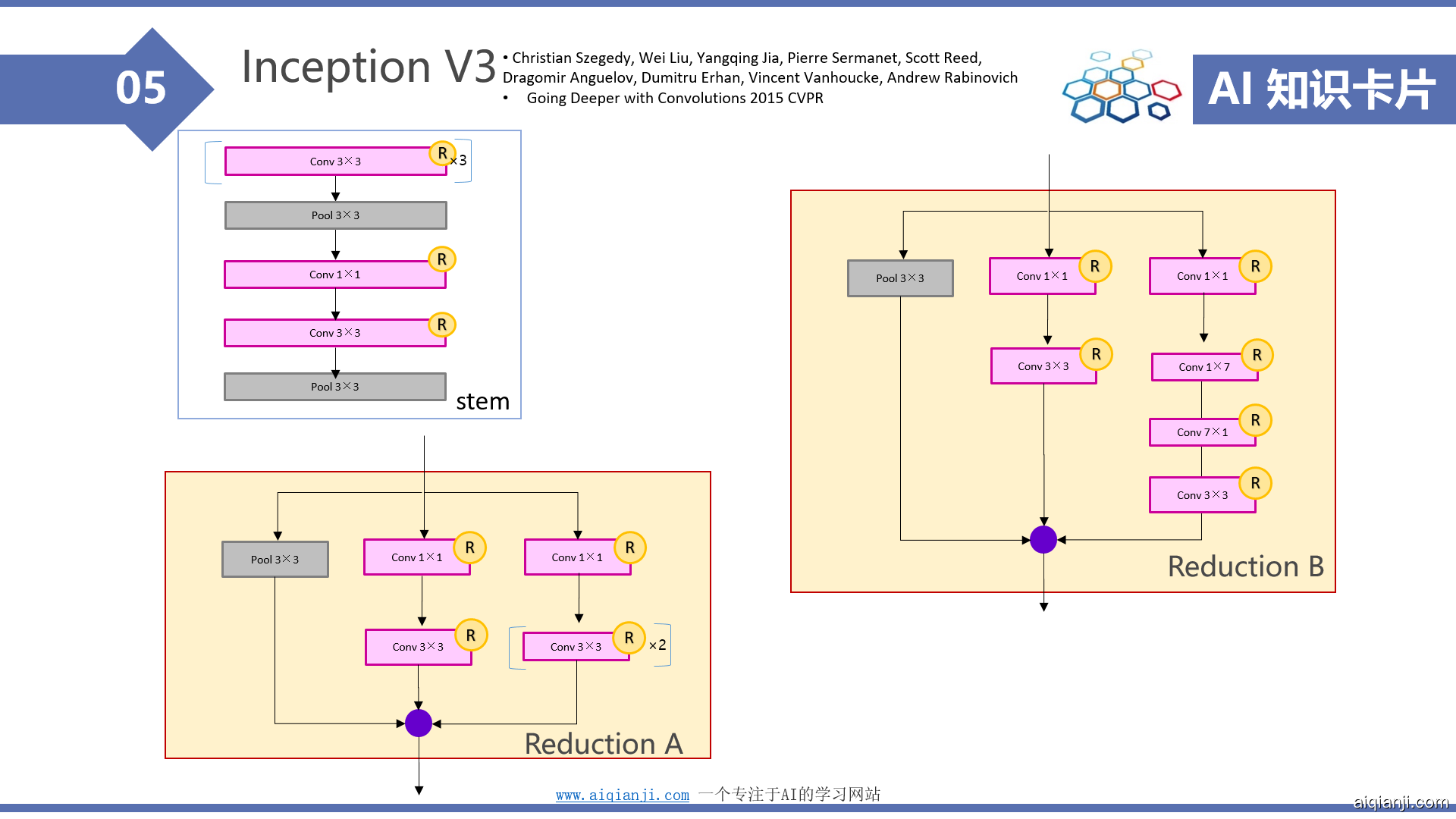

The original Inception V3 architecture is shown in the figure

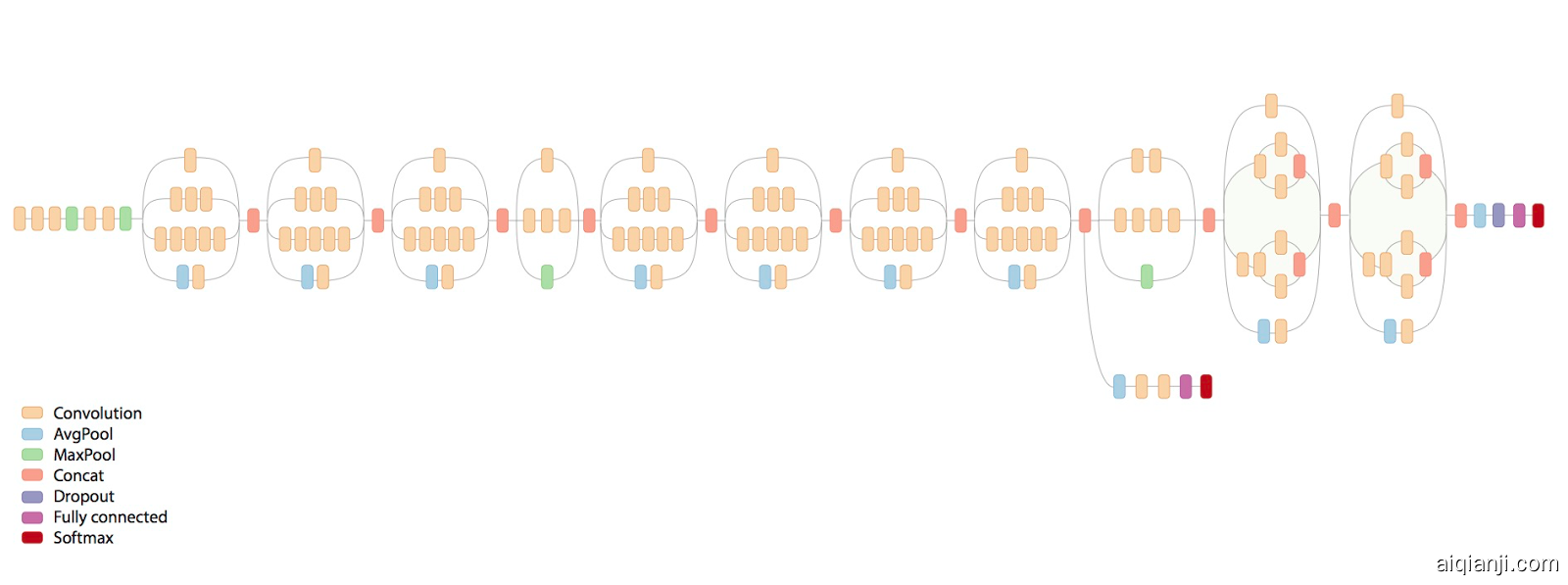

Abstracted diagram (from the Google blog)

Factorization, as illustrated:

Abstracted diagram:
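The factorization idea can also be checked numerically. The sketch below is an illustration under simplifying assumptions, not the network's actual layers: it uses plain nested lists, stride 1, no padding, and a hypothetical helper `conv2d_valid`. It shows that a 1x3 filter followed by a 3x1 filter covers the same 3x3 window as a single 3x3 filter, and gives the identical result when that 3x3 filter is the outer product of the two. In Inception V3 the two convolutions are learned independently and are separated by a nonlinearity, so the pair is not equivalent to an arbitrary 3x3 convolution.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation on nested lists (stride 1, no padding)."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(k_h)
                for b in range(k_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


row = [[1, 2, 1]]        # 1x3 kernel
col = [[1], [0], [-1]]   # 3x1 kernel

# The single 3x3 kernel that the pair composes to: outer product of col and row.
full = [[c[0] * r for r in row[0]] for c in col]

# A small deterministic 6x6 integer test image.
image = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(6)]

two_step = conv2d_valid(conv2d_valid(image, row), col)  # 1x3, then 3x1
one_step = conv2d_valid(image, full)                    # single 3x3

print(two_step == one_step)
```

Both paths reduce the 6x6 input to a 4x4 output, which is what a 3x3 receptive field without padding implies.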

Source code

TensorFlow source: https://github.com/tensorflow/models/tree/master/research/slim/nets/inception_v3.py

PyTorch: https://github.com/pytorch/vision/blob/master/torchvision/models/inception.py

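    # Excerpt from Inception3.__init__ in torchvision/models/inception.py.
    # Assumes `import torch.nn as nn` and `from typing import Optional`.
    # conv_block, inception_a ... inception_e and inception_aux are the block
    # classes (by default BasicConv2d, InceptionA ... InceptionE, InceptionAux).
    # Shape comments are channels x height x width for a 3 x 299 x 299 input.
    self.aux_logits = aux_logits
    self.transform_input = transform_input
    # Stem: plain convolutions and max pooling, 3 x 299 x 299 -> 192 x 35 x 35
    self.Conv2d_1a_3x3 = conv_block(3, 32, kernel_size=3, stride=2)    # 32 x 149 x 149
    self.Conv2d_2a_3x3 = conv_block(32, 32, kernel_size=3)             # 32 x 147 x 147
    self.Conv2d_2b_3x3 = conv_block(32, 64, kernel_size=3, padding=1)  # 64 x 147 x 147
    self.maxpool1 = nn.MaxPool2d(kernel_size=3, stride=2)              # 64 x 73 x 73
    self.Conv2d_3b_1x1 = conv_block(64, 80, kernel_size=1)             # 80 x 73 x 73
    self.Conv2d_4a_3x3 = conv_block(80, 192, kernel_size=3)            # 192 x 71 x 71
    self.maxpool2 = nn.MaxPool2d(kernel_size=3, stride=2)              # 192 x 35 x 35
    # 35x35 modules
    self.Mixed_5b = inception_a(192, pool_features=32)                 # 256 x 35 x 35
    self.Mixed_5c = inception_a(256, pool_features=64)                 # 288 x 35 x 35
    self.Mixed_5d = inception_a(288, pool_features=64)                 # 288 x 35 x 35
    # Reduction to 17x17, then the modules with factorized 7x7 convolutions
    self.Mixed_6a = inception_b(288)                                   # 768 x 17 x 17
    self.Mixed_6b = inception_c(768, channels_7x7=128)
    self.Mixed_6c = inception_c(768, channels_7x7=160)
    self.Mixed_6d = inception_c(768, channels_7x7=160)
    self.Mixed_6e = inception_c(768, channels_7x7=192)
    # Optional auxiliary classifier attached to the 17x17 stage
    self.AuxLogits: Optional[nn.Module] = None
    if aux_logits:
        self.AuxLogits = inception_aux(768, num_classes)
    # Reduction to 8x8, then the 8x8 modules
    self.Mixed_7a = inception_d(768)                                   # 1280 x 8 x 8
    self.Mixed_7b = inception_e(1280)                                  # 2048 x 8 x 8
    self.Mixed_7c = inception_e(2048)                                  # 2048 x 8 x 8
    # Global average pooling, dropout, and the final classifier
    self.avgpool = nn.AdaptiveAvgPool2d((1, 1))                        # 2048 x 1 x 1
    self.dropout = nn.Dropout()
    self.fc = nn.Linear(2048, num_classes)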
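The layer parameters in the listing above are enough to trace how a 299x299 input shrinks to the 35x35, 17x17, and 8x8 stages. The following is a rough sketch in plain Python using the standard convolution output-size formula. The stem entries are read off the listing. The entries for `Mixed_6a` and `Mixed_7a` are an assumption (an unpadded 3x3 reduction with stride 2), since their internals are not shown here. The branch widths 64, 64, and 96 in `inception_a_out_channels` are likewise assumed from the torchvision `InceptionA` block, and the helper names are hypothetical.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding), taken from the listing above.
STEM = [
    ("Conv2d_1a_3x3", 3, 2, 0),
    ("Conv2d_2a_3x3", 3, 1, 0),
    ("Conv2d_2b_3x3", 3, 1, 1),
    ("maxpool1", 3, 2, 0),
    ("Conv2d_3b_1x1", 1, 1, 0),
    ("Conv2d_4a_3x3", 3, 1, 0),
    ("maxpool2", 3, 2, 0),
]

# Assumption: each reduction module shrinks the grid like an unpadded
# 3x3 layer with stride 2; the other Mixed_* modules keep the size.
REDUCTIONS = [("Mixed_6a", 3, 2, 0), ("Mixed_7a", 3, 2, 0)]


def trace(size=299):
    """Return the spatial size after each size-changing layer."""
    sizes = {}
    for name, kernel, stride, padding in STEM + REDUCTIONS:
        size = out_size(size, kernel, stride, padding)
        sizes[name] = size
    return sizes


def inception_a_out_channels(pool_features):
    """Channels after concatenating the four branches of an InceptionA block.

    Assumed branch widths: 1x1 -> 64, 5x5 -> 64, double 3x3 -> 96, pool -> pool_features.
    """
    return 64 + 64 + 96 + pool_features


if __name__ == "__main__":
    for name, s in trace(299).items():
        print(f"{name:15s} {s}x{s}")
    print("Mixed_5b out channels:", inception_a_out_channels(32))
    print("Mixed_5c out channels:", inception_a_out_channels(64))
```

With a 299x299 input this arithmetic gives 35x35 after the stem, 17x17 after `Mixed_6a`, and 8x8 after `Mixed_7a`. The channel sums 256 and 288 explain the input widths passed to `Mixed_5c` and `Mixed_5d` in the listing.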

Other:

Inception V4:

Key concepts:

BN, Inception, Factorization