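Introduction

ResNet (deep residual network) uses shortcuts (skip connections) to overcome the depth limit of deep neural networks, making it possible to train networks with as many as 1001 layers.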

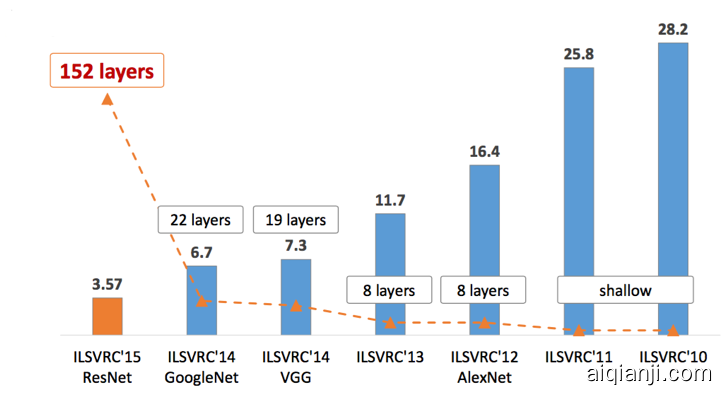

Its 152-layer network took first place at ILSVRC 2015 in ImageNet classification, detection, and localization, as well as in COCO detection and segmentation. On classification it reached a top-5 error rate of 3.57%.

Basic Information

Paper: "Deep Residual Learning for Image Recognition"

PDF: https://arxiv.org/pdf/1512.03385.pdf

Authors: He, Kaiming, et al.

Chinese translation: http://www.aiqianji.com/blog/article/27

Key Contributions

1. Introduces residual connections

2. Uses batch normalization

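Network Architecture

The original ResNet structure is shown in the figure.

The core motivation behind ResNet is the so-called "degradation" problem: as a model gets deeper, its error rate goes up instead of down. This affects the training error too, so it is not simply overfitting. Yet a deeper model has more learning capacity, and its extra layers could in principle just copy their input, so it should not have a higher error rate than its shallower counterpart.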

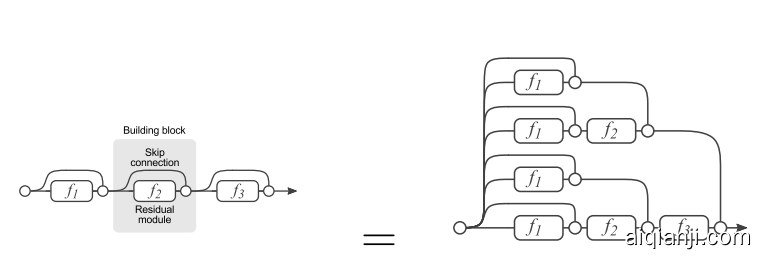

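The basic ResNet building block is shown in the figure. Adding a shortcut introduces an identity mapping, so the function the block originally had to learn, H(x), is rewritten as F(x) + x. The two formulations can express the same functions, but they are not equally easy to optimize. The idea draws on residual vector encoding in image processing, where a reformulation breaks one problem into residual subproblems across multiple scales and makes optimization noticeably easier. This simple structure also handles the degradation problem well as the number of layers grows.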

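ResNet formula:

$$x_l = H_l(x_{l-1}) + x_{l-1}$$

Here $l$ indexes the layer, $x_l$ is the output of layer $l$, and $H_l$ is a nonlinear transformation. In ResNet, the output of layer $l$ is therefore the output of layer $l-1$ plus a nonlinear transformation of that output.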
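
The recurrence described above can be illustrated with a toy, framework-free sketch. Plain Python lists stand in for feature maps, and the names `residual_step`, `zero_transform`, and `halve` are made up for this illustration. It shows that when the transform outputs zeros, a stack of residual layers reduces to the identity mapping, which is why adding layers need not make the model worse.

```python
from typing import Callable, List

Vector = List[float]


def residual_step(x_prev: Vector, transform: Callable[[Vector], Vector]) -> Vector:
    """Compute x_l = H_l(x_{l-1}) + x_{l-1} element-wise."""
    h = transform(x_prev)
    return [h_i + x_i for h_i, x_i in zip(h, x_prev)]


def zero_transform(x: Vector) -> Vector:
    """Hypothetical H_l whose output is all zeros."""
    return [0.0 for _ in x]


def halve(x: Vector) -> Vector:
    """Hypothetical H_l: a simple stand-in for a learned transform."""
    return [0.5 * v for v in x]


x0 = [1.0, -2.0, 4.0]

# Ten residual layers with a zero transform leave the input untouched.
x = x0
for _ in range(10):
    x = residual_step(x, zero_transform)
identity_result = x

# One layer with a non-zero transform adds its output to the input.
halved_result = residual_step(x0, halve)
```

A plain (non-residual) layer would instead have to learn the identity explicitly through its weights, which is the harder optimization problem the reformulation avoids.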

Block design:

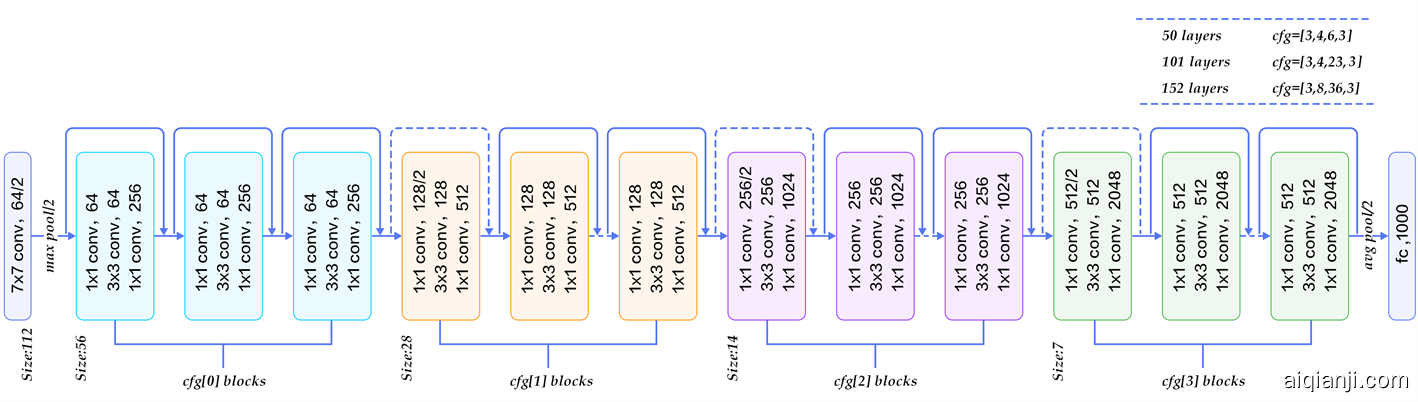
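
Assuming the figure shows the two block designs from the original paper (a basic block of two 3×3 convolutions on 64 channels, and a bottleneck block of 1×1, 3×3, and 1×1 convolutions on a 256-channel input), a quick weight count suggests why the bottleneck is preferred in the deeper variants. The helper `conv_weights` is a name made up here, and biases and batch-normalization parameters are ignored.

```python
def conv_weights(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Number of weights in a bias-free 2D convolution."""
    return in_channels * out_channels * kernel_size * kernel_size


# Basic block: 3x3 (64 -> 64) followed by 3x3 (64 -> 64).
basic_block = conv_weights(64, 64, 3) + conv_weights(64, 64, 3)

# Bottleneck block: 1x1 (256 -> 64), 3x3 (64 -> 64), 1x1 (64 -> 256).
bottleneck = (
    conv_weights(256, 64, 1)
    + conv_weights(64, 64, 3)
    + conv_weights(64, 256, 1)
)

# For comparison: a basic block applied directly to 256 channels.
basic_block_256 = 2 * conv_weights(256, 256, 3)

print(basic_block, bottleneck, basic_block_256)  # 73728 69632 1179648
```

Under these assumptions the bottleneck processes a 256-channel input with slightly fewer weights than the 64-channel basic block, and with far fewer than two 3×3 convolutions at 256 channels.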

The ResNet architectures are compared in the figure:

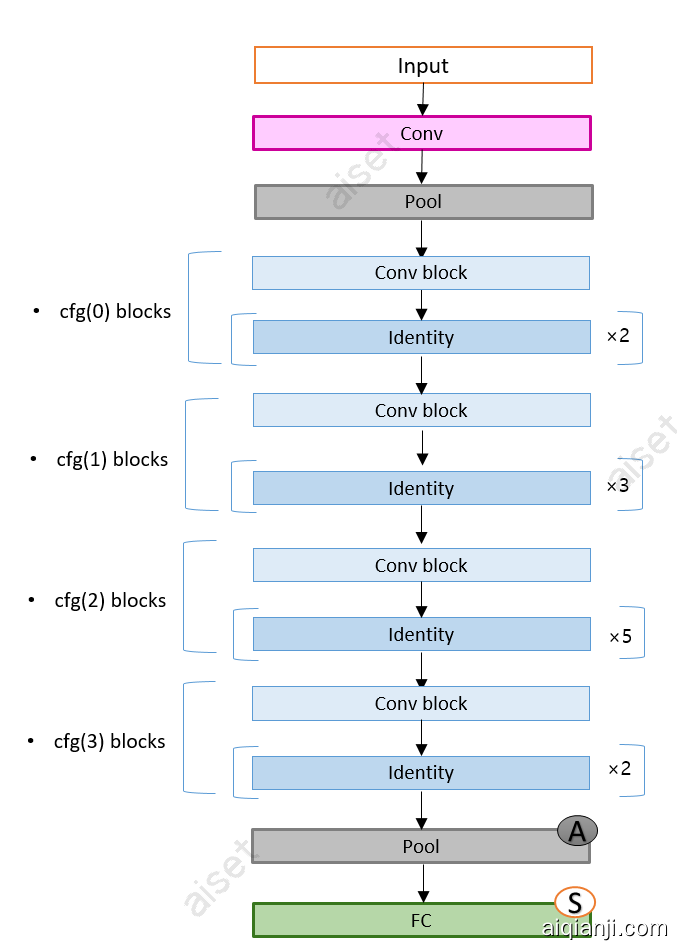

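The feature-map sizes show that the network downsamples several times: conv3_1, conv4_1, and conv5_1 each downsample with stride = 2. The feature maps before and after downsampling differ in size and cannot be added directly, so there are two kinds of blocks. The convolutional (projection) block applies a 1×1 convolution on the shortcut to match the dimensions; the identity block uses a plain shortcut connection. Otherwise the two share essentially the same structure.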
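
The size bookkeeping can be checked with the usual convolution output-size formula. The sketch below assumes a 224×224 input and the kernel and padding values used by common implementations such as torchvision (7×7 stem with padding 3, 3×3 max pooling with padding 1); those values are assumptions, not something stated in the text. `conv_out` is a helper name invented for this example.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output width/height of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224                                             # assumed input size
size = conv_out(size, kernel=7, stride=2, padding=3)   # conv1   -> 112
size = conv_out(size, kernel=3, stride=2, padding=1)   # maxpool -> 56

stage_sizes = {"conv2_x": size}
for stage in ("conv3_x", "conv4_x", "conv5_x"):
    block_input = size
    # Main path: the first block of the stage has a stride-2 3x3 convolution.
    main_path = conv_out(block_input, kernel=3, stride=2, padding=1)
    # Projection shortcut: a stride-2 1x1 convolution reaches the same size.
    projection = conv_out(block_input, kernel=1, stride=2, padding=0)
    # The untouched input no longer matches, so an identity shortcut cannot be used.
    assert main_path == projection != block_input
    size = main_path
    stage_sizes[stage] = size

print(stage_sizes)  # {'conv2_x': 56, 'conv3_x': 28, 'conv4_x': 14, 'conv5_x': 7}
```

The 1×1 projection also changes the channel count, which this sketch leaves out because it only tracks spatial size.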

Abstracted, the structure is shown in the figure:

Source code:

TensorFlow: https://github.com/tensorflow/models/tree/master/research/slim/nets/resnet_v1.py

https://github.com/tensorflow/models/tree/master/research/slim/nets/resnet_v2.py

Caffe: https://github.com/KaimingHe/deep-residual-networks

Torch: https://github.com/facebook/fb.resnet.torch

PyTorch: https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

Exercise: build the network in PyTorch for 10-class classification on 32×32 CIFAR-10 images.

Hand-written example:

    import torch.nn as nn
    import torch.nn.functional as F


    class BasicBlock(nn.Module):
        """Residual block with two 3x3 convolutions."""
        expansion = 1  # output channels = planes * expansion

        def __init__(self, in_planes, planes, stride=1):
            super(BasicBlock, self).__init__()
            self.conv1 = nn.Conv2d(
                in_planes, planes, kernel_size=3, stride=stride, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(planes)
            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3,
                                   stride=1, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(planes)

            # Identity shortcut by default (an empty Sequential returns its input).
            self.shortcut = nn.Sequential()
            # Projection shortcut when the spatial size or channel count changes.
            if stride != 1 or in_planes != self.expansion*planes:
                self.shortcut = nn.Sequential(
                    nn.Conv2d(in_planes, self.expansion*planes,
                              kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(self.expansion*planes)
                )

        def forward(self, x):
            out = F.relu(self.bn1(self.conv1(x)))
            out = self.bn2(self.conv2(out))
            out += self.shortcut(x)  # F(x) + x
            out = F.relu(out)
            return out


    class Bottleneck(nn.Module):
        """Residual block with 1x1, 3x3, and 1x1 convolutions."""
        expansion = 4  # the last 1x1 convolution expands the channels 4x

        def __init__(self, in_planes, planes, stride=1):
            super(Bottleneck, self).__init__()
            self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=1, bias=False)
            self.bn1 = nn.BatchNorm2d(planes)
            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3,
                                   stride=stride, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(planes)
            self.conv3 = nn.Conv2d(planes, self.expansion *
                                   planes, kernel_size=1, bias=False)
            self.bn3 = nn.BatchNorm2d(self.expansion*planes)

            # Identity shortcut by default, projection when shapes differ.
            self.shortcut = nn.Sequential()
            if stride != 1 or in_planes != self.expansion*planes:
                self.shortcut = nn.Sequential(
                    nn.Conv2d(in_planes, self.expansion*planes,
                              kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(self.expansion*planes)
                )

        def forward(self, x):
            out = F.relu(self.bn1(self.conv1(x)))
            out = F.relu(self.bn2(self.conv2(out)))
            out = self.bn3(self.conv3(out))
            out += self.shortcut(x)  # F(x) + x
            out = F.relu(out)
            return out


    class ResNet(nn.Module):
        """ResNet for 32x32 inputs: 3x3 stem, four stages, global pooling, linear."""

        def __init__(self, block, num_blocks, num_classes=10):
            super(ResNet, self).__init__()
            self.in_planes = 64

            # A stride-1 3x3 stem keeps the 32x32 resolution.
            self.conv1 = nn.Conv2d(3, 64, kernel_size=3,
                                   stride=1, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            self.layer1 = self._make_layer(block, 64, num_blocks[0], stride=1)
            self.layer2 = self._make_layer(block, 128, num_blocks[1], stride=2)
            self.layer3 = self._make_layer(block, 256, num_blocks[2], stride=2)
            self.layer4 = self._make_layer(block, 512, num_blocks[3], stride=2)
            self.linear = nn.Linear(512*block.expansion, num_classes)

        def _make_layer(self, block, planes, num_blocks, stride):
            # Only the first block of a stage downsamples; the rest use stride 1.
            strides = [stride] + [1]*(num_blocks-1)
            layers = []
            for stride in strides:
                layers.append(block(self.in_planes, planes, stride))
                self.in_planes = planes * block.expansion
            return nn.Sequential(*layers)

        def forward(self, x):
            out = F.relu(self.bn1(self.conv1(x)))
            out = self.layer1(out)
            out = self.layer2(out)
            out = self.layer3(out)
            out = self.layer4(out)
            out = F.avg_pool2d(out, 4)       # 4x4 feature map -> 1x1
            out = out.view(out.size(0), -1)  # flatten to (batch, channels)
            out = self.linear(out)
            return out
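
As a sanity check on the network above, the following stdlib-only sketch counts the weighted layers and traces the spatial size for a 32×32 input. The block counts `[2, 2, 2, 2]` (BasicBlock) and `[3, 4, 6, 3]` (Bottleneck) are the standard ResNet18 and ResNet50 configurations and are assumed here, since the listing does not spell them out. The helper names are invented for the example.

```python
def weighted_layers(num_blocks, convs_per_block):
    """Stem conv + main-path convs of every block + final linear layer.

    Projection-shortcut convolutions are not counted, following the usual
    naming convention.
    """
    return 1 + convs_per_block * sum(num_blocks) + 1


def final_feature_map(input_size, stage_strides=(1, 2, 2, 2)):
    """Spatial size after layer1..layer4 (3x3 convolutions, padding 1)."""
    size = input_size  # the stride-1 stem keeps the input size
    for stride in stage_strides:
        size = (size + 2 * 1 - 3) // stride + 1
    return size


# Assumed configurations: (convs per block, expansion, blocks per stage).
configs = {
    "ResNet18": (2, 1, [2, 2, 2, 2]),  # BasicBlock
    "ResNet50": (3, 4, [3, 4, 6, 3]),  # Bottleneck
}

feature_map = final_feature_map(32)   # 32 -> 32 -> 16 -> 8 -> 4
pooled = (feature_map - 4) // 4 + 1   # F.avg_pool2d(out, 4) -> 1

summary = {}
for name, (convs, expansion, num_blocks) in configs.items():
    summary[name] = {
        "layers": weighted_layers(num_blocks, convs),
        "linear_in_features": 512 * expansion * pooled * pooled,
    }

print(summary)
```

With the classes above, these configurations would correspond to `ResNet(BasicBlock, [2, 2, 2, 2])` and `ResNet(Bottleneck, [3, 4, 6, 3])`. The 4×4 final feature map is why the forward pass pools with a window of 4 and why the linear layer takes `512*block.expansion` inputs.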

Results:

ResNet18: best accuracy 90.050%

ResNet50: best accuracy 90.340%

Improvements:

Identity Mappings in Deep Residual Networks

The 2016 paper "Identity Mappings in Deep Residual Networks" shows that reworking the residual block so that the shortcut is a pure identity mapping yields higher accuracy.
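
The argument behind that result can be sketched in two equations. They are written in the notation used earlier in this article ($x_l$ is the output of layer $l$, $H_l$ the nonlinear transformation), not the paper's own symbols, and they assume every shortcut is an exact identity with no activation applied after the addition. $E$ denotes the loss and $L$ any deeper layer; both symbols are introduced here for illustration.

$$x_L = x_l + \sum_{i=l+1}^{L} H_i(x_{i-1})$$

$$\frac{\partial E}{\partial x_l} = \frac{\partial E}{\partial x_L}\left(1 + \frac{\partial}{\partial x_l}\sum_{i=l+1}^{L} H_i(x_{i-1})\right)$$

The first equation follows from unrolling $x_l = H_l(x_{l-1}) + x_{l-1}$. In the second, the constant term 1 means the gradient at layer $L$ reaches layer $l$ directly, without passing through any weight layer, so it is unlikely to vanish even in a very deep network.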