LIMR: Less is More for RL Scaling

Abstract

In this paper, we ask: what truly determines the effectiveness of RL training data for enhancing language models’ reasoning capabilities? While recent advances such as o1, Deepseek R1, and Kimi1.5 demonstrate RL’s potential, the lack of transparency about their training data requirements has hindered systematic progress. Starting directly from base models without distillation, we challenge the assumption that scaling up RL training data inherently improves performance, and we demonstrate that a strategically selected subset of just 1,389 samples can match or outperform the full 8,523-sample dataset. We introduce Learning Impact Measurement (LIM), an automated method that evaluates and prioritizes training samples based on how well they align with the model’s learning trajectory, enabling efficient resource utilization and scalable implementation. Notably, while recent data-efficient approaches (e.g., LIMO and s1) show promise with 32B-scale models, we find that they significantly underperform at the 7B scale when applied through supervised fine-tuning (SFT). In contrast, our RL-based LIMR achieves 16.7 percentage points higher accuracy on AIME24 and outperforms LIMO and s1 by 13.0 and 22.2 percentage points on MATH500. These results fundamentally reshape our understanding of RL scaling in LLMs, demonstrating that precise sample selection, rather than data scale, may be the key to unlocking enhanced reasoning capabilities. For reproducible research and future innovation, we are open-sourcing LIMR, including the implementation of LIM, training and evaluation code, curated datasets, and trained models at https://github.com/GAIR-NLP/LIMR.

Figure 1: (a) Accuracy on AIME24 when RL is trained on different datasets, without any data distillation or SFT as a cold start. Our curated LIMR dataset, a strategically selected subset of the full MATH (levels 3-5) dataset, achieves comparable accuracy while using less than one-sixth of the data. Notably, LIMR significantly outperforms a randomly selected dataset of equal size, demonstrating the effectiveness of our selective dataset construction. (b) A comparison of different data-efficient models. Directly applying SFT on the LIMO (Ye et al., 2025) and s1 (Muennighoff et al., 2025) datasets with Qwen-Math-7B yields significantly worse results than applying RL with LIMR, implying that, for small models, RL is more effective at achieving data efficiency.

1 Introduction

Recent advances in Large Language Models (LLMs) have demonstrated the remarkable effectiveness of reinforcement learning (RL) in enhancing complex reasoning capabilities. Models like o1 (OpenAI, 2024), Deepseek R1 (Guo et al., 2025), and Kimi1.5 (Team et al., 2025) have shown that RL training can naturally induce sophisticated reasoning behaviors, including self-verification, reflection, and extended chains of thought. However, a critical gap exists in our understanding of RL training: these pioneering works provide limited transparency about their training data scale, making it challenging for the research community to build upon their success. Follow-up open-source efforts (Table 1) have explored diverse experimental scenarios, from base models to distilled long-form chain-of-thought models, with RL data volumes ranging from 8K (Zeng et al., 2025) to 150K (Cui et al., 2025), but they offer no clear guidance on optimal data requirements or scaling principles. In this work, we explore the scaling dynamics of RL training data by focusing on a foundational scenario: starting directly from base models without distillation (similar to the RL scaling setting of Deepseek R1-Zero).

This lack of understanding of RL training data requirements presents several fundamental challenges:

More importantly, this uncertainty raises a crucial question: Is scaling up RL training data truly the key to improving model performance, or are we overlooking more fundamental factors such as sample quality and selection criteria?

In this work, we challenge the assumption that larger RL training datasets necessarily lead to better performance. Our key insight is that the quality and relevance of training samples matter far more than their quantity. Through extensive empirical analysis, we make several surprising observations that fundamentally change our understanding of RL training dynamics:

- We find that a carefully selected subset of RL training samples (1,389) can achieve comparable or even superior performance compared to training with the full dataset (8,523).
- Most importantly, we develop an automated quantitative method for evaluating the potential value of RL training samples. Our method, which we call Learning Impact Measurement (LIM), can effectively predict which samples will contribute most significantly to model improvement. This automated approach eliminates the need for manual sample curation and makes our methodology easily scalable.
- Recent approaches like LIMO and s1 have demonstrated the data efficiency of distilled reasoning data through supervised fine-tuning of 32B models. We find that at the 7B scale, these methods significantly underperform. Our RL-based LIMR achieves 16.7 percentage points higher accuracy on AIME24 (32.5% vs. 15.8%) and surpasses LIMO and s1 by 13.0 and 22.2 percentage points on MATH500 (78.0% vs. 65.0% and 55.8%), suggesting that RL may be more effective for enhancing reasoning capabilities in data-sparse scenarios.

Our findings have significant implications for the field of LLM development. They suggest that the path to better reasoning capabilities may not lie in simply scaling up RL training data, but rather in being more selective about which samples to use. This insight could dramatically reduce the computational resources required for effective RL training while potentially improving final model performance. Furthermore, our automated sample evaluation method provides a practical tool for researchers and practitioners to apply these insights in their own work. For reproducible research and future innovation, we openly release all LIMR artifacts, including the LIMR dataset and model, all training and evaluation code, and the implementation details of LIM.

2 Methodology

We present Learning Impact Measurement (LIM), a systematic approach to quantify and optimize the value of training data in reinforcement learning. Our method addresses the critical challenge of data efficiency in RL training by analyzing learning dynamics to identify the most effective training samples.

2.1 Learning Dynamics in RL Training

To understand the relationship between training data and model improvement, we conducted an extensive analysis using the MATH-FULL dataset (Hendrycks et al., 2021), which contains 8,523 mathematical problems at difficulty levels 3-5. Our investigation reveals that, contrary to the conventional practice of treating all samples uniformly, different training samples contribute unequally to model learning. As illustrated in Figure 2a, we observe diverse learning trajectories: some samples exhibit stable performance patterns, while others show complex learning dynamics that appear to drive significant model improvements.

| Method | Init Model | Long CoT Distillation | #Questions |
|---|---|---|---|
| STILL-3 (Team, 2025b) | Instruct | Yes | 29,925 |
| DeepScaleR (Luo et al., 2025) | Instruct | Yes | 40,314 |
| Sky-T1 (Team, 2025a) | Instruct | Yes | 45,000 |
| THUDM-T1 (Hou et al., 2025) | Instruct | No | 30,000 |
| PRIME (Cui et al., 2025) | Instruct | No | 150,000 |
| SimpleRL (Zeng et al., 2025) | Base | No | 8,523 |
| LIMR | Base | No | 1,389 |

Table 1: Comparison of various methods. “Init Model” refers to the type of the initial actor model; we perform RL directly on the base model. “Long CoT Distillation” indicates whether the initial model is distilled on long CoT data as a cold start.

Figure 2: (a) Learning dynamics of training samples from the MATH-FULL dataset across epochs. Solution reward trajectories reveal diverse patterns: samples that maintain near-zero rewards, samples that quickly achieve high rewards, and samples that show dynamic learning progress with varying improvement rates. (b) Sample learning trajectories compared against the average reward curve (red). Higher LIM scores reflect better alignment with the model’s learning trajectory, so trajectories with growth patterns similar to the average curve receive higher scores.

These observations lead to our key insight: the value of training data in RL can be systematically measured by examining how well individual samples align with the model’s overall learning progression. This understanding forms the foundation of LIM, our proposed method for quantifying sample effectiveness.

2.2 Learning Impact Measurement (LIM)

LIM centers on a model-aligned trajectory analysis that evaluates training samples based on their contribution to model learning. Our key finding is that samples whose learning patterns complement the model’s overall performance trajectory tend to be more valuable for optimization.

2.2.1 Model-aligned Trajectory Analysis

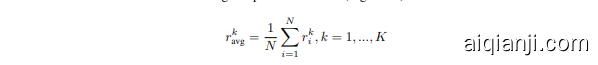

Given that neural network learning typically follows a logarithmic growth pattern, we use the model’s average reward curve as a reference for measuring sample effectiveness (Figure 2b):

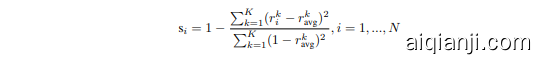

where $r_{i}^{k}$ represents the reward of sample $i$ at epoch $k$, and $N$ is the total number of samples. For each sample, LIM computes a normalized alignment score:

This score quantifies how well a sample’s learning pattern aligns with the model’s overall learning trajectory, with higher scores indicating better alignment.
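
To make the two quantities concrete, here is a minimal NumPy sketch. It assumes per-epoch rewards are stored in an `N × K` array with values in [0, 1] ($N$ samples, $K$ epochs), and it assumes the normalized alignment score has the form $s_i = 1 - \sum_k (r_i^k - r_{\text{avg}}^k)^2 / \sum_k (1 - r_{\text{avg}}^k)^2$, i.e. one minus the sample's squared distance from the average curve, scaled by the distance of an always-correct trajectory. That form, and the helper name `lim_scores`, are our assumptions for illustration, not the authors' released implementation.

```python
import numpy as np


def lim_scores(rewards: np.ndarray) -> np.ndarray:
    """Alignment score of each sample's reward trajectory with the average curve.

    rewards: array of shape (N, K); rewards[i, k] is the reward of sample i at
    epoch k, assumed to lie in [0, 1].
    Returns an array of shape (N,): a sample that exactly follows the average
    curve scores 1, and larger deviations give lower scores.
    """
    r_avg = rewards.mean(axis=0)                    # average reward curve, shape (K,)
    sq_dev = ((rewards - r_avg) ** 2).sum(axis=1)   # squared distance to the curve, shape (N,)
    norm = ((1.0 - r_avg) ** 2).sum()               # distance of an always-correct trajectory
    return 1.0 - sq_dev / max(norm, 1e-12)          # guard against an all-ones average curve


rewards = np.array([
    [0.2, 0.6, 0.8],   # slightly above the average curve
    [0.0, 0.4, 0.6],   # slightly below the average curve
    [0.1, 0.5, 0.7],   # identical to the average curve
])
scores = lim_scores(rewards)
```

Under this assumed form the score is symmetric in over- and under-shooting the average curve, and it can become negative for trajectories far from it; only the ordering and the threshold matter for selection.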

2.2.2 Sample Selection Strategy

Based on the alignment scores, LIM implements a selective sampling strategy that retains sample $i$ only if $s_{i}>\theta$, where $\theta$ is a quality threshold that can be adjusted to specific requirements. In our experiments, setting $\theta=0.6$ yielded an optimized dataset (LIMR) of 1,389 high-value samples from the original dataset.
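
The threshold rule itself is a one-line filter. The sketch below (hypothetical helper names, plain NumPy, toy scores) also sweeps several values of $\theta$, which is a cheap way to see how strongly the subset size depends on the threshold before committing to one.

```python
import numpy as np


def select_samples(scores: np.ndarray, theta: float = 0.6) -> np.ndarray:
    """Indices of samples whose alignment score strictly exceeds theta."""
    return np.flatnonzero(scores > theta)


def subset_sizes(scores: np.ndarray, thetas: list[float]) -> dict[float, int]:
    """Number of samples kept for each candidate threshold."""
    return {theta: int((scores > theta).sum()) for theta in thetas}


scores = np.array([0.95, 0.60, 0.30, 0.75, 0.61])
kept = select_samples(scores, theta=0.6)        # strict inequality drops the 0.60 sample
sizes = subset_sizes(scores, [0.5, 0.6, 0.7, 0.9])
```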

2.3 Baseline Data Selection Methods

While developing our core methodology, we explored several alternative approaches that helped inform and validate our final method. These approaches provide valuable insights into data selection in RL.

Random Sampling baseline (RAND) randomly selects 1,389 samples from MATH-FULL to match the size of our main approach, providing a fundamental reference point for evaluating selective sampling effectiveness.
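
A reproducible version of this baseline only needs a seeded sampler over dataset indices. The helper name `random_subset` and the seed value below are arbitrary assumptions; the text does not state which seed or sampler was used.

```python
import random


def random_subset(num_total: int, k: int, seed: int = 0) -> list[int]:
    """Draw k distinct dataset indices uniformly at random, without replacement."""
    rng = random.Random(seed)          # local RNG, so global random state is untouched
    return sorted(rng.sample(range(num_total), k))


rand_indices = random_subset(num_total=8523, k=1389, seed=0)
```

Fixing the seed makes the RAND subset identical across runs, so differences against LIMR are not confounded by a different random draw each time.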

Linear Progress Analysis method (LINEAR) evaluates samples based on their consistency in showing steady improvements across training epochs. While this approach captures samples with gradual progress, it often misses valuable samples that show rapid early gains followed by stabilization. Using a threshold of $\theta=0.7$ , this method yields 1,189 samples.
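
The text does not give the exact LINEAR scoring rule. As a hypothetical illustration only, one could reuse the alignment-score idea with a straight-line reference that rises evenly from 0 to 1 across epochs. The sketch (helper name `linear_score` is ours) shows why such a rule penalizes the "rapid early gain, then stabilization" pattern described above.

```python
import numpy as np


def linear_score(trajectory: np.ndarray) -> float:
    """Hypothetical LINEAR score: closeness to an evenly rising 0 -> 1 reference."""
    ref = np.linspace(0.0, 1.0, len(trajectory))   # steady-improvement reference curve
    sq_dev = ((trajectory - ref) ** 2).sum()
    norm = ((1.0 - ref) ** 2).sum()                 # deviation of an always-correct trajectory
    return float(1.0 - sq_dev / norm)


steady = np.array([0.0, 0.25, 0.5, 0.75, 1.0])    # gradual, linear progress
early = np.array([0.0, 1.0, 1.0, 1.0, 1.0])       # rapid early gain, then stable

steady_score = linear_score(steady)   # exactly on the reference line
early_score = linear_score(early)     # 8/15, about 0.53
```

With a threshold of 0.7, the steadily improving sample would be kept and the early-saturating one dropped, even though the latter was clearly learned.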

2.4 Reward Design

Similar to Deepseek R1 (Guo et al., 2025), we use a rule-based reward function. Specifically, a correct answer receives a reward of 1, an incorrect but properly formatted answer receives -0.5, and an answer with formatting errors receives -1. Formally:

$$
\text{Reward}(\text{answer}) =
\begin{cases}
1, & \text{if the answer is correct},\\
-0.5, & \text{if the answer is incorrect but properly formatted},\\
-1, & \text{if the answer has formatting errors}.
\end{cases}
$$
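
A minimal sketch of this three-level reward follows. How "properly formatted" is decided is not specified here, so the code assumes a hypothetical convention: the final answer must appear inside a LaTeX `\boxed{...}`, and correctness is an exact string match after trimming whitespace. The helper names are ours; a production checker would also normalize mathematically equivalent answers (for example `0.5` and `\frac{1}{2}`).

```python
from typing import Optional


def extract_boxed(response: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the response, or None."""
    start = response.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(response)):           # scan forward, honoring nested braces
        if response[j] == "{":
            depth += 1
        elif response[j] == "}":
            depth -= 1
            if depth == 0:
                return response[i:j].strip()
    return None                                  # unbalanced braces count as a format error


def rule_based_reward(response: str, reference: str) -> float:
    """1 for a correct answer, -0.5 for a well-formatted wrong one, -1 otherwise."""
    answer = extract_boxed(response)
    if answer is None:
        return -1.0
    return 1.0 if answer == reference.strip() else -0.5
```

Brace matching is used instead of a simple regex so that answers such as `\boxed{\frac{1}{2}}` are extracted whole rather than treated as format errors.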

3 Experiment

3.1 Experimental Setup

Training. We conduct RL training using the PPO algorithm (Schulman et al., 2017) as implemented in the OpenRLHF framework (Hu et al., 2024). Using Qwen2.5-Math-7B (Yang et al., 2024) as the initial policy model, we set the rollout batch size to 1,024 and generate 8 samples per prompt with a temperature of 1.2 during exploration. Training uses a batch size of 256, learning rates of 5e-7 and 9e-6 for the actor and critic models respectively, and a KL coefficient of 0.01.
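
The settings above imply a few derived quantities worth checking when reproducing the run. The sketch collects them in a plain dataclass; the field names are ours, not OpenRLHF's option names, and it assumes that "rollout batch size" counts prompts (so each rollout yields 1,024 × 8 responses) and that the rollout buffer is consumed once in minibatches of 256.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PPOSettings:
    """Hyperparameters reported in the text (field names are illustrative)."""
    rollout_batch_size: int = 1024     # prompts per rollout (assumed unit)
    samples_per_prompt: int = 8
    temperature: float = 1.2
    train_batch_size: int = 256
    actor_lr: float = 5e-7
    critic_lr: float = 9e-6
    kl_coef: float = 0.01

    @property
    def responses_per_rollout(self) -> int:
        return self.rollout_batch_size * self.samples_per_prompt

    @property
    def updates_per_rollout(self) -> int:
        # Minibatch updates for a single pass over the rollout buffer.
        return self.responses_per_rollout // self.train_batch_size


cfg = PPOSettings()
```

Under these assumptions, one rollout produces 8,192 responses and 32 minibatch updates, and a single rollout already covers most of the 1,389 LIMR prompts.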

Evaluation. We evaluate on multiple challenging benchmarks: (1) MATH500; (2) AIME2024; and (3) AMC2023. To accelerate evaluation, we use the vLLM framework (Kwon et al., 2023). Because AIME24 and AMC23 contain few questions (30 and 40, respectively), we sample 4 responses per question with a temperature of 0.4. For MATH500, we use greedy decoding.
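
When several responses are sampled per question, a natural score is the mean accuracy over all sampled answers. The sketch assumes that is how the AIME24 and AMC23 numbers are aggregated (the text does not say so explicitly); `mean_accuracy` is a hypothetical helper, and the correctness data is a toy example.

```python
def mean_accuracy(correct: list[list[bool]]) -> float:
    """Accuracy in percent, averaged over questions and over the samples per question.

    correct[q][s] is True if sample s for question q was judged correct.
    """
    per_question = [sum(runs) / len(runs) for runs in correct]
    return 100.0 * sum(per_question) / len(per_question)


# 30 AIME-style questions, 4 samples each:
# 9 always solved, 2 solved in half of the samples, 19 never solved.
results = [[True] * 4] * 9 + [[True, True, False, False]] * 2 + [[False] * 4] * 19
acc = mean_accuracy(results)   # (9 + 2 * 0.5) / 30, about 33.33
```

Under this assumption, AIME24 scores move in steps of 100/120 ≈ 0.83 points and AMC23 scores in steps of 100/160 = 0.625 points; for instance, 32.5 on AIME24 corresponds to 39 correct answers out of 120.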

3.2 Main Results

Table 2: Main results on difficult math benchmarks.

| Method | #Questions | AIME2024 | MATH500 | AMC2023 | Avg. |
|---|---|---|---|---|---|
| Qwen-Math-7B | - | 16.7 | 52.4 | 52.5 | 40.5 |
| Qwen-Math-7B-FULL | 8,523 | 32.5 | 76.6 | 61.9 | 57.0 |
| Qwen-Math-7B-RAND | 1,389 | 25.8 | 66.0 | 56.3 | 49.4 |
| Qwen-Math-7B-LINEAR | 1,138 | 28.3 | 74.6 | 61.9 | 54.9 |
| LIMR | 1,389 | 32.5 | 78.0 | 63.8 | 58.1 |

As shown in Table 2, directly applying RL to Qwen-Math-7B with the MATH-FULL dataset yields a significant performance improvement. Different data selection strategies, however, lead to notable variations in performance. Training on MATH-RAND lowers average accuracy by 7.6 points relative to the full dataset (49.4 vs. 57.0), whereas MATH-LINEAR incurs only a 2.1-point loss (54.9 vs. 57.0). More notably, LIMR, despite reducing the dataset size by more than 80%, performs on par with and even slightly above MATH-FULL on average (58.1 vs. 57.0). This further supports the notion that in RL, only a small subset of questions plays a critical role.
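
The Avg. column in Table 2 can be re-derived from the per-benchmark scores. The snippet below only copies the table values (no new results), recomputes the unweighted mean over the three benchmarks, and computes the fraction of MATH-FULL that LIMR keeps.

```python
# Per-benchmark accuracy (%) copied from Table 2: (AIME2024, MATH500, AMC2023).
TABLE2 = {
    "Qwen-Math-7B": (16.7, 52.4, 52.5),
    "Qwen-Math-7B-FULL": (32.5, 76.6, 61.9),
    "Qwen-Math-7B-RAND": (25.8, 66.0, 56.3),
    "Qwen-Math-7B-LINEAR": (28.3, 74.6, 61.9),
    "LIMR": (32.5, 78.0, 63.8),
}


def average(scores: tuple[float, ...]) -> float:
    """Unweighted mean over benchmarks, rounded to one decimal as in the table."""
    return round(sum(scores) / len(scores), 1)


averages = {name: average(scores) for name, scores in TABLE2.items()}
kept_fraction = 1389 / 8523   # share of MATH-FULL retained by LIMR, about 16.3%
```

LIMR therefore keeps roughly 16.3% of the questions, a reduction of about 84%, and the recomputed averages match the table.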

Figure 3: Performance and training dynamics

Additionally, we analyze the evolution of various metrics during RL training on the MATH-FULL, MATH-RAND, and LIMR datasets. As shown in Figure 3a, the accuracy curves of LIMR and MATH-FULL are nearly identical, and both significantly outperform MATH-RAND. Meanwhile, Figure 3b indicates that the training curve for MATH-FULL exhibits instability i